[CI/CD] Jenkins CI/ArgoCD + K8S

Introduction

This week we will build a CI/CD pipeline that deploys to Kubernetes using Jenkins and ArgoCD.

Jenkins CI/ArgoCD + K8S

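Lab environment setup

- In this lab we install Jenkins and Gogs on the development PC, create a Kubernetes cluster with Kind, and install ArgoCD to build the CI/CD pipeline.

Installing Jenkins and Gogs

- Jenkins was already installed in week 1, but we will walk through the installation once more.

- This time, instead of the GitLab cloud service, we run Gogs on our own PC.

# Create a working directory and move into it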

$ mkdir cicd-labs

$ cd cicd-labs

#

$ cat <<EOT > docker-compose.yaml
services:
  jenkins:
    container_name: jenkins
    image: jenkins/jenkins
    restart: unless-stopped
    networks:
      - cicd-network
    ports:
      - "8080:8080"
      - "50000:50000"
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
      - jenkins_home:/var/jenkins_home
  gogs:
    container_name: gogs
    image: gogs/gogs
    restart: unless-stopped
    networks:
      - cicd-network
    ports:
      - "10022:22"
      - "3000:3000"
    volumes:
      - gogs-data:/data
volumes:
  jenkins_home:
  gogs-data:
networks:
  cicd-network:
    driver: bridge
EOT

# Deploy

$ docker compose up -d

# => [+] Running 2/2

# ⠿ Container jenkins Started 0.5s

# ⠿ Container gogs Started 0.5s

$ docker compose ps

# => NAME COMMAND SERVICE STATUS PORTS

# gogs "/app/gogs/docker/st…" gogs running (healthy) 0.0.0.0:10022->22/tcp, 0.0.0.0:3000->3000/tcp

# jenkins "/usr/bin/tini -- /u…" jenkins running 0.0.0.0:8080->8080/tcp, 0.0.0.0:50000->50000/tcp

# Check basic information (user and working directory) in each container

$ for i in gogs jenkins ; do echo ">> container : $i <<"; docker compose exec $i sh -c "whoami && pwd"; echo; done

# => >> container : gogs <<

# root

# /app/gogs

#

# >> container : jenkins <<

# jenkins

# /

# Open a shell in each container through Docker

$ docker compose exec jenkins bash

$ exit

$ docker compose exec gogs bash

$ exit

Jenkins container initial setup

# Check the initial Jenkins admin password

$ docker compose exec jenkins cat /var/jenkins_home/secrets/initialAdminPassword

# => 3da38dccc7d14d1a8bee4b02c4e09da8

# Open the Jenkins web UI and create the admin account used in this lab >> **admin / qwe123**

$ open "http://127.0.0.1:8080" # macOS

# (Optional) Follow the logs to watch the plugin installation

$ docker compose logs jenkins -f

# Check the host IP (macOS)

$ ifconfig | grep "inet " | grep -v 127.0.0

# => inet 10.0.4.3 netmask 0xffffff00 broadcast 10.0.4.255

# or

$ ipconfig getifaddr en0

# => 10.0.4.3

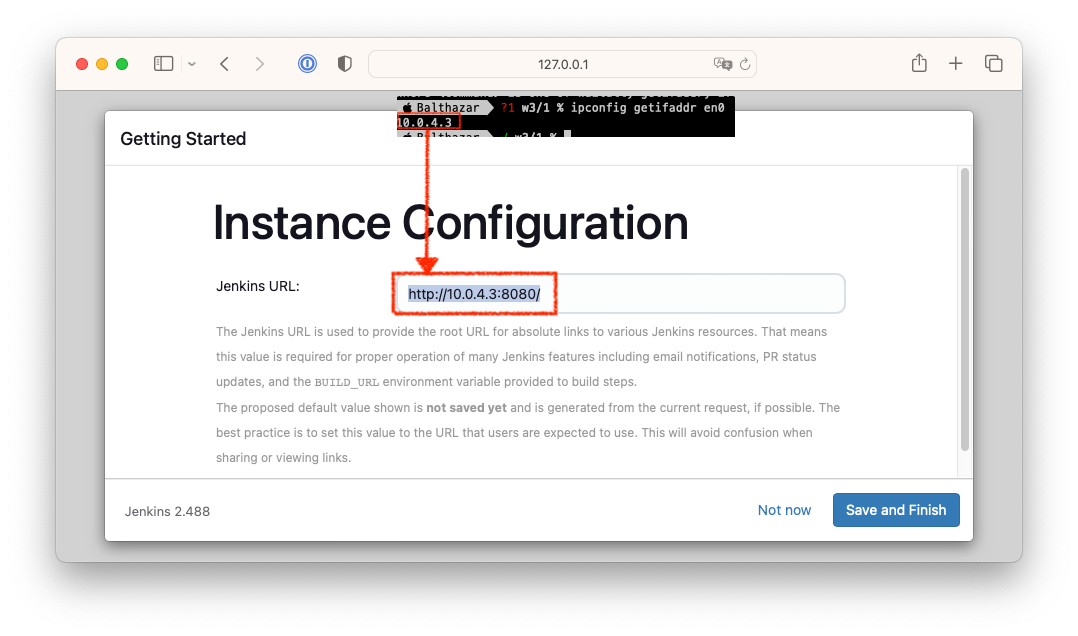

- Configure the Jenkins URL

Set the Jenkins URL using the IP address found above.

- As in week 1, we use Docker-out-of-Docker (DooD). See the week 1 post for details.

# Install the Docker CLI inside the Jenkins container

$ docker compose exec --privileged -u root jenkins bash

-----------------------------------------------------

$ id

# => uid=0(root) gid=0(root) groups=0(root)

$ curl -fsSL https://download.docker.com/linux/debian/gpg -o /etc/apt/keyrings/docker.asc

$ chmod a+r /etc/apt/keyrings/docker.asc

$ echo \

"deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/debian \

$(. /etc/os-release && echo "$VERSION_CODENAME") stable" | \

tee /etc/apt/sources.list.d/docker.list > /dev/null

$ apt-get update && apt install docker-ce-cli curl tree jq yq -y

$ docker info

# => Client: Docker Engine - Community

# Version: 27.4.1

# Context: default

# Debug Mode: false

# Plugins:

# buildx: Docker Buildx (Docker Inc.)

# Version: v0.19.3

# Path: /usr/libexec/docker/cli-plugins/docker-buildx

# compose: Docker Compose (Docker Inc.)

# Version: v2.32.1

# Path: /usr/libexec/docker/cli-plugins/docker-compose

#

# Server:

# Containers: 29

# Running: 2

# Paused: 0

# Stopped: 27

# Images: 42

# Server Version: 20.10.12

# Storage Driver: overlay2

# Backing Filesystem: extfs

# Supports d_type: true

# Native Overlay Diff: true

# userxattr: false

# Logging Driver: json-file

# Cgroup Driver: cgroupfs

# Cgroup Version: 2

# ...

# Insecure Registries:

# hubproxy.docker.internal:5000

# 127.0.0.0/8

# Live Restore Enabled: false

$ docker ps

# => CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

# 110494b9ca48 gogs/gogs "/app/gogs/docker/st…" 14 hours ago Up 37 minutes (healthy) 0.0.0.0:3000->3000/tcp, 0.0.0.0:10022->22/tcp gogs

# 8b7c591c828d jenkins/jenkins "/usr/bin/tini -- /u…" 14 hours ago Up 37 minutes 0.0.0.0:8080->8080/tcp, 0.0.0.0:50000->50000/tcp jenkins

# 👉 Because this is DooD, the containers running on the host are visible.

$ which docker

# => /usr/bin/docker

# Allow the non-root jenkins user inside the Jenkins container to run docker

$ groupadd -g 2000 -f docker # macOS (container)

# $ groupadd -g 1001 -f docker # Windows WSL2 (container) >> use the docker group ID shown by cat /etc/group

$ chgrp docker /var/run/docker.sock

$ chmod g+w /var/run/docker.sock

$ ls -l /var/run/docker.sock

# => srwxrwxr-x 1 root docker 0 Oct 01 06:03 /var/run/docker.sock

$ usermod -aG docker jenkins

$ cat /etc/group | grep docker

# => docker:x:2000:jenkins

exit

--------------------------------------------

# Jenkins items fail with a docker permission error until the container is restarted : restart the Jenkins container so the Jenkins app picks up the settings above

$ docker compose restart jenkins

# => [+] Running 1/1

# ⠿ Container jenkins Started 0.9s

# $ sudo docker compose restart jenkins # On Windows, prefix the commands with sudo from here on

# Verify that the jenkins user can run docker commands

$ docker compose exec jenkins id

# => uid=1000(jenkins) gid=1000(jenkins) groups=1000(jenkins),2000(docker)

$ docker compose exec jenkins docker info

# => Client: Docker Engine - Community

# Version: 27.4.1

# ...

#

# Server:

# Containers: 29

# Running: 2

# Paused: 0

# Stopped: 27

# Images: 42

# Server Version: 20.10.12

# ...

# Live Restore Enabled: false

$ docker compose exec jenkins docker ps

# => CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

# 110494b9ca48 gogs/gogs "/app/gogs/docker/st…" 14 hours ago Up 41 minutes (healthy) 0.0.0.0:3000->3000/tcp, 0.0.0.0:10022->22/tcp gogs

# 8b7c591c828d jenkins/jenkins "/usr/bin/tini -- /u…" 14 hours ago Up 57 seconds 0.0.0.0:8080->8080/tcp, 0.0.0.0:50000->50000/tcp jenkins

- After an OS reboot, docker commands in the jenkins container may fail. In that case, reassign the docker group to the socket as shown below.

# Check the permissions of the socket file

$ docker compose exec --privileged -u root jenkins ls -l /var/run/docker.sock

# => srwxr-xr-x 1 root root 0 Oct 01 05:22 /var/run/docker.sock

# 👉 The group has been reset to root, so it must be changed back to docker.

# Reassign the docker group to the socket file

$ docker compose exec --privileged -u root jenkins chgrp docker /var/run/docker.sock

# Restore group write permission on the socket file

$ docker compose exec --privileged -u root jenkins chmod g+w /var/run/docker.sock

# Verify

$ docker compose exec --privileged -u root jenkins ls -l /var/run/docker.sock

# => srwxrwxr-x 1 root docker 0 Oct 01 05:22 /var/run/docker.sock

$ docker compose exec jenkins docker info
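The permission strings in the `ls -l` output above can also be checked programmatically. The following is a minimal sketch and not part of the lab itself: `group_can_use_socket` is a hypothetical helper that takes a file mode (as returned by `os.stat(...).st_mode`) and reports whether the group has the read and write bits that a non-root member of the docker group needs.

```python
import os
import stat


def group_can_use_socket(mode: int) -> bool:
    """Return True if mode describes a socket that its group can read and write."""
    group_rw = stat.S_IRGRP | stat.S_IWGRP
    return stat.S_ISSOCK(mode) and (mode & group_rw) == group_rw


if __name__ == "__main__":
    # The two modes seen in the ls -l output above.
    after_reboot = stat.S_IFSOCK | 0o755  # srwxr-xr-x
    after_fix = stat.S_IFSOCK | 0o775     # srwxrwxr-x
    for mode in (after_reboot, after_fix):
        print(stat.filemode(mode), group_can_use_socket(mode))

    # Inside the Jenkins container, the real socket could be checked like this.
    path = "/var/run/docker.sock"
    if os.path.exists(path):
        print(path, group_can_use_socket(os.stat(path).st_mode))
```

The helper only looks at the mode bits. Whether the jenkins user is actually a member of the owning group still has to be confirmed with `id`, as shown earlier.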

Gogs initial setup

- Open the web UI to run the initial setup.

# Open the initial setup page

$ open "http://127.0.0.1:3000/install" # macOS

# On Windows, open http://127.0.0.1:3000/install in a web browser

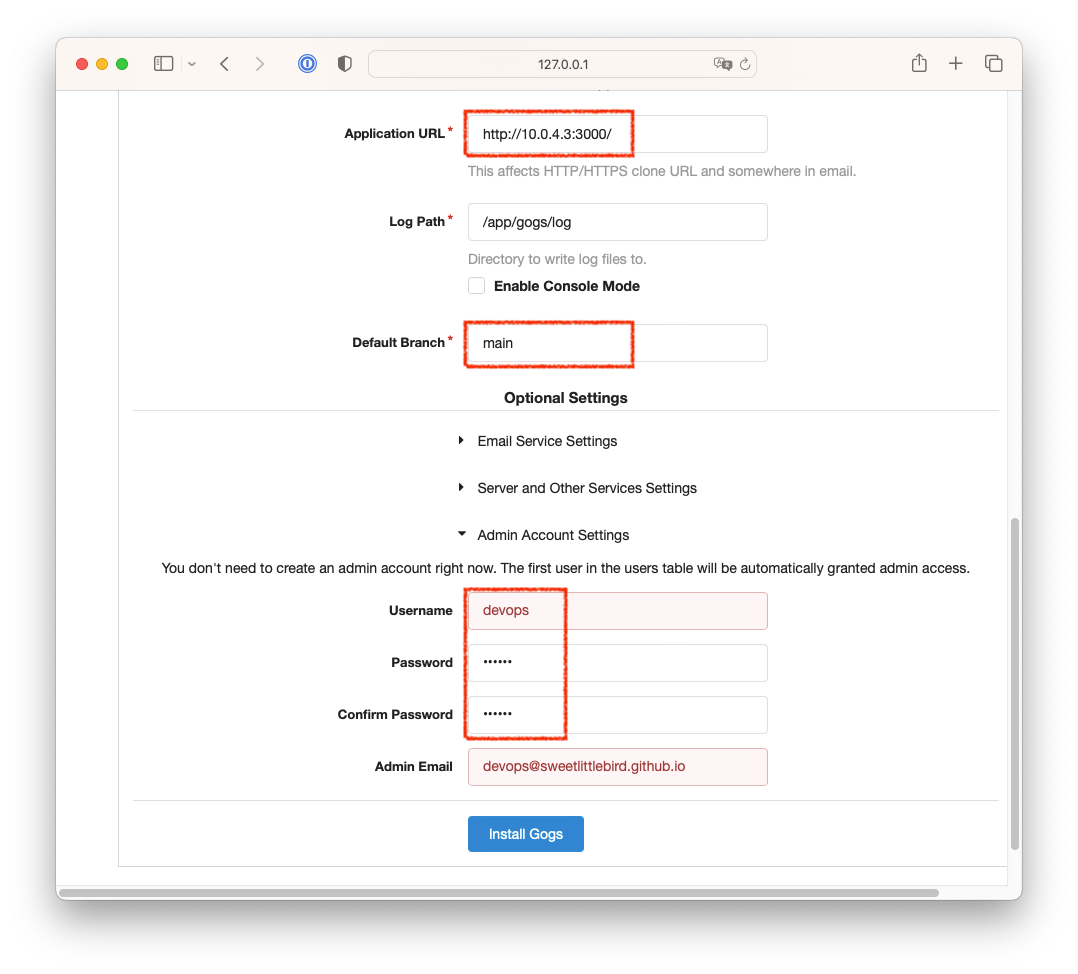

- Change the settings as follows.

- Database Type : SQLite3

- Application URL : http://<IP found above>:3000/

- Default Branch : main

- Click Admin Account Settings : username : devops, password : qwe123, and enter an email address

- Click the Install Gogs button => log in with the admin account

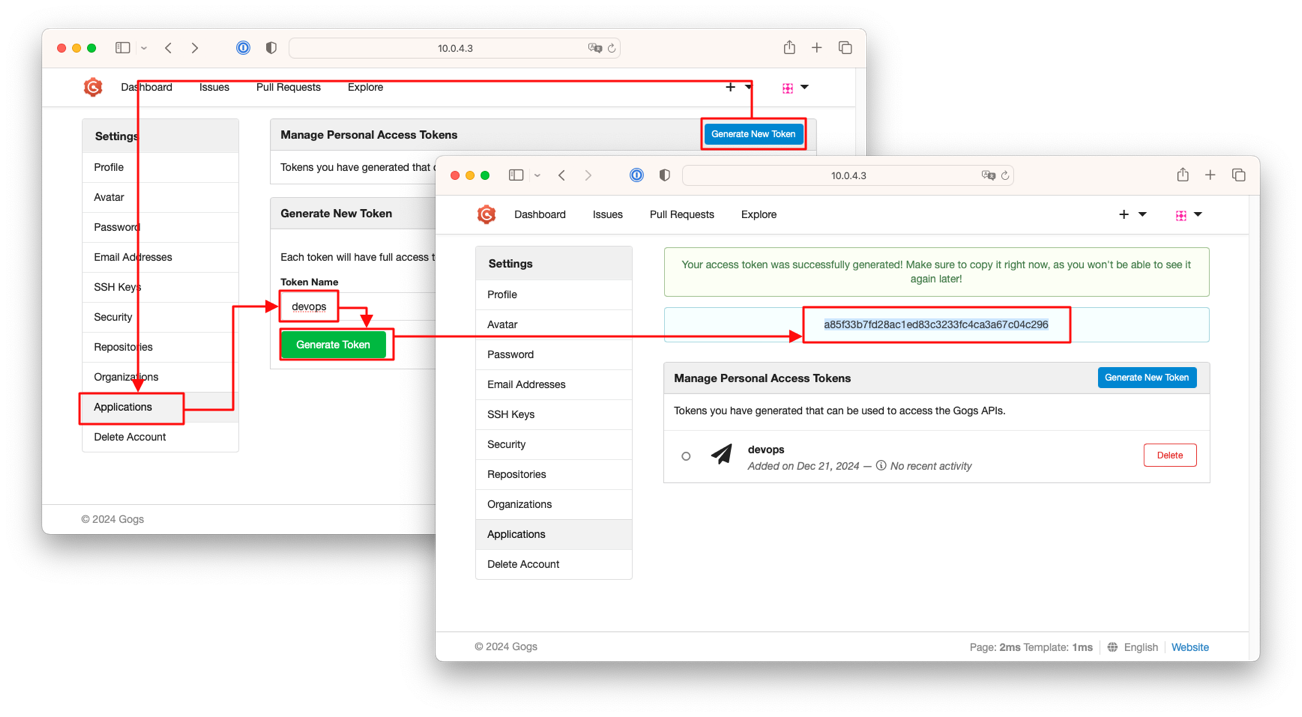

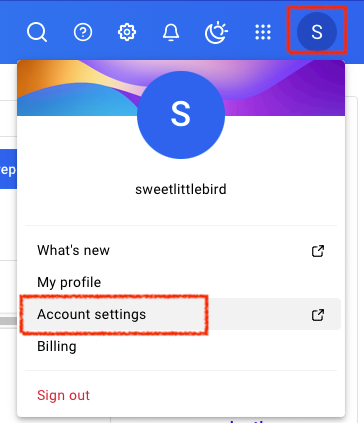

Issuing an access token

- After logging in, go to Your Settings > Applications > Generate New Token, enter a Token Name (devops), and click Generate Token.

- Copy the issued token (a85f33b7fd28ac1ed83c3233fc4ca3a67c04c296) and store it somewhere safe.

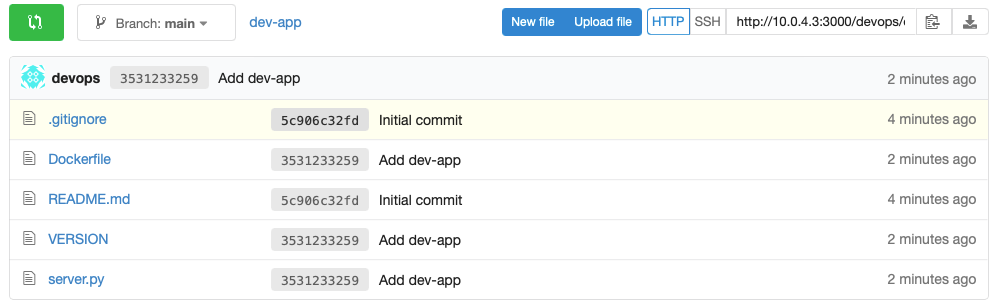

Creating repositories

- Click the + button at the top right, choose New Repository, and create the following two repositories.

- For the development team

  - Repository Name : dev-app

  - Visibility : (Check) This repository is Private

  - .gitignore : Python

  - Readme : Default → (Check) initialize this repository with selected files and template

  ⇒ Click Create Repository => check the repo address (http://10.0.4.3:3000/devops/dev-app.git)

- For the DevOps team

  - Repository Name : ops-deploy

  - Visibility : (Check) This repository is Private

  - Readme : Default → (Check) initialize this repository with selected files and template

  ⇒ Click Create Repository => check the repo address (http://10.0.4.3:3000/devops/ops-deploy.git)

Configuring git on the host PC for the Gogs lab

# (Optional) Reset cached git credentials

$ git credential-cache exit

#

# $ git clone <your Gogs dev-app repo URL>

$ git clone http://10.0.4.3:3000/devops/dev-app.git

# => Cloning into 'dev-app'...

# Username for 'http://10.0.4.3:3000': devops

# Password for 'http://devops@10.0.4.3:3000': # enter the access token issued earlier

# remote: Enumerating objects: 4, done.

# remote: Counting objects: 100% (4/4), done.

# remote: Compressing objects: 100% (3/3), done.

# remote: Total 4 (delta 0), reused 0 (delta 0), pack-reused 0

# Unpacking objects: 100% (4/4), 705 bytes | 352.00 KiB/s, done.

#

$ cd dev-app

#

$ git config user.name "devops"

$ git config user.email "a@a.com"

$ git config init.defaultBranch main

$ git config credential.helper store

#

$ git branch

# => * main

$ git remote -v

# => origin http://10.0.4.3:3000/devops/dev-app.git (fetch)

# origin http://10.0.4.3:3000/devops/dev-app.git (push)

# Create server.py
$ cat > server.py <<EOF
from http.server import ThreadingHTTPServer, BaseHTTPRequestHandler
from datetime import datetime
import socket

class RequestHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        self.send_response(200)
        self.send_header('Content-type', 'text/plain')
        self.end_headers()
        now = datetime.now()
        hostname = socket.gethostname()
        response_string = now.strftime("The time is %-I:%M:%S %p, VERSION 0.0.1\n")
        response_string += f"Server hostname: {hostname}\n"
        self.wfile.write(bytes(response_string, "utf-8"))

def startServer():
    try:
        server = ThreadingHTTPServer(('', 80), RequestHandler)
        print("Listening on " + ":".join(map(str, server.server_address)))
        server.serve_forever()
    except KeyboardInterrupt:
        server.shutdown()

if __name__ == "__main__":
    startServer()
EOF

# Create the Dockerfile
$ cat > Dockerfile <<EOF
FROM python:3.12
ENV PYTHONUNBUFFERED=1
COPY . /app
WORKDIR /app
CMD python3 server.py
EOF

# Create the VERSION file

$ echo "0.0.1" > VERSION

#

$ git status

# => On branch main

# Your branch is up to date with 'origin/main'.

#

# Untracked files:

# (use "git add <file>..." to include in what will be committed)

# Dockerfile

# VERSION

# server.py

#

# nothing added to commit but untracked files present (use "git add" to track)

$ git add .

$ git commit -m "Add dev-app"

# => [main 3531233] Add dev-app

# 3 files changed, 32 insertions(+)

# create mode 100644 Dockerfile

# create mode 100644 VERSION

# create mode 100644 server.py

$ git push -u origin main

# => Enumerating objects: 6, done.

# Counting objects: 100% (6/6), done.

# Delta compression using up to 8 threads

# Compressing objects: 100% (4/4), done.

# Writing objects: 100% (5/5), 900 bytes | 900.00 KiB/s, done.

# Total 5 (delta 0), reused 0 (delta 0), pack-reused 0

# To http://10.0.4.3:3000/devops/dev-app.git

# 5c906c3..3531233 main -> main

# branch 'main' set up to track 'origin/main'.

- Check the pushed files in the Gogs repository.
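Before building the image, the response format of server.py can be checked locally. The following is a rough sketch under a few assumptions: `build_response` and `smoke_test` are hypothetical helpers, the server binds to a free port on 127.0.0.1 instead of port 80, and the portable `%I` format with the leading zero stripped stands in for the `%-I` used in server.py.

```python
import http.client
import socket
import threading
from datetime import datetime
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

VERSION = "0.0.1"  # mirrors the VERSION file committed above


def build_response(now: datetime, hostname: str, version: str = VERSION) -> str:
    """Return the same two-line body that server.py writes."""
    # %I is zero-padded; lstrip("0") removes the padding to match %-I.
    clock = now.strftime("%I:%M:%S %p").lstrip("0")
    return f"The time is {clock}, VERSION {version}\nServer hostname: {hostname}\n"


class RequestHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        body = build_response(datetime.now(), socket.gethostname()).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-type", "text/plain")
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the smoke test output quiet


def smoke_test() -> str:
    """Start the server on a free local port, request "/" once, return the body."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), RequestHandler)
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        conn = http.client.HTTPConnection("127.0.0.1", server.server_address[1], timeout=5)
        conn.request("GET", "/")
        body = conn.getresponse().read().decode("utf-8")
        conn.close()
        return body
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print(smoke_test(), end="")
```

Binding to port 0 lets the operating system pick an unused port, so the check does not need root privileges the way port 80 does.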

도커 허브 설정

- 도커 허브에 로그인하여 dev-app이라는 Repository를 생성합니다. 도커허브 소개와 Repository 생성은 1주차 내용을 참고하세요.

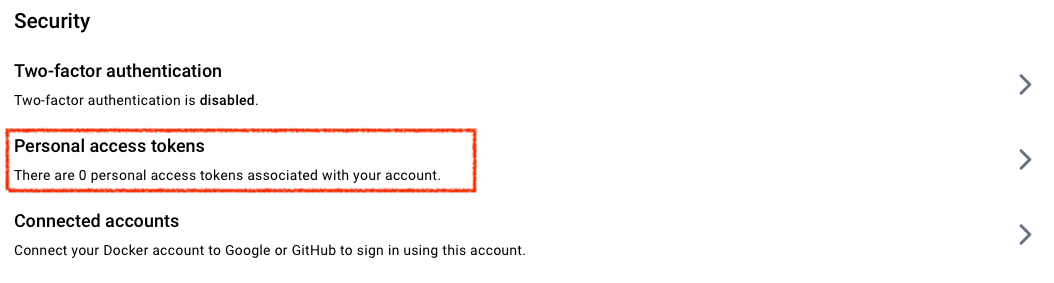

- 배포를 편하게 하기위해 Token도 발급하여 사용해보겠습니다.

- 계정 > Account Settings > Security 클릭

- New Access Token 클릭

- Token Name : devops

- Expireation date : 만료일을 적절히 선택합니다.

- 권한은 Read, Write, Delete를 선택합니다.

- Create 클릭하여 토큰을 생성하고, 발급된 토큰을 복사하여 안전한 곳에 저장합니다.

- 발급된 토큰 :

dckr_****

- 계정 > Account Settings > Security 클릭

Jenkins CI + K8S (Kind)

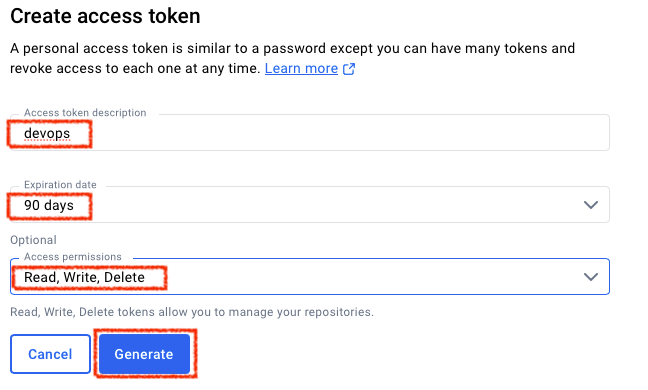

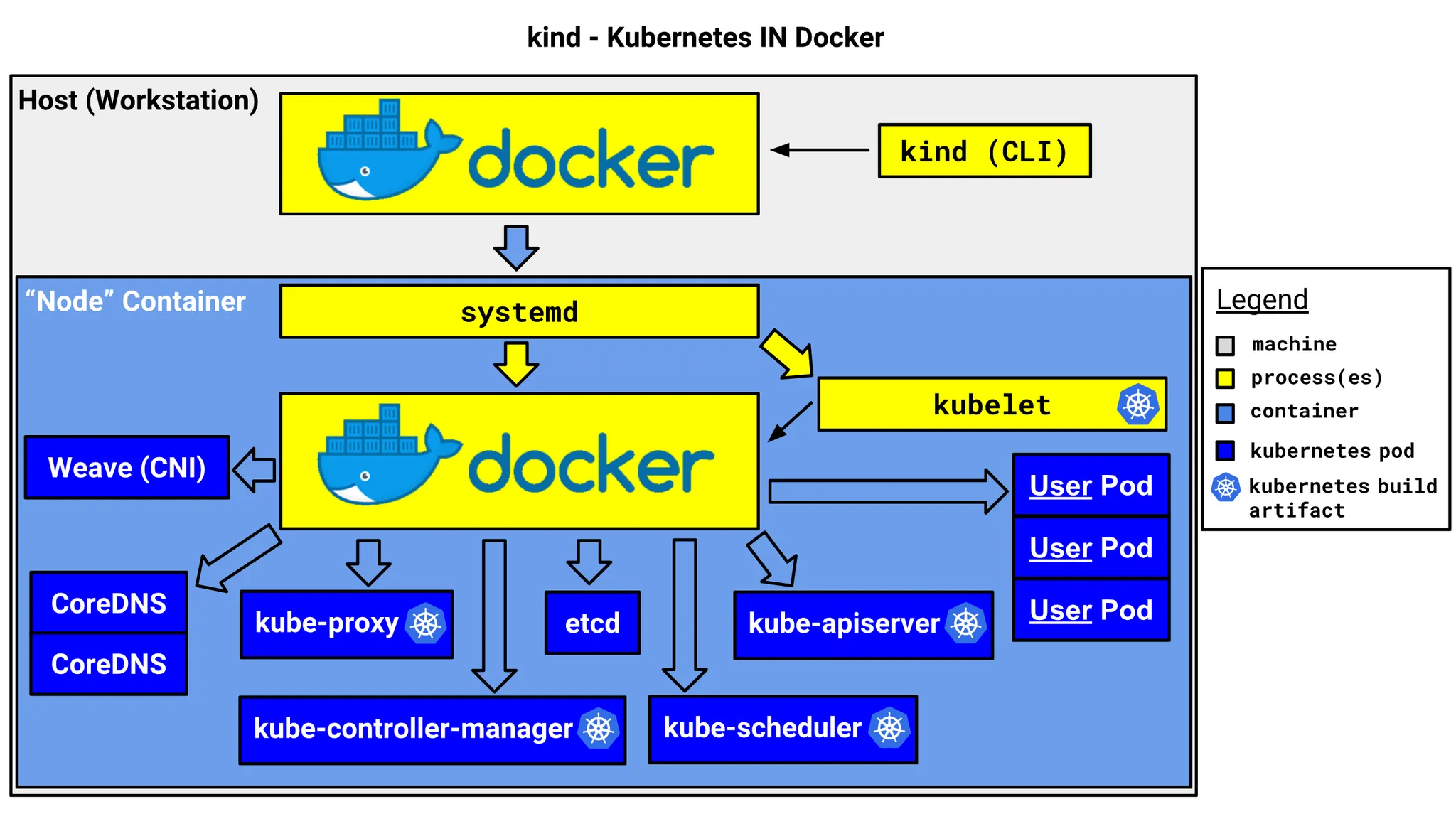

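Introducing and installing Kind

- Kind stands for Kubernetes in Docker. It is a tool that makes it easy to create a Kubernetes cluster in a local environment.

- As the name suggests, it builds the cluster on Docker, with each node running as a container, so it works in many environments where Docker is available.

- Kind supports multi-node clusters, including HA, but it is recommended only for testing and experimentation.

- Kind uses kubeadm to bootstrap the cluster.

- References : Kind introduction and installation, Kind official documentation

Kind architecture diagram

Installing Kind and tools

- Install the required tools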

# Install Kind

$ brew install kind

$ kind --version

# => kind version 0.26.0

# Install kubectl

$ brew install kubernetes-cli

$ kubectl version --client=true

# => Client Version: v1.31.0

# Kustomize Version: v5.4.2

# Kubecolor Version: v0.4.0

## Set up the k shortcut for kubectl

$ echo "alias k=kubectl" >> ~/.zshrc

# Install Helm

$ brew install helm

$ helm version

# => version.BuildInfo{Version:"v3.15.4", GitCommit:"fa9efb07d9d8debbb4306d72af76a383895aa8c4", GitTreeState:"clean", GoVersion:"go1.22.6"}

- (Recommended) Install useful tools

# Install the tools

$ brew install krew

$ brew install kube-ps1

$ brew install kubectx

# Highlight kubectl output

$ brew install kubecolor

$ echo "alias kubectl=kubecolor" >> ~/.zshrc

$ echo "compdef kubecolor=kubectl" >> ~/.zshrc

# Install krew plugins

$ kubectl krew install neat stern

Kind basics - creating and inspecting a cluster

# Check running containers before creating the cluster

$ docker ps

# => CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

# 110494b9ca48 gogs/gogs "/app/gogs/docker/st…" 19 hours ago Up 5 hours (healthy) 0.0.0.0:3000->3000/tcp, 0.0.0.0:10022->22/tcp gogs

# 8b7c591c828d jenkins/jenkins "/usr/bin/tini -- /u…" 19 hours ago Up 4 hours 0.0.0.0:8080->8080/tcp, 0.0.0.0:50000->50000/tcp jenkins

# Create a cluster with kind

$ kind create cluster

# => Creating cluster "kind" ...

# ✓ Ensuring node image (kindest/node:v1.32.0) 🖼

# ✓ Preparing nodes 📦

# ✓ Writing configuration 📜

# ✓ Starting control-plane 🕹️

# ✓ Installing CNI 🔌

# ✓ Installing StorageClass 💾

# Set kubectl context to "kind-kind"

# You can now use your cluster with:

#

# kubectl cluster-info --context kind-kind

#

# Not sure what to do next? 😅 Check out https://kind.sigs.k8s.io/docs/user/quick-start/

# Verify the cluster

$ kind get clusters

# => kind

$ kind get nodes

# => kind-control-plane

$ kubectl cluster-info

# => Kubernetes control plane is running at https://127.0.0.1:64234

# CoreDNS is running at https://127.0.0.1:64234/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

#

# To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

# Check node information

$ kubectl get node -o wide

# => NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

# kind-control-plane Ready control-plane 63s v1.32.0 172.20.0.2 <none> Debian GNU/Linux 12 (bookworm) 5.10.76-linuxkit containerd://1.7.24

# Check pod information

$ kubectl get pod -A

# => NAMESPACE NAME READY STATUS RESTARTS AGE

# kube-system coredns-668d6bf9bc-8pqmk 1/1 Running 0 67s

# kube-system coredns-668d6bf9bc-9ngw2 1/1 Running 0 67s

# kube-system etcd-kind-control-plane 1/1 Running 0 74s

# kube-system kindnet-zlwz2 1/1 Running 0 67s

# kube-system kube-apiserver-kind-control-plane 1/1 Running 0 74s

# kube-system kube-controller-manager-kind-control-plane 1/1 Running 0 74s

# kube-system kube-proxy-nbp2t 1/1 Running 0 67s

# kube-system kube-scheduler-kind-control-plane 1/1 Running 0 74s

# local-path-storage local-path-provisioner-58cc7856b6-wl6z8 1/1 Running 0 67s

$ kubectl get componentstatuses

# => NAME STATUS MESSAGE ERROR

# controller-manager Healthy ok

# scheduler Healthy ok

# etcd-0 Healthy ok

# A single control-plane node is running as a container

$ docker ps

# => CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

# 3d4063180754 kindest/node:v1.32.0 "/usr/local/bin/entr…" About a minute ago Up About a minute 127.0.0.1:64234->6443/tcp kind-control-plane

# 110494b9ca48 gogs/gogs "/app/gogs/docker/st…" 19 hours ago Up 5 hours (healthy) 0.0.0.0:3000->3000/tcp, 0.0.0.0:10022->22/tcp gogs

# 8b7c591c828d jenkins/jenkins "/usr/bin/tini -- /u…" 19 hours ago Up 4 hours 0.0.0.0:8080->8080/tcp, 0.0.0.0:50000->50000/tcp jenkins

$ docker images

# => REPOSITORY TAG IMAGE ID CREATED SIZE

# kindest/node <none> b5a8f8764a3e 7 days ago 1.05GB

# Check the kubeconfig file

$ cat ~/.kube/config

# or

# $ cat $KUBECONFIG # when the KUBECONFIG variable is set

# Deploy an nginx pod and check it : will the pod be scheduled even though this is a control-plane node?

$ kubectl run nginx --image=nginx:alpine

# => pod/nginx created

$ kubectl get pod -owide

# => NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

# nginx 1/1 Running 0 10s 10.244.0.5 kind-control-plane <none> <none>

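# Check the taints on the node

$ kubectl describe node | grep Taints

# => Taints: <none>

# 👉 The control-plane node has no taints here, which is why the nginx pod was scheduled on it.

# Delete the cluster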

$ kind delete cluster

# => Deleting cluster "kind" ...

# Deleted nodes: ["kind-control-plane"]

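# Confirm that the cluster entry was removed from the kubeconfig

$ cat ~/.kube/config

# or

# $ cat $KUBECONFIG # when the KUBECONFIG variable is set

Creating a Kubernetes cluster with Kind - 3 nodes

# Check running containers before creating the cluster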

$ docker ps

# => CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

# 110494b9ca48 gogs/gogs "/app/gogs/docker/st…" 19 hours ago Up 5 hours (healthy) 0.0.0.0:3000->3000/tcp, 0.0.0.0:10022->22/tcp gogs

# 8b7c591c828d jenkins/jenkins "/usr/bin/tini -- /u…" 19 hours ago Up 4 hours 0.0.0.0:8080->8080/tcp, 0.0.0.0:50000->50000/tcp jenkins

# Option 1 : set the KUBECONFIG environment variable

$ export KUBECONFIG=$PWD/kubeconfig

# Create a cluster with kind

# $ MyIP=<your own PC's IP>

$ MyIP=10.0.4.3

$ cd ..

$ cat > kind-3node.yaml <<EOF
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
networking:
  apiServerAddress: "$MyIP"
nodes:
- role: control-plane
  extraPortMappings:
  - containerPort: 30000
    hostPort: 30000
  - containerPort: 30001
    hostPort: 30001
  - containerPort: 30002
    hostPort: 30002
  - containerPort: 30003
    hostPort: 30003
- role: worker
- role: worker
EOF

$ kind create cluster --config kind-3node.yaml --name myk8s --image kindest/node:v1.30.6

# => Creating cluster "myk8s" ...

# ✓ Ensuring node image (kindest/node:v1.30.6) 🖼

# ✓ Preparing nodes 📦 📦 📦

# ✓ Writing configuration 📜

# ✓ Starting control-plane 🕹️

# ✓ Installing CNI 🔌

# ✓ Installing StorageClass 💾

# ✓ Joining worker nodes 🚜

# Set kubectl context to "kind-myk8s"

# You can now use your cluster with:

#

# kubectl cluster-info --context kind-myk8s

#

# Thanks for using kind! 😊

# Verify

$ kind get nodes --name myk8s

# => myk8s-control-plane

# myk8s-worker

# myk8s-worker2

$ kubens default

# => Context "kind-myk8s" modified.

# Active namespace is "default".

# kind creates and uses its own Docker network : the subnet is commonly 172.18.0.0/16, but in this environment it is 172.20.0.0/16

$ docker network ls

# => NETWORK ID NAME DRIVER SCOPE

# 8204a0851463 host host local

# 3bbcc6aa8f38 kind bridge local

$ docker inspect kind | jq

# => [

# {

# "Name": "kind",

# "Id": "3bbcc6aa8f388f86f02478f41de1e4dd917e5812b6cf6257972e4af0bedf5021",

# "Created": "2024-10-01T11:37:09.195259833Z",

# "Scope": "local",

# "Driver": "bridge",

# "EnableIPv6": true,

# "IPAM": {

# "Driver": "default",

# "Options": {},

# "Config": [

# {

# "Subnet": "172.20.0.0/16",

# "Gateway": "172.20.0.1"

# }

# ]

# },

# "Internal": false,

# "Attachable": false,

# "Ingress": false,

# "ConfigFrom": {

# "Network": ""

# },

# "ConfigOnly": false,

# "Containers": {

# "35739bf3542771236d47fd4dcb27da13814184a3de57c7203904f66ecbab4710": {

# "Name": "myk8s-worker",

# "EndpointID": "5de8e9e48e611f2c4cb908649f5dcdf63c82d624b85f85a6573ced7cbd454554",

# "MacAddress": "02:42:ac:14:00:03",

# "IPv4Address": "172.20.0.3/16",

# },

# "48023a25d056141b00747f14ff52da2b46c46c0d0edbeb714dedd1f3c71360e4": {

# "Name": "myk8s-control-plane",

# "EndpointID": "9d51136474882d6ca7a4aabe7291e26527f44c3b88c7191b654506fdf1d65c84",

# "MacAddress": "02:42:ac:14:00:04",

# "IPv4Address": "172.20.0.4/16",

# },

# "fefdfb00a2228f646119483a24503e1dc8bd74292e462fc9fa2ef3446004b4af": {

# "Name": "myk8s-worker2",

# "EndpointID": "c669ce7940abb8722b7ac41cc533c260838d31881c915a49147829e9d28a746c",

# "MacAddress": "02:42:ac:14:00:02",

# "IPv4Address": "172.20.0.2/16",

# }

# },

# "Options": {

# "com.docker.network.bridge.enable_ip_masquerade": "true",

# "com.docker.network.driver.mtu": "1500"

# },

# "Labels": {}

# }

# ]

# Check the k8s API address : how can we reach it from the local machine?

$ kubectl cluster-info

# => Kubernetes control plane is running at https://10.0.4.3:51235

# CoreDNS is running at https://10.0.4.3:51235/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

#

# To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

# 👉 Docker forwards connections to 10.0.4.3:51235 to port 6443 of the kind control-plane container,

# and the kubeconfig file lists 10.0.4.3:51235 as the API server address, so the connection works.

# Check node information : the CRI is containerd

$ kubectl get node -o wide

# => NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

# myk8s-control-plane Ready control-plane 3m8s v1.30.6 172.20.0.4 <none> Debian GNU/Linux 12 (bookworm) 5.10.76-linuxkit containerd://1.7.18

# myk8s-worker Ready <none> 2m48s v1.30.6 172.20.0.3 <none> Debian GNU/Linux 12 (bookworm) 5.10.76-linuxkit containerd://1.7.18

# myk8s-worker2 Ready <none> 2m48s v1.30.6 172.20.0.2 <none> Debian GNU/Linux 12 (bookworm) 5.10.76-linuxkit containerd://1.7.18

# Check pod information : the CNI is kindnet

$ kubectl get pod -A -o wide

# => NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

# kube-system coredns-55cb58b774-m7h2c 1/1 Running 0 3m7s 10.244.0.2 myk8s-control-plane <none> <none>

# kube-system coredns-55cb58b774-z88v5 1/1 Running 0 3m7s 10.244.0.3 myk8s-control-plane <none> <none>

# kube-system etcd-myk8s-control-plane 1/1 Running 0 3m22s 172.20.0.4 myk8s-control-plane <none> <none>

# kube-system kindnet-mp6mj 1/1 Running 0 3m7s 172.20.0.4 myk8s-control-plane <none> <none>

# kube-system kindnet-q2k9w 1/1 Running 0 3m4s 172.20.0.2 myk8s-worker2 <none> <none>

# kube-system kindnet-t99c4 1/1 Running 0 3m4s 172.20.0.3 myk8s-worker <none> <none>

# kube-system kube-apiserver-myk8s-control-plane 1/1 Running 0 3m21s 172.20.0.4 myk8s-control-plane <none> <none>

# kube-system kube-controller-manager-myk8s-control-plane 1/1 Running 0 3m21s 172.20.0.4 myk8s-control-plane <none> <none>

# kube-system kube-proxy-f85sx 1/1 Running 0 3m7s 172.20.0.4 myk8s-control-plane <none> <none>

# kube-system kube-proxy-ltckc 1/1 Running 0 3m4s 172.20.0.2 myk8s-worker2 <none> <none>

# kube-system kube-proxy-njr42 1/1 Running 0 3m4s 172.20.0.3 myk8s-worker <none> <none>

# kube-system kube-scheduler-myk8s-control-plane 1/1 Running 0 3m21s 172.20.0.4 myk8s-control-plane <none> <none>

# local-path-storage local-path-provisioner-7d4d9bdcc5-jhl5h 1/1 Running 0 3m7s 10.244.0.4 myk8s-control-plane <none> <none>

# Check the Kubernetes namespaces >> these are different from the namespaces covered with Docker containers!

$ kubectl get namespaces

# => NAME STATUS AGE

# default Active 3m42s

# kube-node-lease Active 3m42s

# kube-public Active 3m42s

# kube-system Active 3m42s

# local-path-storage Active 3m35s

# Check the control-plane/worker nodes (containers) : the Docker container names are myk8s-control-plane, myk8s-worker, and myk8s-worker2

$ docker ps

# => CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

# 35739bf35427 kindest/node:v1.30.6 "/usr/local/bin/entr…" 4 minutes ago Up 4 minutes myk8s-worker

# 48023a25d056 kindest/node:v1.30.6 "/usr/local/bin/entr…" 4 minutes ago Up 4 minutes 0.0.0.0:30000-30003->30000-30003/tcp, 10.0.4.3:51235->6443/tcp myk8s-control-plane

# fefdfb00a222 kindest/node:v1.30.6 "/usr/local/bin/entr…" 4 minutes ago Up 4 minutes myk8s-worker2

$ docker images

# Verbose output (-v6) shows which kubeconfig file is loaded for authentication and each API request

$ kubectl get pod -v6

# => I1221 20:19:47.265879 56969 loader.go:395] Config loaded from file: /Users/anonym/Documents/GitHub/cicd-lite/w3/1/dev-app/kubeconfig

# I1221 20:19:47.354543 56969 round_trippers.go:553] GET https://10.0.4.3:51235/api/v1/namespaces/default/pods?limit=500 200 OK in 79 milliseconds

# No resources found in default namespace.

# Check the kubeconfig file

$ cat $KUBECONFIG

$ ls -l $KUBECONFIG

# => .rw------- anonym staff 5.5 KB Sat Oct 01 20:16:21 2024 /Users/user/Documents/GitHub/cicd-lite/w3/1/dev-app/kubeconfig
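The port-forwarding explanation above can be traced from the two strings that appear in the lab output: the API server URL from `kubectl cluster-info` and the PORTS column from `docker ps`. The sketch below is illustrative only. `parse_port_mappings` and `container_port_for` are hypothetical helpers, and the parser assumes the `ip:port->port/proto` format (including port ranges) exactly as it is printed above.

```python
import re
from urllib.parse import urlparse

# One entry of the docker ps PORTS column, e.g. "10.0.4.3:51235->6443/tcp"
# or a range such as "0.0.0.0:30000-30003->30000-30003/tcp".
_ENTRY = re.compile(
    r"^(?P<ip>[\d.]+):(?P<host>\d+)(?:-(?P<host_end>\d+))?"
    r"->(?P<ctr>\d+)(?:-(?P<ctr_end>\d+))?/(?P<proto>\w+)$"
)


def parse_port_mappings(ports: str) -> dict:
    """Map (host_ip, host_port) to the container port it is forwarded to."""
    mappings = {}
    for entry in ports.split(","):
        match = _ENTRY.match(entry.strip())
        if match is None:
            continue  # ignore entries in a format this sketch does not handle
        host_start = int(match["host"])
        host_end = int(match["host_end"] or match["host"])
        ctr_start = int(match["ctr"])
        for offset in range(host_end - host_start + 1):
            mappings[(match["ip"], host_start + offset)] = ctr_start + offset
    return mappings


def container_port_for(server_url: str, ports: str):
    """Return the container port behind the API server URL, or None."""
    url = urlparse(server_url)
    return parse_port_mappings(ports).get((url.hostname, url.port))


if __name__ == "__main__":
    # Values copied from the myk8s-control-plane row and cluster-info output.
    ports = "0.0.0.0:30000-30003->30000-30003/tcp, 10.0.4.3:51235->6443/tcp"
    print(container_port_for("https://10.0.4.3:51235", ports))
```

The same lookup shows why NodePort services on 30000 through 30003 are reachable from the host: those ports are published by the `extraPortMappings` entries in kind-3node.yaml.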

Installing kube-ops-view

- kube-ops-view is a dashboard that visualizes the state of a Kubernetes cluster.

# kube-ops-view

# helm show values geek-cookbook/kube-ops-view

$ helm repo add geek-cookbook https://geek-cookbook.github.io/charts/

$ helm install kube-ops-view geek-cookbook/kube-ops-view --version 1.2.2 --set service.main.type=NodePort,service.main.ports.http.nodePort=30001 --set env.TZ="Asia/Seoul" --namespace kube-system

# Verify the installation

$ kubectl get deploy,pod,svc,ep -n kube-system -l app.kubernetes.io/instance=kube-ops-view

# => NAME READY UP-TO-DATE AVAILABLE AGE

# deployment.apps/kube-ops-view 1/1 1 1 25s

#

# NAME READY STATUS RESTARTS AGE

# pod/kube-ops-view-796947d6dc-6b6xx 1/1 Running 0 25s

#

# NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

# service/kube-ops-view NodePort 10.96.18.59 <none> 8080:30001/TCP 25s

#

# NAME ENDPOINTS AGE

# endpoints/kube-ops-view 10.244.1.2:8080 25s

# Open kube-ops-view (1.5x and 2x scale)

$ open "http://127.0.0.1:30001/#scale=1.5"

$ open "http://127.0.0.1:30001/#scale=2"

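Deleting the cluster (for reference : the following sections keep using the myk8s cluster, so run this after finishing the lab)

# Delete the cluster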

$ kind delete cluster --name myk8s

# => Deleting cluster "myk8s" ...

# Deleted nodes: ["myk8s-worker" "myk8s-control-plane" "myk8s-worker2"]

$ docker ps

# => CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

# 110494b9ca48 gogs/gogs "/app/gogs/docker/st…" 19 hours ago Up 6 hours (healthy) 0.0.0.0:3000->3000/tcp, 0.0.0.0:10022->22/tcp gogs

# 8b7c591c828d jenkins/jenkins "/usr/bin/tini -- /u…" 19 hours ago Up 5 hours 0.0.0.0:8080->8080/tcp, 0.0.0.0:50000->50000/tcp jenkins

$ cat $KUBECONFIG

$ unset KUBECONFIG

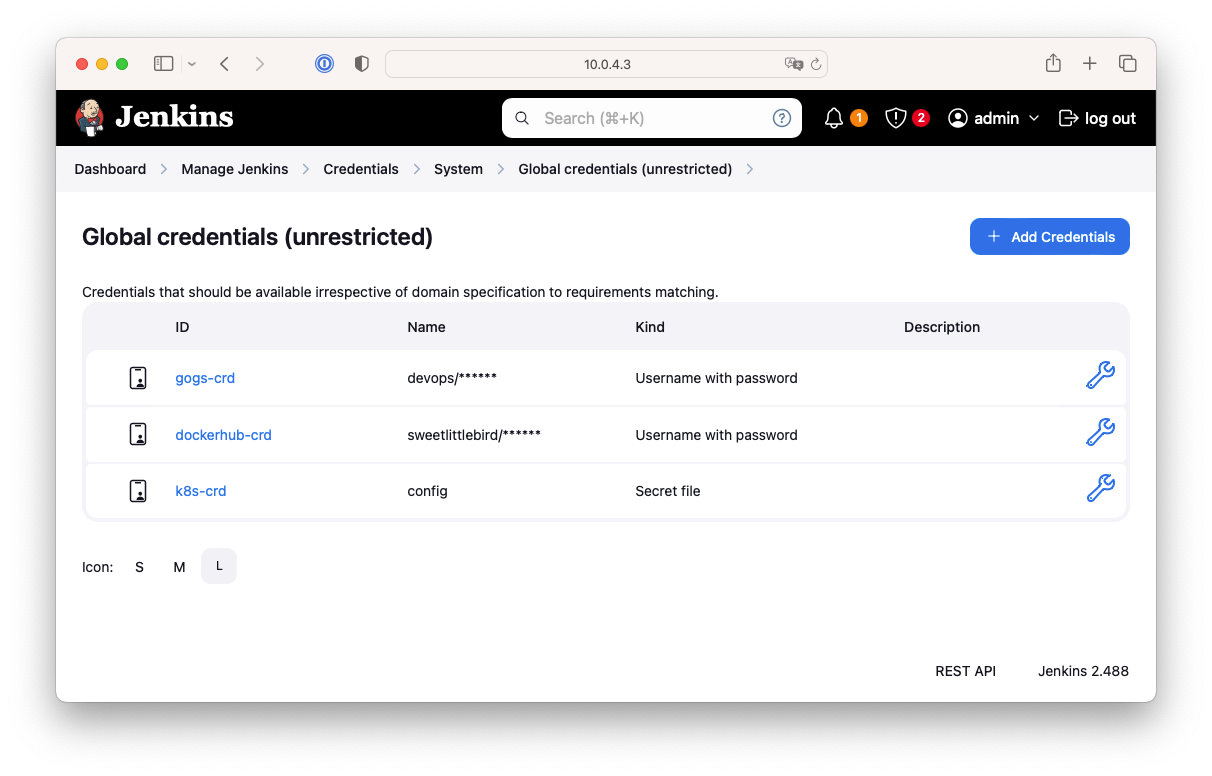

Jenkins configuration : plugin installation and credentials

- Install Jenkins plugins : Dashboard > Manage Jenkins > Plugins > Available plugins tab (the docker.withRegistry and docker.build steps used in the pipeline below are provided by the Docker Pipeline plugin)

- Add credentials : Dashboard > Manage Jenkins > Credentials > Global > Add Credentials

  - Gogs repository credentials : gogs-crd

    - Kind : Username with password

    - Username : devops

    - Password : <Gogs token>

    - ID : gogs-crd

  - Docker Hub credentials : dockerhub-crd

    - Kind : Username with password

    - Username : <Docker account name>

    - Password : <Docker account password or token>

    - ID : dockerhub-crd

  - k8s (kind) credentials : k8s-crd

    - Kind : Secret file

    - File : <upload the kubeconfig file>

    - ID : k8s-crd

Result of the Jenkins credentials setup

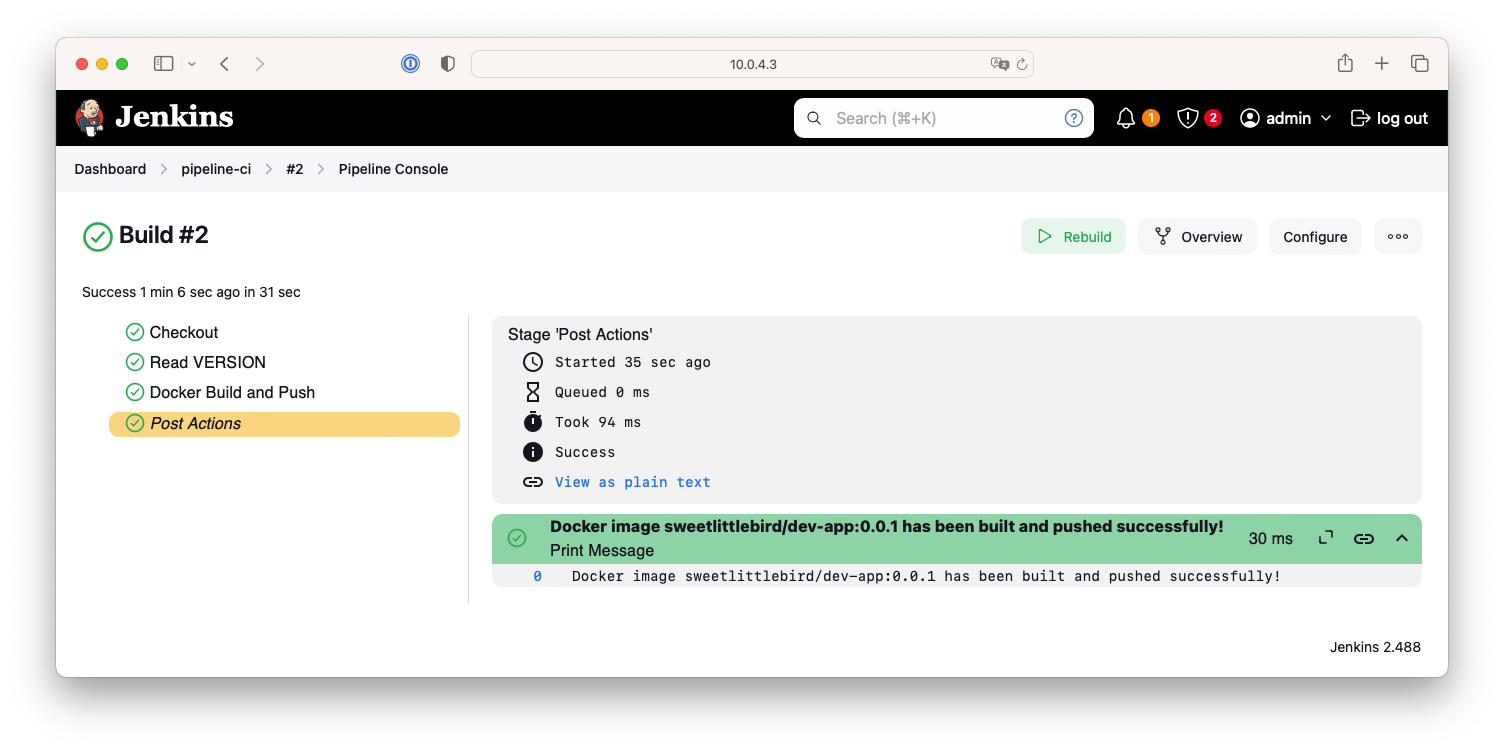

Creating a Jenkins item (Pipeline)

- We write a simple Pipeline script to confirm that the Gogs and Docker Hub credentials work.

- Create a Pipeline item named pipeline-ci with the script below.

pipeline {
    agent any
    environment {
        DOCKER_IMAGE = '<your Docker Hub account>/dev-app' // Docker image name
    }
    stages {
        stage('Checkout') {
            steps {
                git branch: 'main',
                    url: 'http://<your PC IP>:3000/devops/dev-app.git', // check out the code from Git
                    credentialsId: 'gogs-crd' // Credentials ID
            }
        }
        stage('Read VERSION') {
            steps {
                script {
                    // Read the VERSION file
                    def version = readFile('VERSION').trim()
                    echo "Version found: ${version}"
                    // Export it as an environment variable
                    env.DOCKER_TAG = version
                }
            }
        }
        stage('Docker Build and Push') {
            steps {
                script {
                    docker.withRegistry('https://index.docker.io/v1/', 'dockerhub-crd') {
                        // Use DOCKER_TAG as the image tag
                        def appImage = docker.build("${DOCKER_IMAGE}:${DOCKER_TAG}")
                        appImage.push()
                        appImage.push("latest")
                    }
                }
            }
        }
    }
    post {
        success {
            echo "Docker image ${DOCKER_IMAGE}:${DOCKER_TAG} has been built and pushed successfully!"
        }
        failure {
            echo "Pipeline failed. Please check the logs."
        }
    }
}

- 지금 빌드 => 콘솔 Output 확인

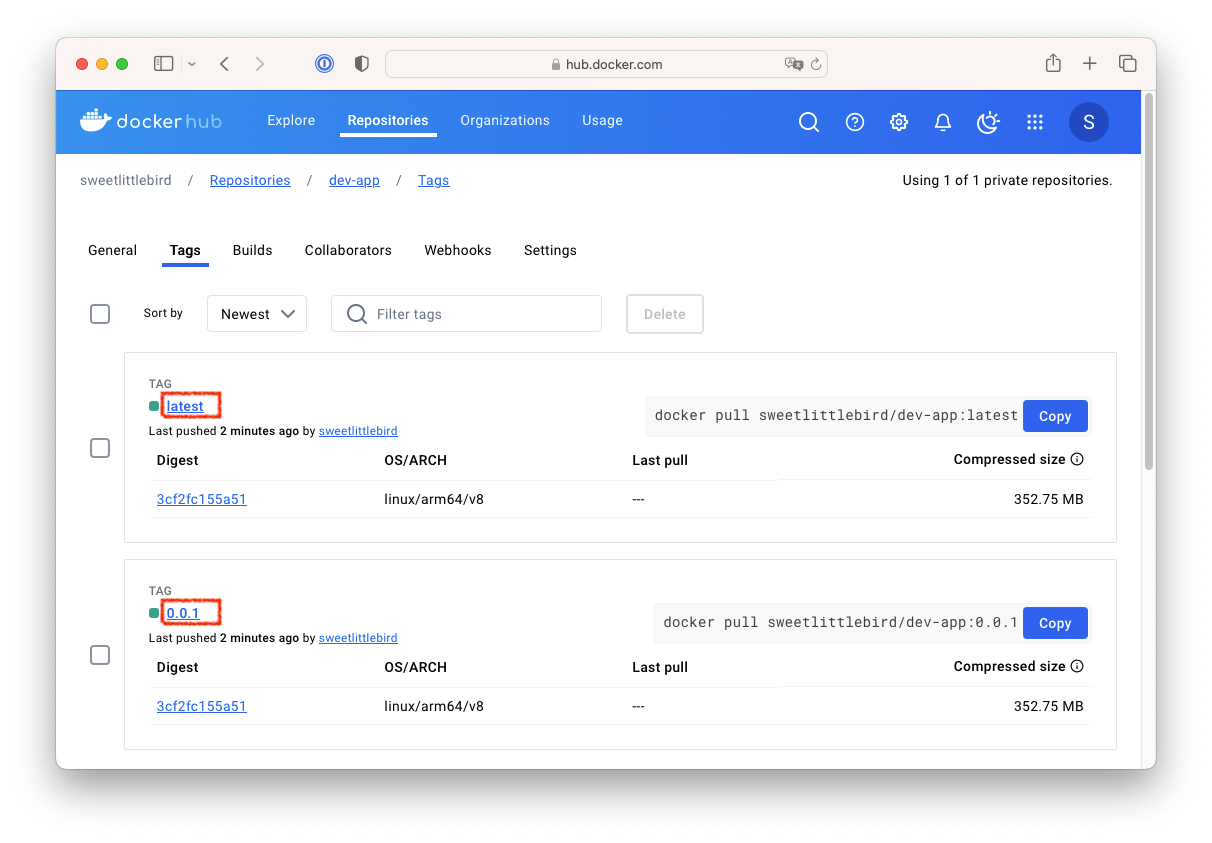

- 도커 허브 확인

- 자격증명들이 잘 연동되어, 파이프라인에서 지정한것 처럼 버전명의 태그(0.0.1)과 latest 태그가 잘 생성되었습니다!
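The tagging logic of the 'Read VERSION' and 'Docker Build and Push' stages can be reproduced outside Jenkins to see which tags a given VERSION file would produce. This is a small sketch, not the pipeline's actual implementation: `image_tags` is a hypothetical helper, and `example/dev-app` is a placeholder image name.

```python
import tempfile
from pathlib import Path


def image_tags(image: str, version_file: str) -> list:
    """Return the two tags the pipeline pushes: <image>:<version> and <image>:latest."""
    # Same idea as readFile('VERSION').trim() in the pipeline script.
    version = Path(version_file).read_text(encoding="utf-8").strip()
    if not version:
        raise ValueError(f"{version_file} is empty")
    return [f"{image}:{version}", f"{image}:latest"]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        version_path = Path(workdir) / "VERSION"
        version_path.write_text("0.0.1\n", encoding="utf-8")  # like: echo "0.0.1" > VERSION
        for tag in image_tags("example/dev-app", str(version_path)):
            print(tag)
```

Stripping whitespace matters because `echo "0.0.1" > VERSION` writes a trailing newline, which is not valid inside an image tag.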

Deploying an application to the Kubernetes cluster

- We will deploy an application to the Kubernetes cluster through a Jenkins Pipeline.

- First, let's look at the Deployment resource used when deploying to a Kubernetes cluster.

Introducing Deployment

Kubernetes deployment structure

- The smallest deployable unit in Kubernetes is a Pod, which consists of one or more containers.

- Pods are managed by a ReplicaSet, which keeps the desired number of Pods running.

- A Deployment manages ReplicaSets and handles the rollout and update of Pods.

- Kubernetes defines resources in YAML files called manifests. It is declarative: you declare the desired state, and the cluster adjusts itself to reach that state.

- The structure of a Kubernetes manifest is shown below.

apiVersion: ... # 👉 Kubernetes API version used to create the resource
kind: ... # 👉 type of resource to create
metadata: ... # 👉 data that uniquely identifies the resource, plus metadata unrelated to its state
spec: ... # 👉 desired state of the resource
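To make the four top-level fields concrete, the sketch below fills them in for a Deployment and prints the result as JSON, which `kubectl apply -f` accepts as well as YAML. It is an illustration under assumptions: `deployment_manifest` and `selector_matches_template` are hypothetical helpers, and the name, labels, and image are example values.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int, labels: dict) -> dict:
    """Build a minimal apps/v1 Deployment manifest as a plain dict."""
    return {
        "apiVersion": "apps/v1",            # API version of the resource
        "kind": "Deployment",               # type of resource
        "metadata": {"name": name},         # identifying data
        "spec": {                           # desired state
            "replicas": replicas,
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [{"name": f"{name}-container", "image": image}]
                },
            },
        },
    }


def selector_matches_template(manifest: dict) -> bool:
    """Check that every selector label is present on the pod template."""
    selector = manifest["spec"]["selector"]["matchLabels"]
    pod_labels = manifest["spec"]["template"]["metadata"]["labels"]
    return all(pod_labels.get(key) == value for key, value in selector.items())


if __name__ == "__main__":
    manifest = deployment_manifest(
        name="timeserver",
        image="docker.io/example/dev-app:0.0.1",  # placeholder image
        replicas=2,
        labels={"pod": "timeserver-pod"},
    )
    assert selector_matches_template(manifest)
    print(json.dumps(manifest, indent=2))
```

The selector check reflects a rule of the Deployment API: the API server rejects a Deployment whose `spec.selector` does not match the labels in `spec.template`.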

Deployment hands-on

- We will deploy the Docker image built by the Jenkins Pipeline above to the Kubernetes cluster.

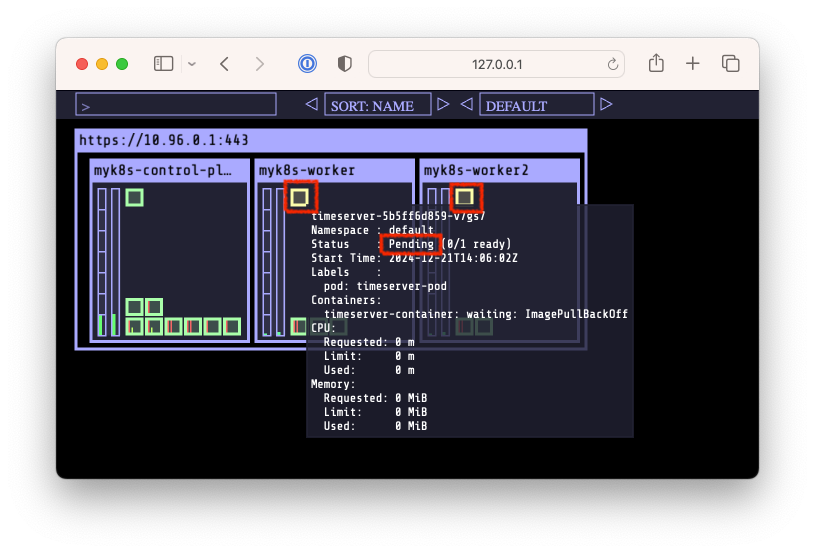

# Deploy a Deployment object : 2 replicas (pods) of the container image >> change only the Docker account below before applying
#$ DHUSER=<Docker Hub account name>
$ DHUSER=sweetlittlebird
$ cat <<EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: timeserver
spec:
  replicas: 2
  selector:
    matchLabels:
      pod: timeserver-pod
  template:
    metadata:
      labels:
        pod: timeserver-pod
    spec:
      containers:
      - name: timeserver-container
        image: docker.io/$DHUSER/dev-app:0.0.1
EOF
# => deployment.apps/timeserver created
$ watch -d kubectl get deploy,pod -o wide
# Check the rollout status : also visible in the kube-ops-view web UI
$ kubectl get deploy,pod -o wide
# => NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR
# deployment.apps/timeserver 0/2 2 0 45s timeserver-container docker.io/sweetlittlebird/dev-app:0.0.1 pod=timeserver-pod
#
# NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
# pod/timeserver-5b5ff6d859-s282p 0/1 ImagePullBackOff 0 45s 10.244.2.2 myk8s-worker2 <none> <none>
# pod/timeserver-5b5ff6d859-v7gs7 0/1 ImagePullBackOff 0 45s 10.244.1.2 myk8s-worker <none> <none>
# 👉 The pods are stuck in ImagePullBackOff, so the deployment did not succeed.
$ kubectl describe pod
# => Name: timeserver-5b5ff6d859-s282p
# ...
# Events:
# Type Reason Age From Message
# ---- ------ ---- ---- -------
# Normal Scheduled 5m38s default-scheduler Successfully assigned default/timeserver-5b5ff6d859-s282p to myk8s-worker2
# Normal Pulling 4m4s (x4 over 5m37s) kubelet Pulling image "docker.io/sweetlittlebird/dev-app:0.0.1"
# Warning Failed 4m3s (x4 over 5m35s) kubelet Failed to pull image "docker.io/sweetlittlebird/dev-app:0.0.1": failed to pull and unpack image "docker.io/sweetlittlebird/dev-app:0.0.1": failed to resolve reference "docker.io/sweetlittlebird/dev-app:0.0.1": pull access denied, repository does not exist or may require authorization: server message: insufficient_scope: authorization failed
# Warning Failed 4m3s (x4 over 5m35s) kubelet Error: ErrImagePull
# Warning Failed 3m48s (x6 over 5m35s) kubelet Error: ImagePullBackOff
# Normal BackOff 22s (x20 over 5m35s) kubelet Back-off pulling image "docker.io/sweetlittlebird/dev-app:0.0.1"

Failed timeserver deployment on the Kubernetes cluster, as seen in kube-ops-view

- As shown above, the deployment failed with an image pull error.

- kubectl describe pod reports "pull access denied, repository does not exist or may require authorization: server message: insufficient_scope: authorization failed". This means the cluster tried to pull the image from Docker Hub without any credentials, which fails for a repository that requires authorization.

- Let's register the Docker Hub access token in the cluster and try again.

Registering the Docker Hub access token in K8S and redeploying

# k8s secret : set up the Docker credentials

$ kubectl get secret -A # list the existing secrets

# => NAMESPACE NAME TYPE DATA AGE

# kube-system bootstrap-token-abcdef bootstrap.kubernetes.io/token 6 164m

# kube-system sh.helm.release.v1.kube-ops-view.v1 helm.sh/release.v1 1 25m

#$ DHUSER=<Docker Hub account>

#$ DHPASS=<Docker Hub password or token>

$ DHUSER=sweetlittlebird

$ DHPASS=dckr_****

$ echo $DHUSER $DHPASS

# => sweetlittlebird dckr_****

# Create the Docker Hub secret

$ kubectl create secret docker-registry dockerhub-secret \

--docker-server=https://index.docker.io/v1/ \

--docker-username=$DHUSER \

--docker-password=$DHPASS

# Verify

$ kubectl get secret

# => NAME TYPE DATA AGE

# dockerhub-secret kubernetes.io/dockerconfigjson 1 8s

$ kubectl describe secret

# => Name: dockerhub-secret

# Namespace: default

# Labels: <none>

# Annotations: <none>

#

# Type: kubernetes.io/dockerconfigjson

#

# Data

# ====

# .dockerconfigjson: 205 bytes

$ kubectl get secrets -o yaml | kubectl neat # check the base64-encoded value

# => apiVersion: v1

# items:

# - apiVersion: v1

# data:

# .dockerconfigjson: eyJhdXRocyI6... (base64 value truncated because it contains the token)

# kind: Secret

# metadata:

# name: dockerhub-secret

# namespace: default

# type: kubernetes.io/dockerconfigjson

# kind: List

# metadata: {}

$ SECRET=eyJhdXRocyI6eyJodHRwczovL2luZGV4LmRvY2tlci5pby92MS8iOnsidXNlcm5hbWUiOiJzZWNyZXRsaXR0bGViaXJkIiwicGFzc3dvcmQiOiJkY2tyX3BhdF9QdWNpVTRIMFBPZVpYWGNvV1VNd1ozTVpZVEUiLCJhdXRoIjoiYzJWamNtVjBiR2wwZEd4bFltbHlaRHBrWTJ0eVgzQmhkRjlRZFdOcFZUUklNRkJQWlZwWVdHTnZWMVZOZDFvelRWcFpWRVU9In19fQ==

$ echo "$SECRET" | base64 -d ; echo

# => {"auths":{"https://index.docker.io/v1/":{"username":"sweetlittlebird","password":"dckr_****","auth":"c2VjcmV0bGl0dGxlYmlyZDpkY2tyX3BhdF9QdWNpVTRIMFBPZVpYWGNvV1VNd1ozTVpZVEU="}}}
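The decoded output above suggests how the secret is laid out: a JSON document keyed by registry URL, where `auth` is the base64 encoding of `username:password`, and the whole document is base64-encoded once more when stored in the Secret. The following is a minimal Python sketch of that layout, not what `kubectl` literally runs; the function names and the placeholder credentials are assumptions made for illustration.

```python
import base64
import json


def build_dockerconfigjson(server: str, username: str, password: str) -> str:
    """Return the JSON document stored under the `.dockerconfigjson` key."""
    # The `auth` field is base64("username:password").
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    config = {
        "auths": {
            server: {"username": username, "password": password, "auth": auth}
        }
    }
    return json.dumps(config, separators=(",", ":"))


def encode_secret_data(document: str) -> str:
    """Base64-encode the document, as it appears in `kubectl get secret -o yaml`."""
    return base64.b64encode(document.encode()).decode()


# Placeholder credentials (hypothetical), never real ones.
doc = build_dockerconfigjson(
    "https://index.docker.io/v1/", "example-user", "example-token"
)
encoded = encode_secret_data(doc)
print(encoded)
# Decoding the stored value gives back the JSON document.
print(base64.b64decode(encoded).decode())
```

Because base64 is an encoding and not encryption, anyone who can read the Secret can recover the token, which is why the values in this post are masked.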

# Now that the Docker Hub access token is registered, let's deploy again.

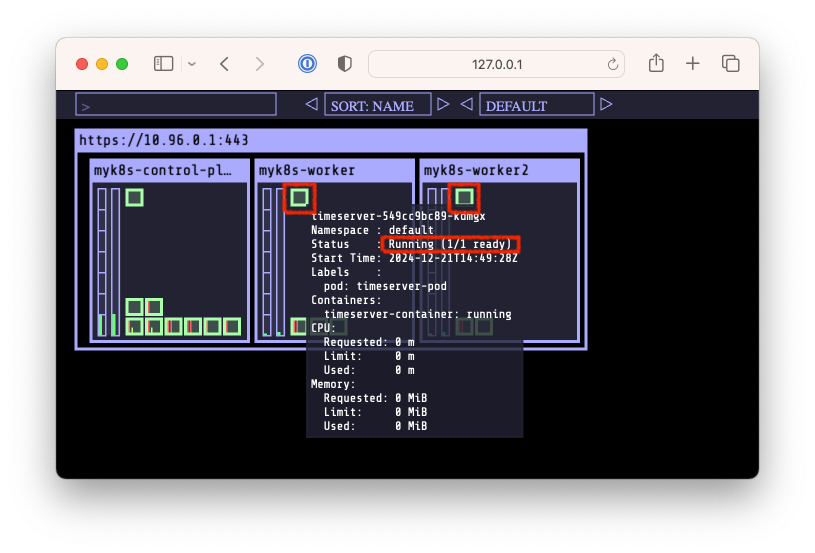

$ cat <<EOF | kubectl apply -f -

apiVersion: apps/v1
kind: Deployment
metadata:
  name: timeserver
spec:
  replicas: 2
  selector:
    matchLabels:
      pod: timeserver-pod
  template:
    metadata:
      labels:
        pod: timeserver-pod
    spec:
      containers:
      - name: timeserver-container
        image: docker.io/$DHUSER/dev-app:0.0.1
      imagePullSecrets:
      - name: dockerhub-secret

EOF

$ watch -d kubectl get deploy,pod -o wide

# Verify

$ kubectl get deploy,pod

# => NAME READY UP-TO-DATE AVAILABLE AGE

# deployment.apps/timeserver 2/2 2 2 39s

#

# NAME READY STATUS RESTARTS AGE

# pod/timeserver-549cc9bc89-k6n6g 1/1 Running 0 39s

# pod/timeserver-549cc9bc89-kdmgx 1/1 Running 0 39s

# <span style="color: green;">👉 The deployment succeeded!</span>

# Create a curl pod for connectivity tests

$ kubectl run curl-pod --image=curlimages/curl:latest --command -- sh -c "while true; do sleep 3600; done"

# => pod/curl-pod created

$ kubectl get pod -owide

# => NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

# curl-pod 1/1 Running 0 22s 10.244.2.6 myk8s-worker2 <none> <none>

# timeserver-549cc9bc89-k6n6g 1/1 Running 0 103s 10.244.2.5 myk8s-worker2 <none> <none>

# timeserver-549cc9bc89-kdmgx 1/1 Running 0 103s 10.244.1.6 myk8s-worker <none> <none>

# Pick the IP of one timeserver pod and test the connection

#$ PODIP1=<timeserver-Y pod IP>

$ PODIP1=10.244.2.5

$ kubectl exec -it curl-pod -- curl $PODIP1

# => The time is 2:51:54 PM, VERSION 0.0.1

# Server hostname: timeserver-549cc9bc89-k6n6g

$ kubectl exec -it curl-pod -- curl $PODIP1

# => The time is 2:52:03 PM, VERSION 0.0.1

# Server hostname: timeserver-549cc9bc89-k6n6g

# Check the logs

$ kubectl logs deploy/timeserver

$ kubectl logs deploy/timeserver -f

$ kubectl stern deploy/timeserver

$ kubectl stern -l pod=timeserver-pod

- kube-ops-view also confirms that the deployment succeeded.

- Let's delete one pod and observe what happens.

#

#$ POD1NAME=<name of one pod>

$ POD1NAME=timeserver-549cc9bc89-k6n6g

$ kubectl get pod -owide

# => NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

# curl-pod 1/1 Running 0 22s 10.244.2.6 myk8s-worker2 <none> <none>

# timeserver-549cc9bc89-k6n6g 1/1 Running 0 103s 10.244.2.5 myk8s-worker2 <none> <none>

# timeserver-549cc9bc89-kdmgx 1/1 Running 0 103s 10.244.1.6 myk8s-worker <none> <none>

$ kubectl delete pod $POD1NAME && kubectl get pod -w

# => pod "timeserver-549cc9bc89-k6n6g" deleted # <span style="color: green;">👉 One timeserver pod was definitely deleted,</span>

# NAME READY STATUS RESTARTS AGE

# curl-pod 1/1 Running 0 115s

# timeserver-549cc9bc89-kdmgx 1/1 Running 0 7m20s

# timeserver-549cc9bc89-pvm5k 1/1 Running 0 31s # <span style="color: green;">👉 yet a new pod has been created to replace it.</span>

# Self-healing, but the pod IP changes -> a stable entry point (fixed IP/domain name) is needed => Service

$ kubectl get deploy,rs,pod -owide

# => NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

# deployment.apps/timeserver 2/2 2 2 10m timeserver-container docker.io/sweetlittlebird/dev-app:0.0.1 pod=timeserver-pod

#

# NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

# replicaset.apps/timeserver-549cc9bc89 2 2 2 10m timeserver-container docker.io/sweetlittlebird/dev-app:0.0.1 pod=timeserver-pod,pod-template-hash=549cc9bc89

#

# NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

# pod/curl-pod 1/1 Running 0 4m56s 10.244.2.7 myk8s-worker2 <none> <none>

# pod/timeserver-549cc9bc89-kdmgx 1/1 Running 0 10m 10.244.1.6 myk8s-worker <none> <none>

# pod/timeserver-549cc9bc89-pvm5k 1/1 Running 0 3m32s 10.244.2.8 myk8s-worker2 <none> <none>

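- As shown above, when a pod is deleted, the ReplicaSet creates a new pod to replace it.

- The replacement pod comes up with a different IP. If clients target pod IPs directly, the address changes every time a pod is recreated, which makes it hard to provide a stable service.

- A Service solves this problem.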

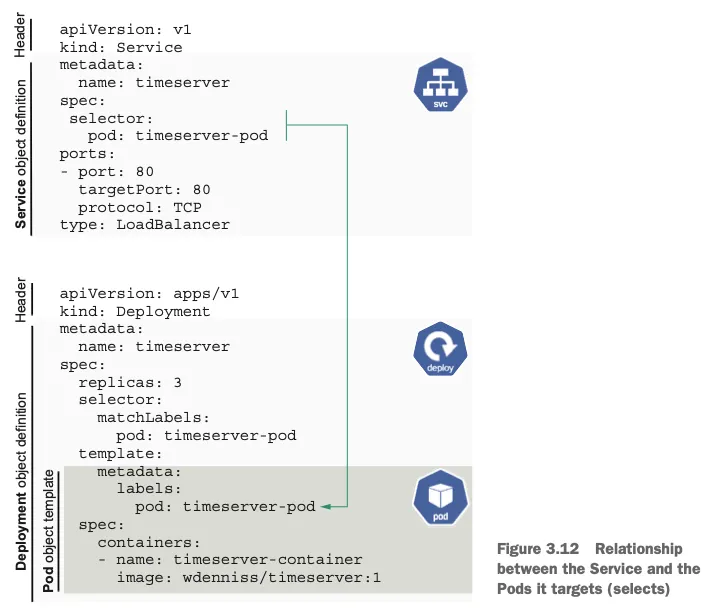

Introduction to Service

- A Service provides a stable entry point to a set of Pods. As seen above, a Pod gets a new IP whenever it is recreated; a Service gives clients a fixed IP through which to reach those Pods.

- When a Deployment creates multiple Pods, the Service groups them into one set and load-balances across them.

- A Service targets Pods, not Deployments. It selects its Pods through a label selector.

Relationship between the Service, Deployment, and Pod manifests


- The main Service types are as follows.

- ClusterIP : reachable only from inside the cluster.

- NodePort : reachable from inside the cluster and also from outside, through the specified port on a node's IP.

- LoadBalancer : reachable from outside through the cloud provider's load balancer.
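To make the label-selector idea concrete, here is a small Python sketch of how a selector picks Pods: a Pod matches when its labels contain every key/value pair in the selector. This only illustrates the matching rule and is not Kubernetes code; the pod names and the `run: curl-pod` label are made up for the example, while the IPs are borrowed from the outputs in this post.

```python
from typing import Dict, List


def select_endpoints(selector: Dict[str, str], pods: List[dict]) -> List[str]:
    """Return the IPs of pods whose labels contain every selector key/value pair."""
    return [
        pod["ip"]
        for pod in pods
        if all(pod["labels"].get(key) == value for key, value in selector.items())
    ]


# Hypothetical pod list for illustration.
pods = [
    {"name": "timeserver-a", "ip": "10.244.1.6", "labels": {"pod": "timeserver-pod"}},
    {"name": "timeserver-b", "ip": "10.244.2.8", "labels": {"pod": "timeserver-pod"}},
    {"name": "curl-pod", "ip": "10.244.2.7", "labels": {"run": "curl-pod"}},
]

# The Service never refers to the Deployment; only the labels matter.
print(select_endpoints({"pod": "timeserver-pod"}, pods))
# → ['10.244.1.6', '10.244.2.8']
```

Any Pod carrying the matching label would be picked up, regardless of which Deployment (if any) created it.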

Service deployment hands-on

- Let's deploy a simple Service.

# Create the Service

$ cat <<EOF | kubectl apply -f -

apiVersion: v1
kind: Service
metadata:
  name: timeserver
spec:
  selector:
    pod: timeserver-pod
  ports:
  - port: 80
    targetPort: 80
    protocol: TCP
    nodePort: 30000
  type: NodePort

EOF

# => service/timeserver created

#

$ kubectl get service,ep timeserver -owide

# => NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

# service/timeserver NodePort 10.96.204.127 <none> 80:30000/TCP 13s pod=timeserver-pod

#

# NAME ENDPOINTS AGE

# endpoints/timeserver 10.244.1.6:80,10.244.2.8:80 13s

# Connect through the Service (ClusterIP): by domain name

$ kubectl exec -it curl-pod -- curl timeserver

# => The time is 3:29:30 PM, VERSION 0.0.1

# Server hostname: timeserver-549cc9bc89-pvm5k

$ kubectl exec -it curl-pod -- curl timeserver.default.svc.cluster.local

# => The time is 3:29:33 PM, VERSION 0.0.1

# Server hostname: timeserver-549cc9bc89-pvm5k

# Connect through the Service (ClusterIP): by cluster IP

$ kubectl get svc timeserver -o jsonpath={.spec.clusterIP}

# => 10.96.204.127

$ kubectl exec -it curl-pod -- curl $(kubectl get svc timeserver -o jsonpath={.spec.clusterIP})

# => The time is 3:29:40 PM, VERSION 0.0.1

# Server hostname: timeserver-549cc9bc89-pvm5k

# Connect through the Service (NodePort): "NodeIP:NodePort"

$ curl http://127.0.0.1:30000

# => The time is 3:32:43 PM, VERSION 0.0.1

# Server hostname: timeserver-549cc9bc89-<span style="color: red;">kdmgx</span>

$ curl http://127.0.0.1:30000

# => The time is 3:32:59 PM, VERSION 0.0.1

# Server hostname: timeserver-549cc9bc89-<span style="color: red;">pvm5k</span>

# <span style="color: green;">👉 You can see the Service load-balancing between the two Pods.</span>

# Keep sending requests in a loop: check load balancing

$ while true; do curl -s --connect-timeout 1 http://127.0.0.1:30000 | grep name ; sleep 1 ; done

# => Server hostname: timeserver-549cc9bc89-kdmgx

# Server hostname: timeserver-549cc9bc89-pvm5k

# Server hostname: timeserver-549cc9bc89-pvm5k

# Server hostname: timeserver-549cc9bc89-kdmgx

# Server hostname: timeserver-549cc9bc89-pvm5k

# ...

$ for i in {1..100}; do curl -s http://127.0.0.1:30000 | grep name; done | sort | uniq -c | sort -nr

# => 54 Server hostname: timeserver-549cc9bc89-pvm5k

# 46 Server hostname: timeserver-549cc9bc89-kdmgx

# Increase the pod replica count: new pods are added to the Service endpoints automatically

$ kubectl scale deployment timeserver --replicas 4

# => deployment.apps/timeserver scaled

$ kubectl get service,ep timeserver -owide

# => NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

# service/timeserver NodePort 10.96.204.127 <none> 80:30000/TCP 37m pod=timeserver-pod

#

# NAME ENDPOINTS AGE

# endpoints/timeserver 10.244.1.6:80,10.244.1.7:80,10.244.2.8:80 + 1 more... 37m

# Keep sending requests in a loop: check load balancing

$ while true; do curl -s --connect-timeout 1 http://127.0.0.1:30000 | grep name ; sleep 1 ; done

# => Server hostname: timeserver-549cc9bc89-pvm5k

# Server hostname: timeserver-549cc9bc89-h9q6p

# Server hostname: timeserver-549cc9bc89-pvm5k

# Server hostname: timeserver-549cc9bc89-h9q6p

# Server hostname: timeserver-549cc9bc89-xlbck

# Server hostname: timeserver-549cc9bc89-kdmgx

# Server hostname: timeserver-549cc9bc89-xlbck

# ...

$ for i in {1..100}; do curl -s http://127.0.0.1:30000 | grep name; done | sort | uniq -c | sort -nr

# => 29 Server hostname: timeserver-549cc9bc89-xlbck

# 27 Server hostname: timeserver-549cc9bc89-pvm5k

# 22 Server hostname: timeserver-549cc9bc89-kdmgx

# 22 Server hostname: timeserver-549cc9bc89-h9q6p

# <span style="color: green;">👉 Traffic is automatically load-balanced across however many pods exist.</span>
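The counts above are roughly even but not exact, which is what you would expect if each request picks a backend at random. The sketch below simulates that in Python and tallies the results the same way `sort | uniq -c | sort -nr` does. It is a toy model under the assumption of uniform random selection, not a description of the actual kube-proxy implementation; `simulate_requests` is a hypothetical helper.

```python
import random
from collections import Counter
from typing import List


def simulate_requests(backends: List[str], n: int, seed: int = 0) -> Counter:
    """Send n simulated requests, each to a uniformly random backend, and tally them."""
    rng = random.Random(seed)  # seeded so the run is repeatable
    return Counter(rng.choice(backends) for _ in range(n))


backends = [
    "timeserver-549cc9bc89-xlbck",
    "timeserver-549cc9bc89-pvm5k",
    "timeserver-549cc9bc89-kdmgx",
    "timeserver-549cc9bc89-h9q6p",
]

# most_common() sorts by count, like `sort | uniq -c | sort -nr`.
for hostname, count in simulate_requests(backends, 100).most_common():
    print(f"{count:3d} Server hostname: {hostname}")
```

With only 100 requests, differences of several requests between pods are normal statistical noise rather than a sign of uneven balancing.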

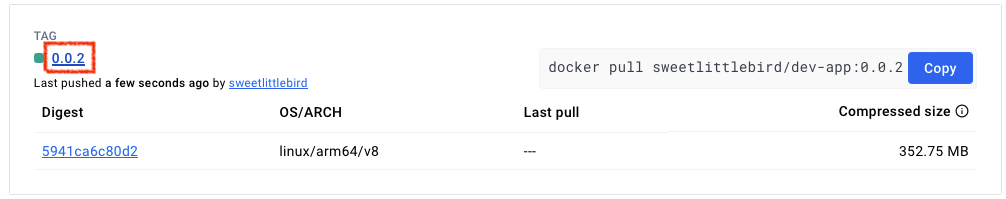

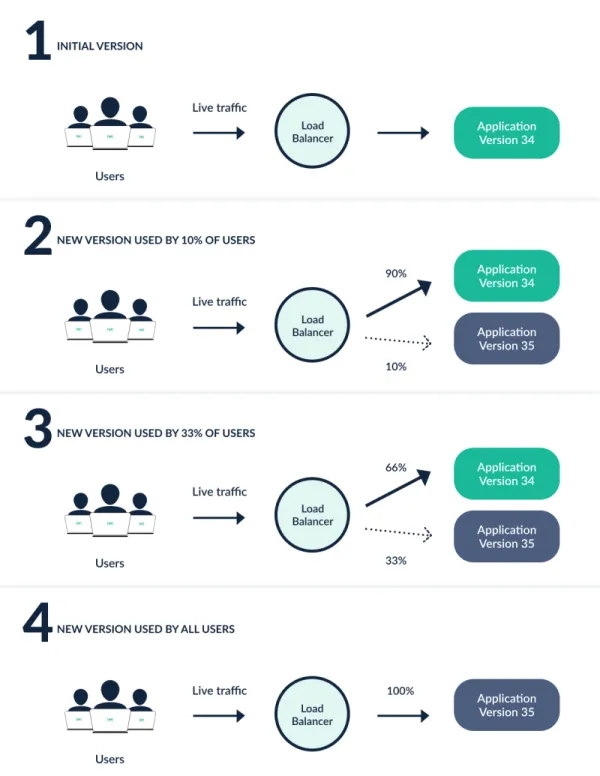

Redeploying after updating the app

- Let's update server.py and the VERSION file of the sample app, then ship the new version through the Jenkins Pipeline.

- First, update the sample app.

# Update

$ sed -i -e 's/0.0.1/0.0.2/g' server.py

$ echo "0.0.2" > VERSION

$ git add .

$ git commit -m "Update to 0.0.2"

# => [main c17ce89] Update to 0.0.2

# 2 files changed, 2 insertions(+), 2 deletions(-)

$ git push origin main

# => Enumerating objects: 7, done.

# Counting objects: 100% (7/7), done.

# Delta compression using up to 8 threads

# Compressing objects: 100% (3/3), done.

# Writing objects: 100% (4/4), 332 bytes | 332.00 KiB/s, done.

# Total 4 (delta 2), reused 0 (delta 0), pack-reused 0

# To http://10.0.4.3:3000/devops/dev-app.git

# 3531233..c17ce89 main -> main

- In Jenkins, click Build Now to build the new version and upload it to Docker Hub.

- Now that the new version is on Docker Hub, let's deploy it to the Kubernetes cluster.

# Increase the pod replica count

$ kubectl scale deployment timeserver --replicas 4

# => deployment.apps/timeserver scaled

$ kubectl get service,ep timeserver -owide

# => NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

# service/timeserver NodePort 10.96.204.127 <none> 80:30000/TCP 45m pod=timeserver-pod

#

# NAME ENDPOINTS AGE

# endpoints/timeserver 10.244.1.6:80,10.244.1.7:80,10.244.2.8:80 + 1 more... 45m

# Keep sending requests in a loop: check load balancing

$ while true; do curl -s --connect-timeout 1 http://127.0.0.1:30000 | grep name ; sleep 1 ; done

# => Server hostname: timeserver-549cc9bc89-kdmgx

# Server hostname: timeserver-549cc9bc89-h9q6p

# Server hostname: timeserver-549cc9bc89-kdmgx

# Server hostname: timeserver-549cc9bc89-xlbck

# Server hostname: timeserver-549cc9bc89-pvm5k

# ...

$ for i in {1..100}; do curl -s http://127.0.0.1:30000 | grep name; done | sort | uniq -c | sort -nr

# => 32 Server hostname: timeserver-549cc9bc89-xlbck

# 27 Server hostname: timeserver-549cc9bc89-pvm5k

# 25 Server hostname: timeserver-549cc9bc89-kdmgx

# 16 Server hostname: timeserver-549cc9bc89-h9q6p

# To roll out the update, change the container image with kubectl set image.

# $ kubectl set image deployment timeserver timeserver-container=$DHUSER/dev-app:0.0.Y && watch -d "kubectl get deploy,ep timeserver; echo; kubectl get rs,pod"

$ kubectl set image deployment timeserver timeserver-container=$DHUSER/dev-app:0.0.2 && watch -d "kubectl get deploy,ep timeserver; echo; kubectl get rs,pod"

# => Every 2.0s: kubectl get deploy,ep timeserver; echo; kubectl get rs,pod Balthazar.local: Sun Oct 01 01:17:30 2024

# ...

#

# NAME READY STATUS RESTARTS AGE

# pod/timeserver-549cc9bc89-h9q6p 1/1 Terminating 0 11m

# pod/timeserver-549cc9bc89-kdmgx 1/1 Terminating 0 88m

# pod/timeserver-549cc9bc89-pvm5k 1/1 Terminating 0 81m

# pod/timeserver-549cc9bc89-xlbck 1/1 Terminating 0 11m

# pod/timeserver-6f476fdbf-f8hw5 1/1 Running 0 9s

# pod/timeserver-6f476fdbf-k5fsn 1/1 Running 0 9s

# pod/timeserver-6f476fdbf-qq465 1/1 Running 0 4s

# pod/timeserver-6f476fdbf-tvrl5 1/1 Running 0 3s

# <span style="color: green;">👉 The old-version pods terminate and new pods are created up to the replica count.</span>

# To observe the rolling update, run the following command in a separate terminal.

$ while true; do curl -s --connect-timeout 1 http://127.0.0.1:30000; sleep 1 ; done

# => ...

# The time is 4:17:24 PM, VERSION 0.0.1

# Server hostname: timeserver-549cc9bc89-pvm5k

# The time is 4:17:25 PM, VERSION 0.0.1

# Server hostname: timeserver-549cc9bc89-xlbck

# The time is 4:17:26 PM, VERSION 0.0.1

# Server hostname: timeserver-549cc9bc89-xlbck

# The time is 4:17:27 PM, VERSION 0.0.1

# Server hostname: timeserver-549cc9bc89-pvm5k

# The time is 4:17:28 PM, VERSION 0.0.2

# Server hostname: timeserver-6f476fdbf-k5fsn

# The time is 4:17:29 PM, VERSION 0.0.2

# Server hostname: timeserver-6f476fdbf-qq465

# The time is 4:17:30 PM, VERSION 0.0.2

# Server hostname: timeserver-6f476fdbf-tvrl5

# The time is 4:17:31 PM, VERSION 0.0.2

# Server hostname: timeserver-6f476fdbf-f8hw5

# The time is 4:17:32 PM, VERSION 0.0.2

# ...

# <span style="color: green;">👉 The rolling update deploys the new version without service interruption.</span>

# Check the rolling update

$ kubectl get deploy,rs,pod,svc,ep -owide

# kubectl get deploy $DEPLOYMENT_NAME

$ kubectl get deploy timeserver

# => NAME READY UP-TO-DATE AVAILABLE AGE

# timeserver 4/4 4 4 90m

# <span style="color: green;">👉 READY shows how many pods, out of the total replicas, are able to serve traffic.</span>

# <span style="color: green;"> UP-TO-DATE shows how many pods are at the current version (state).</span>

$ kubectl get pods -l pod=timeserver-pod

# => NAME READY STATUS RESTARTS AGE

# timeserver-6f476fdbf-f8hw5 1/1 Running 0 3m3s

# timeserver-6f476fdbf-k5fsn 1/1 Running 0 3m3s

# timeserver-6f476fdbf-qq465 1/1 Running 0 2m58s

# timeserver-6f476fdbf-tvrl5 1/1 Running 0 2m57s
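The Deployment above does not set a `strategy`, so the Kubernetes defaults apply: a rolling update with `maxSurge: 25%` (rounded up) and `maxUnavailable: 25%` (rounded down). The Python sketch below walks through how old and new pod counts could evolve under those limits. It is a simplified model that assumes new pods become ready instantly; the real controller reacts to readiness over time, and `rolling_update_steps` is a hypothetical helper.

```python
import math
from typing import List, Tuple


def rolling_update_steps(
    replicas: int, max_surge_pct: int = 25, max_unavailable_pct: int = 25
) -> List[Tuple[int, int]]:
    """Return (old, new) pod counts after each step of a simplified rolling update."""
    max_surge = math.ceil(replicas * max_surge_pct / 100)  # percentage rounds up
    max_unavailable = math.floor(replicas * max_unavailable_pct / 100)  # rounds down
    if replicas > 0 and max_surge == 0 and max_unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be zero")

    old, new = replicas, 0
    steps = [(old, new)]
    min_available = replicas - max_unavailable
    while old > 0 or new < replicas:
        # Scale up the new ReplicaSet without exceeding replicas + maxSurge pods.
        new += max(min(replicas - new, replicas + max_surge - (old + new)), 0)
        # Scale down the old ReplicaSet while keeping at least min_available pods.
        old -= max(min(old, (old + new) - min_available), 0)
        steps.append((old, new))
    return steps


for old, new in rolling_update_steps(4):
    print(f"old={old} new={new} total={old + new}")
```

For 4 replicas the limits work out to at most 5 pods in total and at least 3 available at any moment, which is why requests keep being answered while versions 0.0.1 and 0.0.2 briefly coexist.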

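Automating the Jenkins Pipeline with a Gogs Webhook

- To automate the process of shipping a new version through the Jenkins Pipeline, let's set up a Gogs webhook.

- When a new version is pushed with git push, the Gogs webhook triggers the Jenkins Pipeline automatically, and the Docker image for the new version is uploaded to Docker Hub.

Editing the Gogs configuration and setting up the webhook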

- First, edit the app.ini file in the gogs container so that Gogs is allowed to deliver webhooks to Jenkins on the local network.

# Edit app.ini

$ docker compose exec gogs vi /data/gogs/conf/app.ini

# app.ini

...

[security]

INSTALL_LOCK = true

SECRET_KEY = atxaUPQcbAEwpIu

LOCAL_NETWORK_ALLOWLIST = 10.0.4.3 # your own PC's IP

...

- Restart the Gogs container.

$ docker compose restart gogs

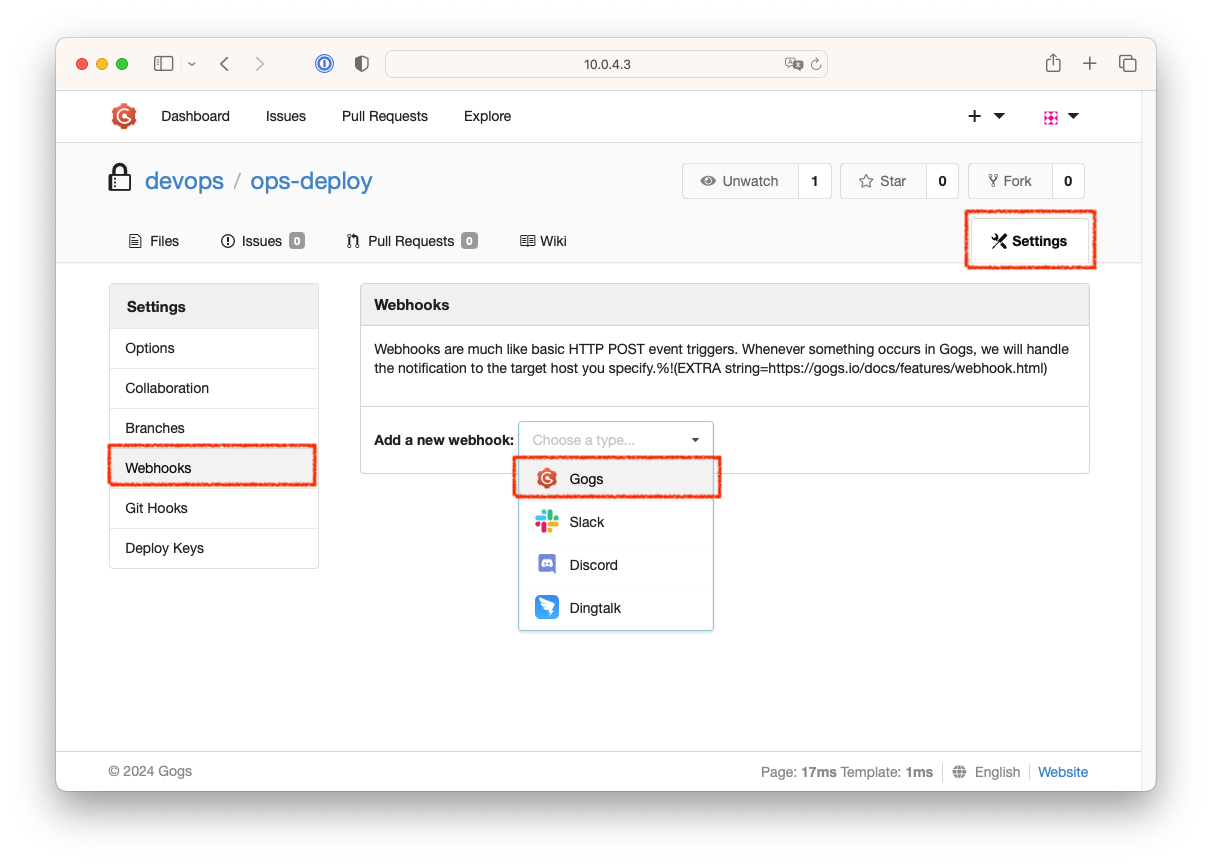

- Configure the webhook in Gogs.

- Select the repository, then go to Settings > Webhooks > Add a new webhook on the right and choose Gogs.

- Payload URL : http://<Jenkins IP = PC IP>:8080/gogs-webhook/?job=SCM-Pipeline/

- Content Type : application/json

- Secret : qwe123

- When should this webhook be triggered? : Just the push event

- Active : checked

- Click Add Webhook to save the webhook.

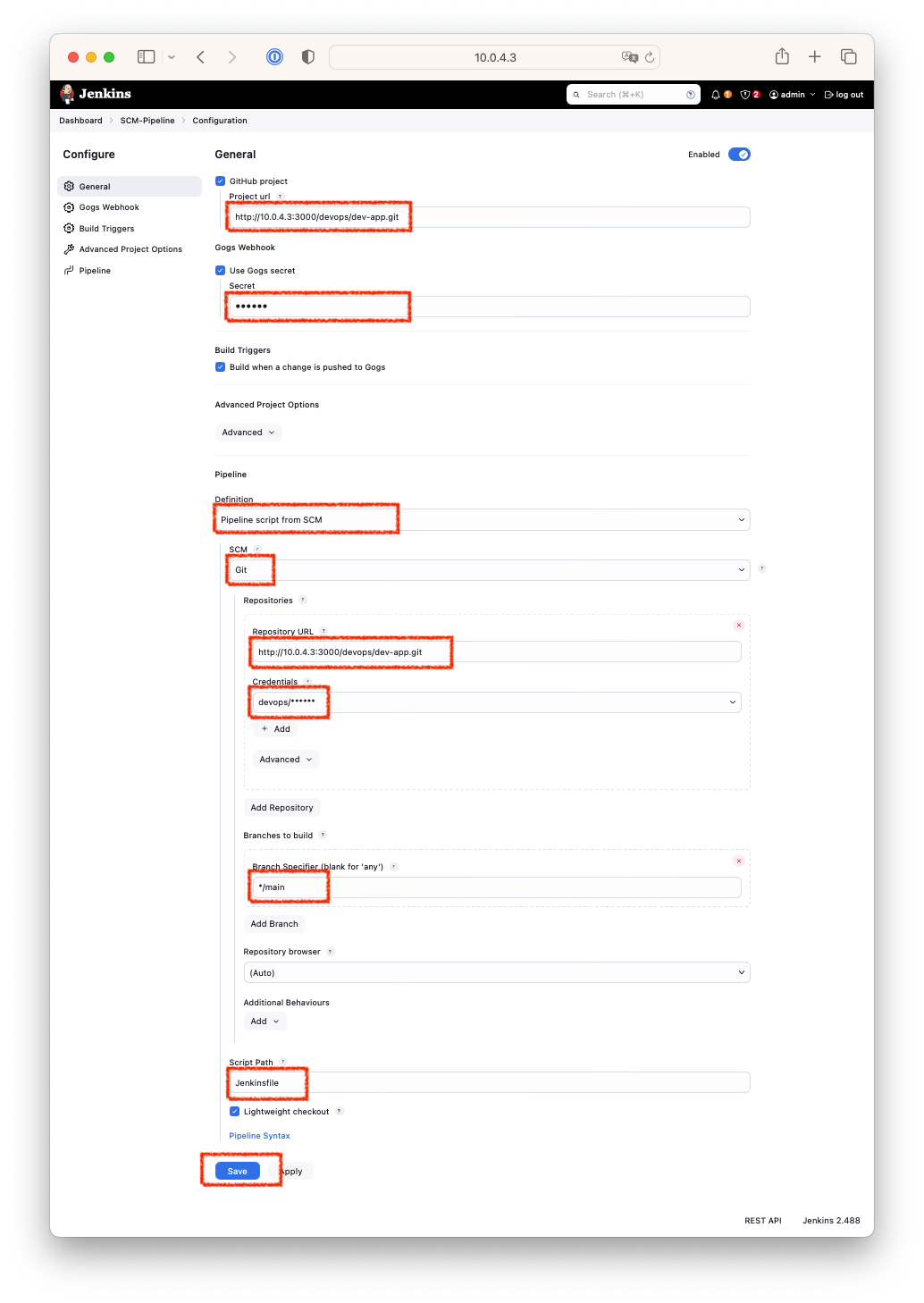
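The Secret configured on the webhook is what lets the receiver check that a delivery really came from Gogs. As far as I understand, Gogs signs the request body with HMAC-SHA256 using the secret and sends the hex digest in an `X-Gogs-Signature` header; treat that header name and scheme as an assumption to verify against your Gogs version. The sketch below shows the general verification technique in Python, not the Jenkins plugin's actual code; the payload is made up.

```python
import hashlib
import hmac


def sign_payload(secret: str, payload: bytes) -> str:
    """Compute the hex HMAC-SHA256 of the raw request body using the shared secret."""
    return hmac.new(secret.encode(), payload, hashlib.sha256).hexdigest()


def verify_signature(secret: str, payload: bytes, signature: str) -> bool:
    """Compare signatures in constant time to avoid leaking timing information."""
    return hmac.compare_digest(sign_payload(secret, payload), signature)


# Hypothetical push payload; a real delivery carries a much larger JSON body.
body = b'{"ref": "refs/heads/main"}'
signature = sign_payload("qwe123", body)

print(verify_signature("qwe123", body, signature))  # → True
print(verify_signature("wrong-secret", body, signature))  # → False
```

This is why the same secret (`qwe123` in this lab) has to be entered on both the Gogs side and the Jenkins side.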

Creating a Jenkins Pipeline triggered by the Gogs Webhook

- This time, let's create a Jenkins Pipeline that is triggered by the Gogs webhook created above and ships the new version.

- Select Dashboard > New Item.

- item name : SCM-Pipeline

- item type : Pipeline

- Click OK.

- Configure the Pipeline as follows.

- GitHub project : http://<PC IP>:3000/<Gogs account name>/dev-app.git

- Use Gogs secret : qwe123

- Build Triggers : check Build when a change is pushed to Gogs

- Pipeline script from SCM

  - SCM : Git

    - Repo URL : http://<PC IP>:3000/<Gogs account name>/dev-app.git

    - Credentials : devops/*

    - Branch : */main

  - Script Path : Jenkinsfile

Writing the Jenkinsfile and pushing to Git

- Write the Jenkinsfile.

pipeline {
    agent any
    environment {
        DOCKER_IMAGE = '<your Docker Hub account>/dev-app' // Docker image name
    }
    stages {
        stage('Checkout') {
            steps {
                git branch: 'main',
                url: 'http://<PC IP>:3000/devops/dev-app.git', // check out the code from Git
                credentialsId: 'gogs-crd' // Credentials ID
            }
        }
        stage('Read VERSION') {
            steps {
                script {
                    // Read the VERSION file
                    def version = readFile('VERSION').trim()
                    echo "Version found: ${version}"
                    // Export it as an environment variable
                    env.DOCKER_TAG = version
                }
            }
        }
        stage('Docker Build and Push') {
            steps {
                script {
                    docker.withRegistry('https://index.docker.io/v1/', 'dockerhub-crd') {
                        // Use DOCKER_TAG as the image tag
                        def appImage = docker.build("${DOCKER_IMAGE}:${DOCKER_TAG}")
                        appImage.push()
                        appImage.push("latest")
                    }
                }
            }
        }
    }
    post {
        success {
            echo "Docker image ${DOCKER_IMAGE}:${DOCKER_TAG} has been built and pushed successfully!"
        }
        failure {
            echo "Pipeline failed. Please check the logs."
        }
    }
}

- Change the version in the VERSION file and server.py from 0.0.2 to 0.0.3.

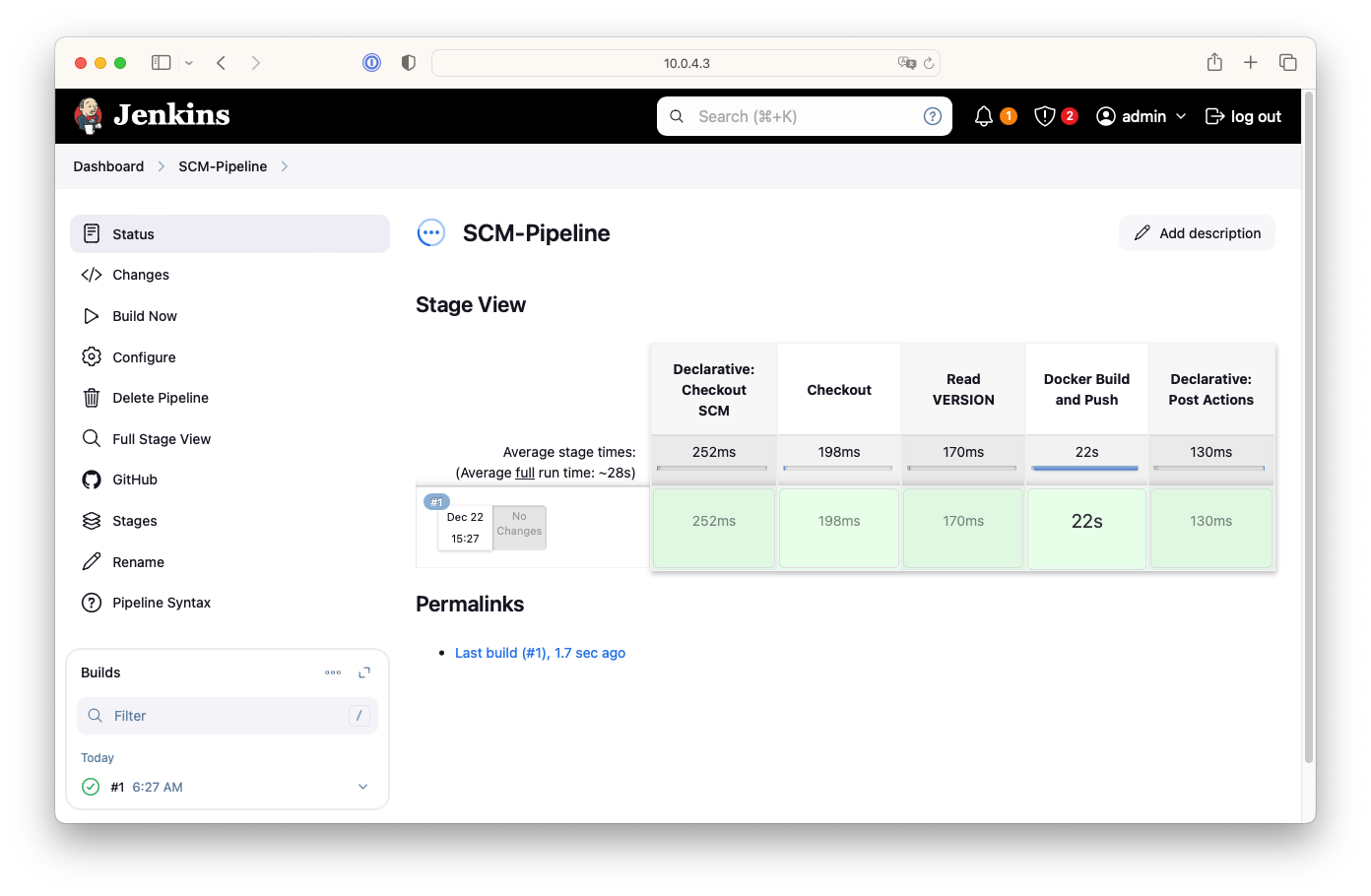

- Push the changed files

$ git add . && git commit -m "VERSION $(cat VERSION) Changed" && git push -u origin main

- Almost immediately after the push, a build started in Jenkins and completed successfully.

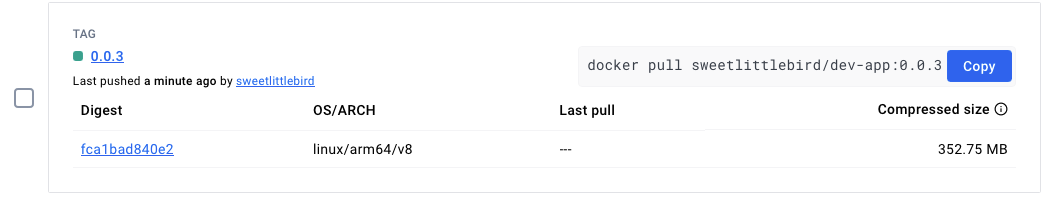

- Version 0.0.3 was also uploaded to Docker Hub.

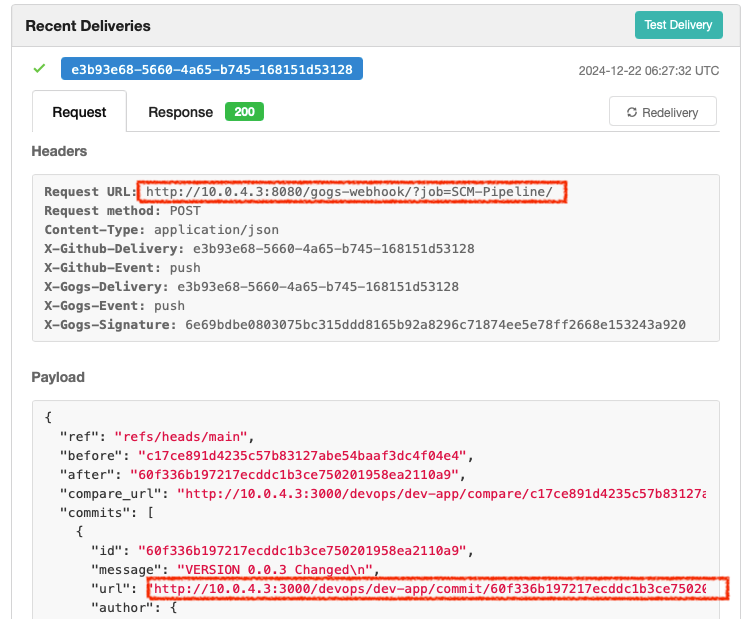

- In Gogs, open Repository > Settings > Webhooks and click the webhook to see its delivery log. It confirms that the webhook was delivered from Gogs to Jenkins.

- Finally, deploy the new version to the Kubernetes cluster.

$ kubectl set image deployment timeserver timeserver-container=$DHUSER/dev-app:0.0.3 && watch -d "kubectl get deploy,ep timeserver; echo; kubectl get rs,pod"

Jenkins CI/CD + K8S (Kind)

- This time, let's set things up so that Jenkins can deploy directly to the Kubernetes cluster.

- Install the required tools (kubectl, helm) inside the Jenkins container.

# Install kubectl, helm

$ docker compose exec --privileged -u root jenkins bash

--------------------------------------------

#curl -LO "https://dl.k8s.io/release/v1.31.0/bin/linux/amd64/kubectl"

$ curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/arm64/kubectl" # arm64 host (e.g. Apple Silicon macOS)

# $ curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl" # amd64 host (e.g. Windows or Intel PC)

$ install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl

$ kubectl version --client=true

# => Client Version: v1.32.0

# Kustomize Version: v5.5.0

#

$ curl https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 | bash

$ helm version

# => version.BuildInfo{Version:"v3.16.4", GitCommit:"7877b45b63f95635153b29a42c0c2f4273ec45ca", GitTreeState:"clean", GoVersion:"go1.22.7"}

$ exit

--------------------------------------------

$ docker compose exec jenkins kubectl version --client=true

# => Client Version: v1.32.0

# Kustomize Version: v5.5.0

$ docker compose exec jenkins helm version

# => version.BuildInfo{Version:"v3.16.4", GitCommit:"7877b45b63f95635153b29a42c0c2f4273ec45ca", GitTreeState:"clean", GoVersion:"go1.22.7"}

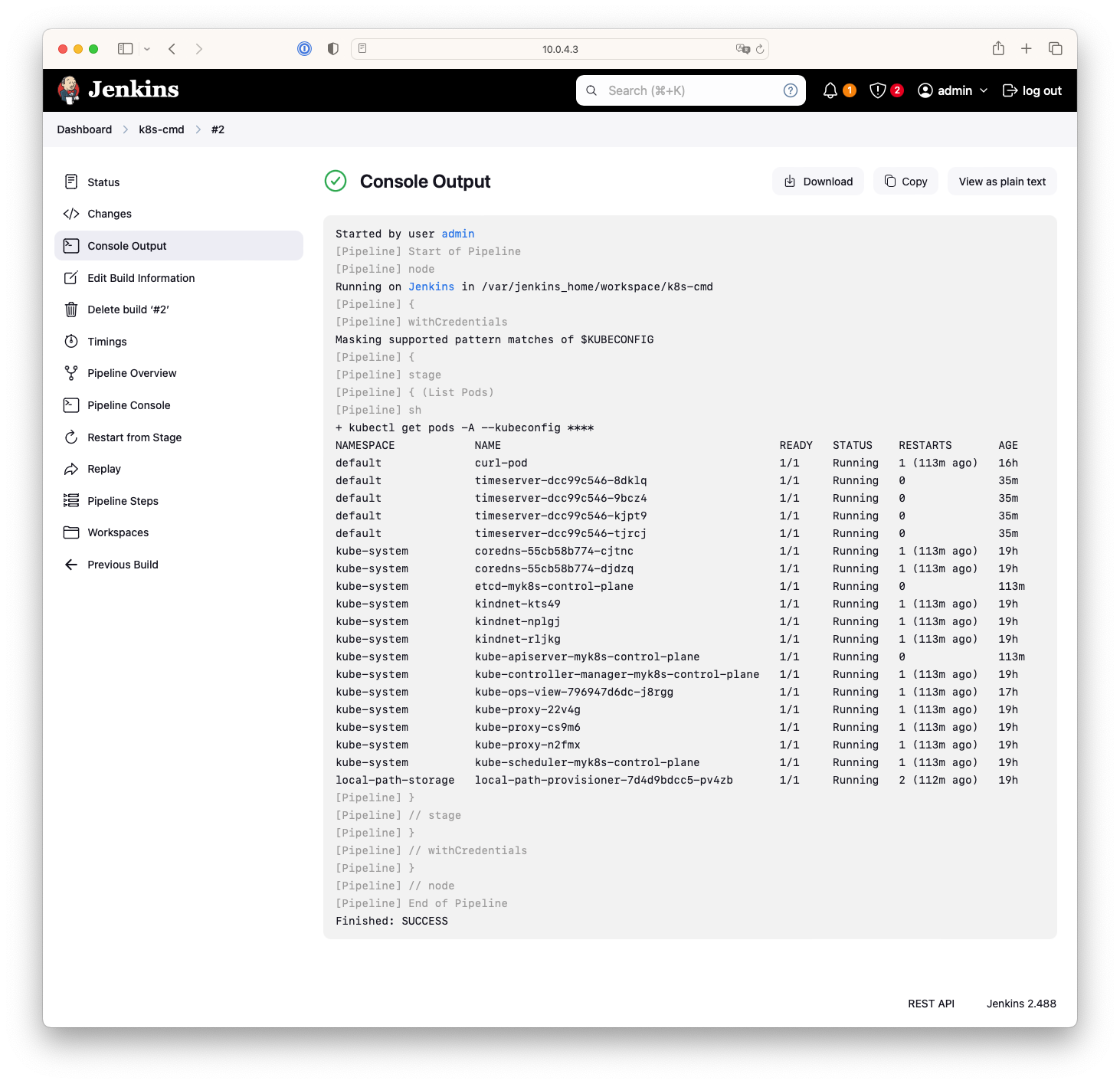
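The only difference between the two `curl` commands above is the architecture segment of the URL, which must match the CPU architecture of the Jenkins container rather than the host OS name. As a small illustration, this Python sketch maps the string reported by `uname -m` to the matching download URL; `kubectl_download_url` and the alias table are hypothetical helpers, and the URL layout is simply the one used in the commands above.

```python
# Maps `uname -m` style names to the architecture names used in the download URL.
_ARCH_ALIASES = {
    "x86_64": "amd64",
    "amd64": "amd64",
    "aarch64": "arm64",
    "arm64": "arm64",
}


def kubectl_download_url(version: str, machine: str) -> str:
    """Build the kubectl download URL for a Linux container of the given architecture."""
    arch = _ARCH_ALIASES.get(machine.lower())
    if arch is None:
        raise ValueError(f"unsupported architecture: {machine}")
    return f"https://dl.k8s.io/release/{version}/bin/linux/{arch}/kubectl"


# Inside the container, `uname -m` typically prints x86_64 or aarch64.
for machine in ("x86_64", "aarch64"):
    print(machine, "->", kubectl_download_url("v1.32.0", machine))
```

Running `uname -m` inside the Jenkins container before downloading is a quick way to confirm which of the two commands applies.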

- Create a Jenkins item (Pipeline) : item name(k8s-cmd)

pipeline {
    agent any
    environment {
        KUBECONFIG = credentials('k8s-crd')
    }
    stages {
        stage('List Pods') {
            steps {
                sh '''
                # Fetch and display Pods
                kubectl get pods -A --kubeconfig "$KUBECONFIG"
                '''
            }
        }
    }
}

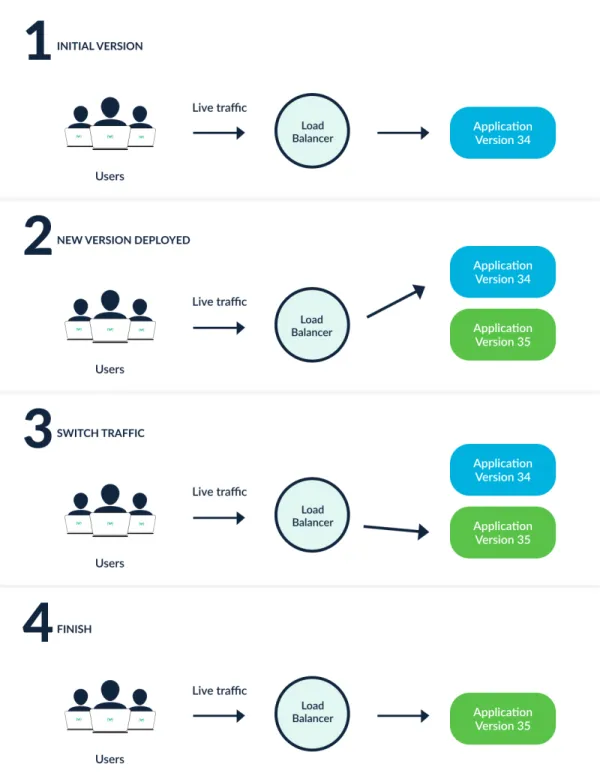

Blue-green deployment hands-on with Jenkins

- Write the Deployment / Service YAML files (http-echo) and push the code

#

$ cd dev-app

#

$ mkdir deploy

#

$ cat > deploy/echo-server-blue.yaml <<EOF

apiVersion: apps/v1
kind: Deployment
metadata:
  name: echo-server-blue
spec:
  replicas: 2
  selector:
    matchLabels:
      app: echo-server
      version: blue
  template:
    metadata:
      labels:
        app: echo-server
        version: blue
    spec:
      containers:
      - name: echo-server
        image: hashicorp/http-echo
        args:
        - "-text=Hello from Blue"
        ports:
        - containerPort: 5678

EOF

$ cat > deploy/echo-server-service.yaml <<EOF

apiVersion: v1
kind: Service
metadata:
  name: echo-server-service
spec:
  selector:
    app: echo-server
    version: blue
  ports:
  - protocol: TCP
    port: 80
    targetPort: 5678
    nodePort: 30000
  type: NodePort

EOF

$ cat > deploy/echo-server-green.yaml <<EOF

apiVersion: apps/v1
kind: Deployment
metadata:
  name: echo-server-green
spec:
  replicas: 2
  selector:
    matchLabels:
      app: echo-server
      version: green
  template:
    metadata:
      labels:
        app: echo-server
        version: green
    spec:
      containers:
      - name: echo-server
        image: hashicorp/http-echo
        args:
        - "-text=Hello from Green"
        ports:
        - containerPort: 5678

EOF

#

$ git add . && git commit -m "Add echo server yaml" && git push -u origin main

# => [main 76adc73] Add echo server yaml

# 3 files changed, 60 insertions(+)

# create mode 100644 deploy/echo-server-blue.yaml

# create mode 100644 deploy/echo-server-green.yaml

# create mode 100644 deploy/echo-server-service.yaml

# Enumerating objects: 7, done.

# Counting objects: 100% (7/7), done.

# Delta compression using up to 8 threads

# Compressing objects: 100% (6/6), done.

# Writing objects: 100% (6/6), 789 bytes | 789.00 KiB/s, done.

# Total 6 (delta 2), reused 0 (delta 0), pack-reused 0

# To http://10.0.4.3:3000/devops/dev-app.git

# 60f336b..76adc73 main -> main

# branch 'main' set up to track 'origin/main'.

- Let's write a Pipeline in Jenkins and deploy with it.

- First, delete the deployment and service from the previous exercise.

$ kubectl delete deploy,svc timeserver

# => deployment.apps "timeserver" deleted

# service "timeserver" deleted

- Start a request loop in advance.

# Run in a separate terminal

$ while true; do curl -s --connect-timeout 1 http://127.0.0.1:30000 ; echo ; sleep 1 ; kubectl get deploy -owide ; echo ; kubectl get svc,ep echo-server-service -owide ; echo "------------" ; done

# or

$ while true; do curl -s --connect-timeout 1 http://127.0.0.1:30000 ; date ; echo "------------" ; sleep 1 ; done

- Create a Pipeline in Jenkins : item name(k8s-bluegreen)

pipeline {
    agent any
    environment {
        KUBECONFIG = credentials('k8s-crd')
    }
    stages {
        stage('Checkout') {
            steps {
                git branch: 'main',
                url: 'http://<PC IP>:3000/devops/dev-app.git', // check out the code from Git
                credentialsId: 'gogs-crd' // Credentials ID
            }
        }
        stage('container image build') {
            steps {
                echo "container image build"
            }
        }
        stage('container image upload') {
            steps {
                echo "container image upload"
            }
        }
        stage('k8s deployment blue version') {
            steps {
                sh "kubectl apply -f ./deploy/echo-server-blue.yaml --kubeconfig $KUBECONFIG"
                sh "kubectl apply -f ./deploy/echo-server-service.yaml --kubeconfig $KUBECONFIG"
            }
        }
        stage('approve green version') {
            steps {
                input message: 'approve green version', ok: "Yes"
            }
        }
        stage('k8s deployment green version') {
            steps {
                sh "kubectl apply -f ./deploy/echo-server-green.yaml --kubeconfig $KUBECONFIG"
            }
        }
        stage('approve version switching') {
            steps {
                script {
                    returnValue = input message: 'Green switching?', ok: "Yes", parameters: [booleanParam(defaultValue: true, name: 'IS_SWITCHED')]
                    if (returnValue) {
                        sh "kubectl patch svc echo-server-service -p '{\"spec\": {\"selector\": {\"version\": \"green\"}}}' --kubeconfig $KUBECONFIG"
                    }
                }
            }
        }
        stage('Blue Rollback') {
            steps {
                script {
                    returnValue = input message: 'Blue Rollback?', parameters: [choice(choices: ['done', 'rollback'], name: 'IS_ROLLBACk')]
                    if (returnValue == "done") {
                        sh "kubectl delete -f ./deploy/echo-server-blue.yaml --kubeconfig $KUBECONFIG"
                    }
                    if (returnValue == "rollback") {
                        sh "kubectl patch svc echo-server-service -p '{\"spec\": {\"selector\": {\"version\": \"blue\"}}}' --kubeconfig $KUBECONFIG"
                    }
                }
            }
        }
    }
}

- Deploy with Build Now and check the behavior.

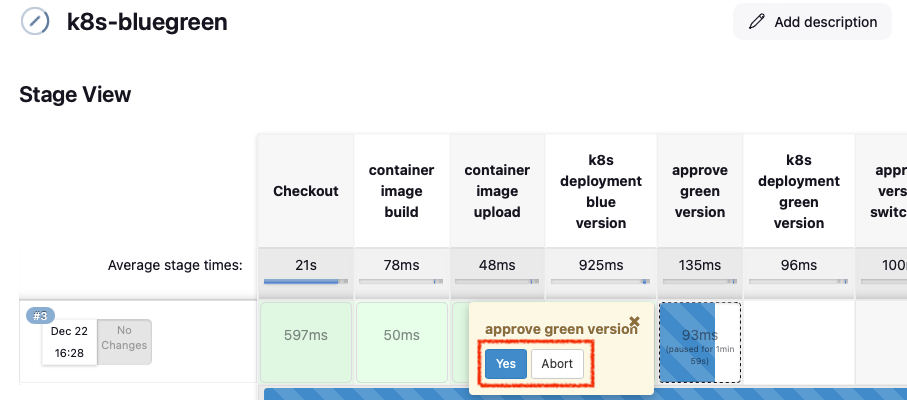

- After the blue version is deployed, the pipeline asks for approval to deploy the green version.

- Once approved, the green version is deployed. However, traffic still flows only to blue.

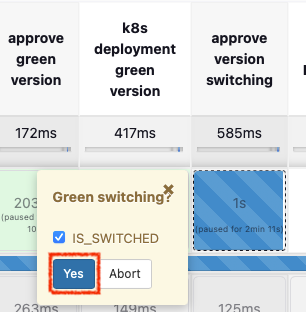

$ kubectl get deploy -owide ; echo ; kubectl get svc,ep echo-server-service -owide

# => Hello from Blue

#

# NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

# echo-server-blue 2/2 2 2 3m46s echo-server hashicorp/http-echo app=echo-server,version=blue

# echo-server-green 2/2 2 2 31s echo-server hashicorp/http-echo app=echo-server,version=green

#

# NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

# service/echo-server-service NodePort 10.96.40.148 <none> 80:30000/TCP 3m45s app=echo-server,version=blue

#

# NAME ENDPOINTS AGE

# endpoints/echo-server-service 10.244.1.8:5678,10.244.2.8:5678 3m45s

- Once you approve the switch to green, traffic finally flows to green.

$ kubectl get deploy -owide ; echo ; kubectl get svc,ep echo-server-service -owide

# => Hello from Green

#

# NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

# echo-server-blue 2/2 2 2 6m14s echo-server hashicorp/http-echo app=echo-server,version=blue

# echo-server-green 2/2 2 2 2m59s echo-server hashicorp/http-echo app=echo-server,version=green

#

# NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

# service/echo-server-service NodePort 10.96.40.148 <none> 80:30000/TCP 6m13s app=echo-server,version=green

#

# NAME ENDPOINTS AGE

# endpoints/echo-server-service 10.244.1.9:5678,10.244.2.9:5678 6m13s

- Finally, the pipeline asks whether to roll back to blue. Choosing done deletes the blue deployment; choosing rollback points the Service back at blue.

# => Hello from Green

#

# NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

# echo-server-green 2/2 2 2 4m55s echo-server hashicorp/http-echo app=echo-server,version=green

#

# NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

# service/echo-server-service NodePort 10.96.40.148 <none> 80:30000/TCP 8m9s app=echo-server,version=green

#

# NAME ENDPOINTS AGE

# endpoints/echo-server-service 10.244.1.9:5678,10.244.2.9:5678 8m9s

- Clean up after finishing the exercise

$ kubectl delete deploy echo-server-blue echo-server-green

$ kubectl delete svc echo-server-service

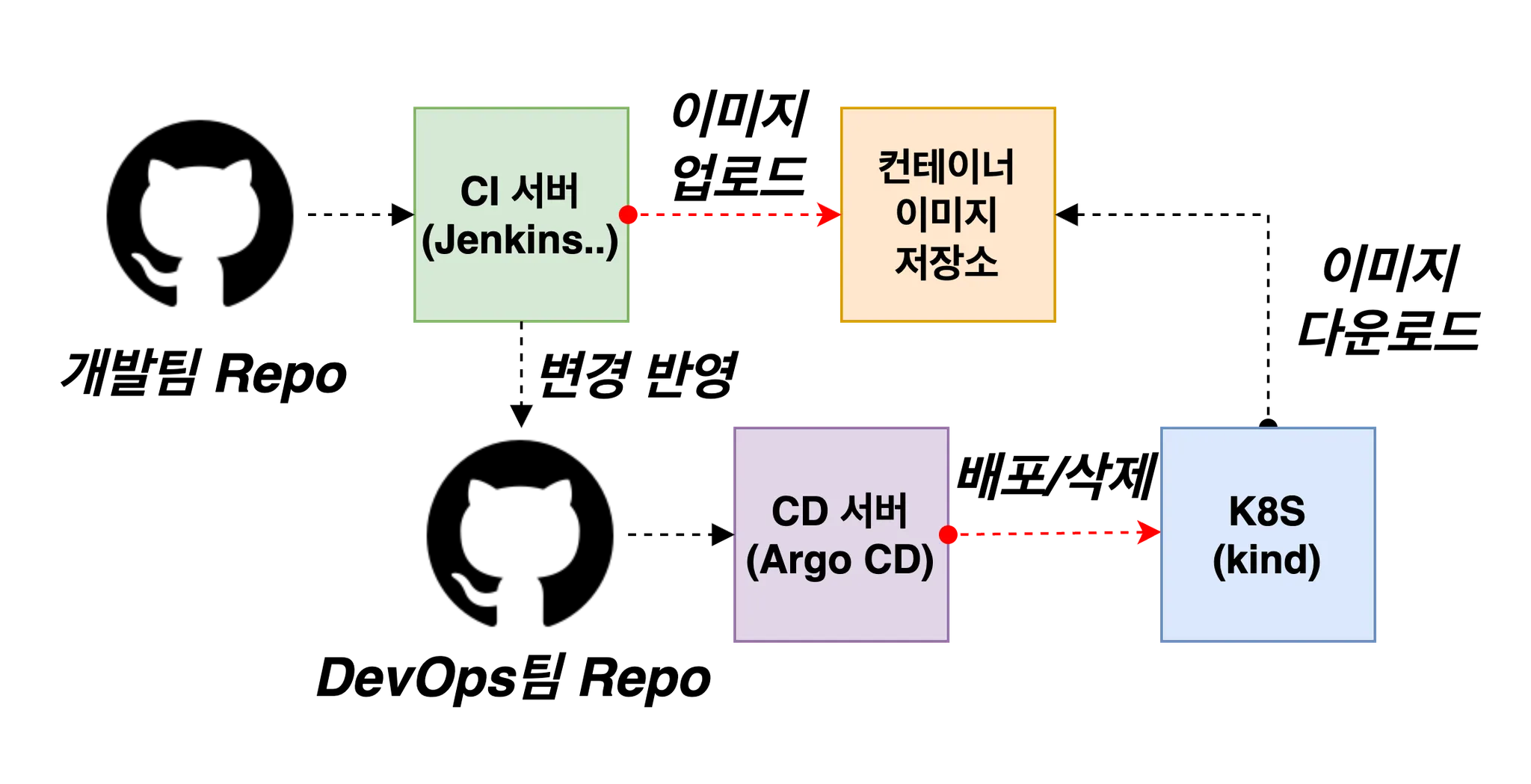
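One detail worth noting in the blue-green pipeline: the `kubectl patch` payload only mentions `version`, yet the Service selector afterwards still contains `app=echo-server`. That is because the patch is merged into the existing object instead of replacing it. The Python sketch below illustrates this with a simple recursive map merge in the style of a JSON merge patch; it is a simplified illustration (kubectl's default strategic merge patch has extra rules for lists), and `merge_patch` is a hypothetical helper.

```python
import copy


def merge_patch(target: dict, patch: dict) -> dict:
    """Recursively merge `patch` into a copy of `target`; a None value deletes a key."""
    result = copy.deepcopy(target)
    for key, value in patch.items():
        if value is None:
            result.pop(key, None)
        elif isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge_patch(result[key], value)
        else:
            result[key] = copy.deepcopy(value)
    return result


service = {
    "spec": {
        "selector": {"app": "echo-server", "version": "blue"},
        "type": "NodePort",
    }
}

# Same payload as the pipeline's `kubectl patch svc echo-server-service -p ...`.
patched = merge_patch(service, {"spec": {"selector": {"version": "green"}}})
print(patched["spec"]["selector"])
# → {'app': 'echo-server', 'version': 'green'}
```

Since only the selector changes, the switch and the rollback are both a single small update to the Service, while the blue and green Deployments keep running untouched.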

Jenkins CI + ArgoCD + K8S (Kind)

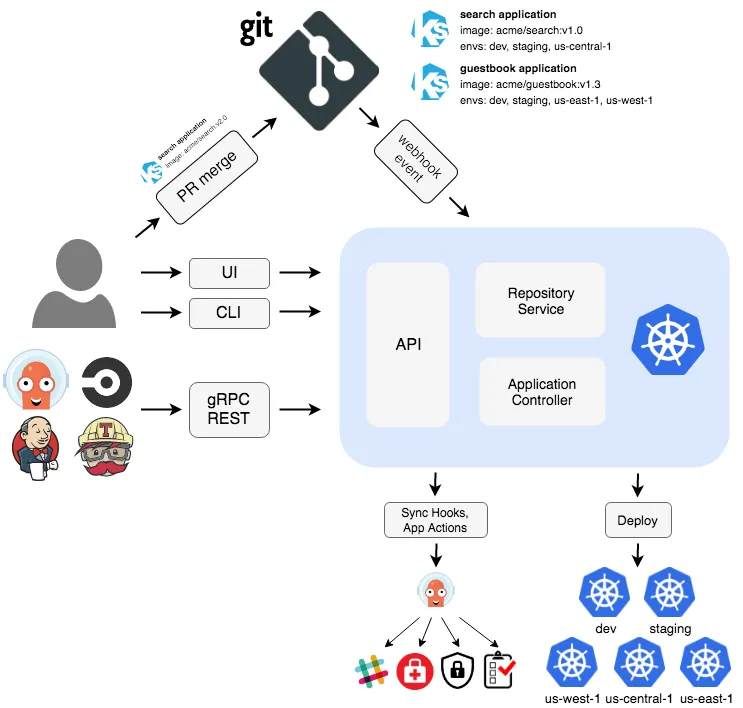

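Introduction to ArgoCD

- ArgoCD is a CD tool that supports GitOps. It continuously monitors the state of the applications deployed to a Kubernetes cluster, and when the live state differs from the state defined in the Git repository, it can synchronize automatically to keep the application in the desired state.

- ArgoCD architecture

- ArgoCD lets you define the Kubernetes manifests that describe the deployed application's state in the following ways.

- For details, see the official website or 악분's ArgoCD write-up blog.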
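The core GitOps idea described above, comparing the desired state from Git with the live state in the cluster, can be sketched in a few lines of Python. This is only a conceptual illustration and not how Argo CD is implemented; `diff_state`, `sync_status`, and the resource dictionaries are hypothetical, while `Synced` and `OutOfSync` are the status names Argo CD shows in its UI.

```python
from typing import Dict


def diff_state(desired: Dict[str, dict], live: Dict[str, dict]) -> Dict[str, str]:
    """Return the action needed per resource to make `live` match `desired`."""
    actions = {}
    for name in sorted(set(desired) | set(live)):
        if name not in live:
            actions[name] = "create"
        elif name not in desired:
            actions[name] = "prune"
        elif desired[name] != live[name]:
            actions[name] = "update"
    return actions


def sync_status(desired: Dict[str, dict], live: Dict[str, dict]) -> str:
    """Report Synced when there is nothing to do, OutOfSync otherwise."""
    return "OutOfSync" if diff_state(desired, live) else "Synced"


# Desired state as it might be rendered from Git (hypothetical values).
desired = {"deployment/dev-nginx": {"image": "nginx:1.26.2", "replicas": 2}}
# Live state as it might be observed in the cluster.
live = {"deployment/dev-nginx": {"image": "nginx:1.26.1", "replicas": 1}}

print(sync_status(desired, live))  # → OutOfSync
print(diff_state(desired, live))   # → {'deployment/dev-nginx': 'update'}
```

A sync then amounts to applying those actions until the diff is empty, at which point the application reports `Synced` again.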

Installing ArgoCD and basic configuration

- Let's install ArgoCD and go through the basic configuration.

# Create the namespace and write the values file

$ kubectl create ns argocd

# => namespace/argocd created

$ cat <<EOF > argocd-values.yaml

dex:
  enabled: false
server:
  service:
    type: NodePort
    nodePortHttps: 30002

EOF

# Install

$ helm repo add argo https://argoproj.github.io/argo-helm

# => "argo" has been added to your repositories

$ helm install argocd argo/argo-cd --version 7.7.10 -f argocd-values.yaml --namespace argocd

# => NAME: argocd

# LAST DEPLOYED: Sun Oct 01 16:53:42 2024

# NAMESPACE: argocd

# STATUS: deployed

# REVISION: 1

# TEST SUITE: None

# NOTES:

# In order to access the server UI you have the following options:

#

# 1. kubectl port-forward service/argocd-server -n argocd 8080:443

# and then open the browser on http://localhost:8080 and accept the certificate

#

# 2. enable ingress in the values file `server.ingress.enabled` and either

# - Add the annotation for ssl passthrough: https://argo-cd.readthedocs.io/en/stable/operator-manual/ingress/#option-1-ssl-passthrough

# - Set the `configs.params."server.insecure"` in the values file and terminate SSL at your ingress: https://argo-cd.readthedocs.io/en/stable/operator-manual/ingress/#option-2-multiple-ingress-objects-and-hosts

#

# After reaching the UI the first time you can login with username: admin and the random password generated during the installation. You can find the password by running:

# kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d

# (You should delete the initial secret afterwards as suggested by the Getting Started Guide: https://argo-cd.readthedocs.io/en/stable/getting_started/#4-login-using-the-cli)

# Verify

$ kubectl get pod,svc,ep -n argocd

# => NAME READY STATUS RESTARTS AGE

# pod/argocd-application-controller-0 1/1 Running 0 4m55s

# pod/argocd-applicationset-controller-856f6bd788-zvtd2 1/1 Running 0 4m55s

# pod/argocd-notifications-controller-764b9d6597-z4mrx 1/1 Running 0 4m55s

# pod/argocd-redis-5c67786686-qwx8f 1/1 Running 0 4m55s

# pod/argocd-repo-server-c9f8b6dbf-jpjcq 1/1 Running 0 4m55s

# pod/argocd-server-7bff46b6bd-7n6vx 1/1 Running 0 4m55s

#

# NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

# service/argocd-applicationset-controller ClusterIP 10.96.166.245 <none> 7000/TCP 4m55s

# service/argocd-redis ClusterIP 10.96.46.103 <none> 6379/TCP 4m55s

# service/argocd-repo-server ClusterIP 10.96.117.132 <none> 8081/TCP 4m55s

# service/argocd-server NodePort 10.96.75.70 <none> 80:30080/TCP,443:30002/TCP 4m55s

#

# NAME ENDPOINTS AGE

# endpoints/argocd-applicationset-controller 10.244.2.13:7000 4m55s

# endpoints/argocd-redis 10.244.2.12:6379 4m55s

# endpoints/argocd-repo-server 10.244.1.11:8081 4m55s

# endpoints/argocd-server 10.244.1.10:8080,10.244.1.10:8080 4m55s

$ kubectl get crd | grep argo

# => applications.argoproj.io 2024-10-01T07:54:01Z

# applicationsets.argoproj.io 2024-10-01T07:54:01Z

# appprojects.argoproj.io 2024-10-01T07:54:01Z

# Retrieve the initial admin password

$ kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d ;echo

# => ZaQfvE9xrehyKxvl

# Open the Argo CD web UI: log in with the initial password (admin account)

$ open "https://127.0.0.1:30002" # macOS

## On Windows, open https://127.0.0.1:30002 directly in a web browser

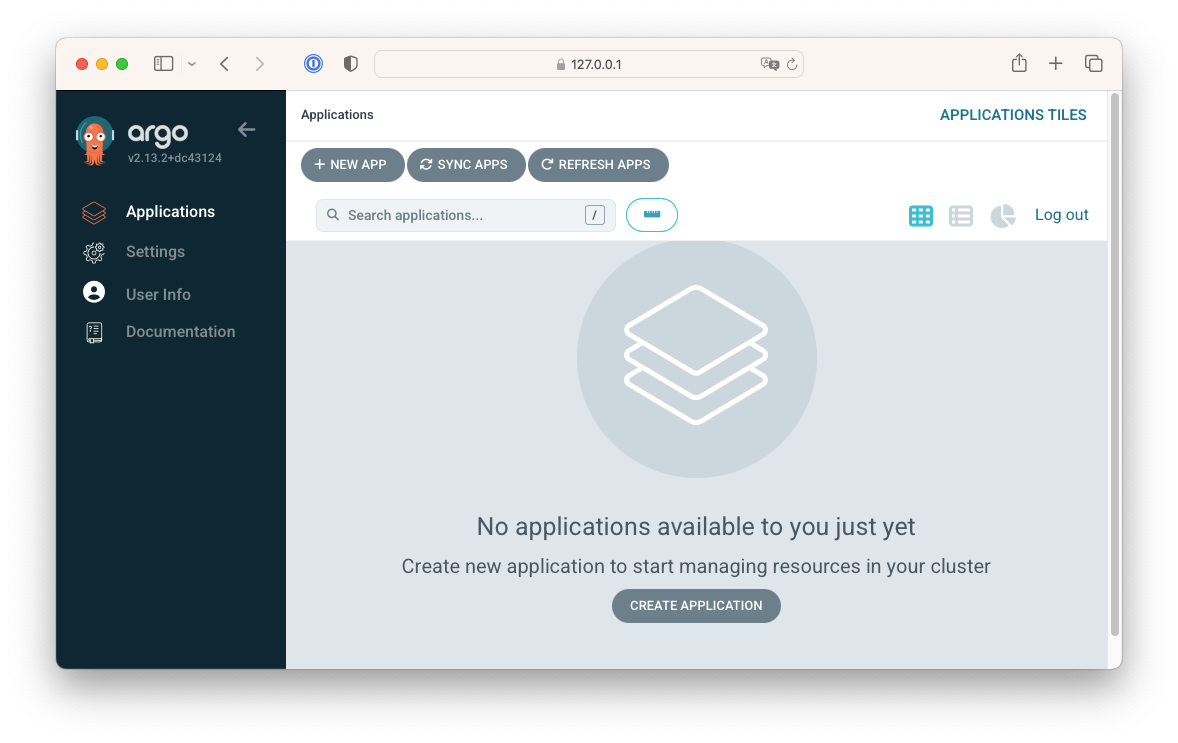

- Verify Argo CD web access: log in with the initial password retrieved above.

Argo CD web initial screen

- Change the admin account password under User Info > Update password. (qwe12345)

- Review the basic information under Settings > Clusters, Projects, Accounts, and so on.

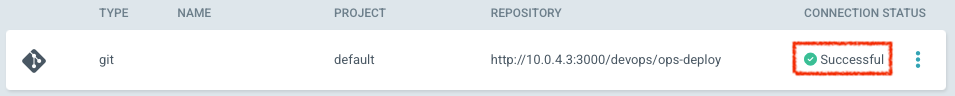

- For the hands-on, let's register the ops-deploy repo.

- Click Settings > Repositories > Connect Repo

- connection method : VIA HTTPS

- Type : git

- Project : default

- Repo URL : http://<PC IP>:3000/devops/ops-deploy

- Username : devops

- Password :

=> Fill in the fields and click CONNECT

- If all the information is correct and the connection succeeds, the connection status is shown as Successful.

Deployment hands-on with a Helm chart

#

$ mkdir nginx-chart

$ cd nginx-chart

$ mkdir templates

$ cat > templates/configmap.yaml <<EOF

apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ .Release.Name }}
data:
  index.html: |
{{ .Values.indexHtml | indent 4 }}

EOF

$ cat > templates/deployment.yaml <<EOF

apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ .Release.Name }}
spec:
  replicas: {{ .Values.replicaCount }}
  selector:
    matchLabels:
      app: {{ .Release.Name }}
  template:
    metadata:
      labels:
        app: {{ .Release.Name }}
    spec:
      containers:
      - name: nginx
        image: {{ .Values.image.repository }}:{{ .Values.image.tag }}
        ports:
        - containerPort: 80
        volumeMounts:
        - name: index-html
          mountPath: /usr/share/nginx/html/index.html
          subPath: index.html
      volumes:
      - name: index-html
        configMap:
          name: {{ .Release.Name }}

EOF

$ cat > templates/service.yaml <<EOF

apiVersion: v1
kind: Service
metadata:
  name: {{ .Release.Name }}
spec:
  selector:
    app: {{ .Release.Name }}
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 30000
  type: NodePort

EOF

$ cat > values.yaml <<EOF

indexHtml: |
  <!DOCTYPE html>
  <html>
  <head>
  <title>Welcome to Nginx!</title>
  </head>
  <body>
  <h1>Hello, Kubernetes!</h1>
  <p>Nginx version 1.26.1</p>
  </body>
  </html>

image:
  repository: nginx
  tag: 1.26.1

replicaCount: 1

EOF

$ cat > Chart.yaml <<EOF

apiVersion: v2

name: nginx-chart

description: A Helm chart for deploying Nginx with custom index.html

type: application

version: 1.0.0

appVersion: "1.26.1"

EOF
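The ConfigMap template pipes `.Values.indexHtml` through `indent 4` so that the multi-line HTML lands inside the `index.html: |` block scalar with the right indentation. The Python sketch below imitates that one step to show why the indentation matters. It mirrors what the `indent` template function does (prefix every line with N spaces) but is not Helm itself; `render_configmap` and the sample values are hypothetical.

```python
def indent(width: int, text: str) -> str:
    """Prefix every line of `text` with `width` spaces, like the `indent` template function."""
    pad = " " * width
    return pad + text.replace("\n", "\n" + pad)


def render_configmap(release_name: str, index_html: str) -> str:
    """Render a ConfigMap manifest similar to templates/configmap.yaml."""
    return (
        "apiVersion: v1\n"
        "kind: ConfigMap\n"
        "metadata:\n"
        f"  name: {release_name}\n"
        "data:\n"
        "  index.html: |\n"
        f"{indent(4, index_html)}\n"
    )


# Shortened stand-in for the indexHtml value in values.yaml.
html = "<html>\n<body>\n<h1>Hello, Kubernetes!</h1>\n</body>\n</html>"
print(render_configmap("dev-nginx", html))
```

Without the indentation, the HTML lines would start at column 0 and YAML would no longer treat them as part of the `index.html` value. Running `helm template dev-nginx .` in the chart directory is a convenient way to inspect the real rendered output.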

# Delete the previous timeserver/service (nodeport)

$ kubectl delete deploy,svc --all

# Try deploying directly

$ helm install dev-nginx . -f values.yaml

# => NAME: dev-nginx

# LAST DEPLOYED: Sun Oct 01 19:54:23 2024

# NAMESPACE: default

# STATUS: deployed

# REVISION: 1

# TEST SUITE: None

$ helm list

# => NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

# dev-nginx default 1 2024-10-01 19:54:23.03644 +0900 KST deployed nginx-chart-1.0.0 1.26.1

$ kubectl get deploy,svc,ep,cm dev-nginx -owide

# => NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

# deployment.apps/dev-nginx 1/1 1 1 4s nginx nginx:1.26.1 app=dev-nginx

#

# NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

# service/dev-nginx NodePort 10.96.79.233 <none> 80:30000/TCP 4s app=dev-nginx

#

# NAME ENDPOINTS AGE

# endpoints/dev-nginx 10.244.1.16:80 4s

#

# NAME DATA AGE

# configmap/dev-nginx 1 4s

#

$ curl http://127.0.0.1:30000

# => <!DOCTYPE html>

# <html>

# <head>

# <title>Welcome to Nginx!</title>

# </head>

# <body>

# <h1>Hello, Kubernetes!</h1>

# <p>Nginx version 1.26.1</p>

# </body>

# </html>

$ curl -s http://127.0.0.1:30000 | grep version

# => <p>Nginx version 1.26.1</p>

$ open http://127.0.0.1:30000

# Change the values and apply them: version/tag, replicaCount

$ cat > values.yaml <<EOF

indexHtml: |
  <!DOCTYPE html>
  <html>
  <head>
  <title>Welcome to Nginx!</title>
  </head>
  <body>
  <h1>Hello, Kubernetes!</h1>
  <p>Nginx version 1.26.2</p>
  </body>
  </html>

image:
  repository: nginx
  tag: 1.26.2

replicaCount: 2

EOF

# Apply the Helm chart upgrade

$ helm upgrade dev-nginx . -f values.yaml

# => Release "dev-nginx" has been upgraded. Happy Helming!

# NAME: dev-nginx

# LAST DEPLOYED: Sun Oct 01 19:56:59 2024

# NAMESPACE: default

# STATUS: deployed

# REVISION: 2

# TEST SUITE: None

# Verify

$ helm list

# => NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

# dev-nginx default 2 2024-10-01 19:56:59.30731 +0900 KST deployed nginx-chart-1.0.0 1.26.1

$ kubectl get deploy,svc,ep,cm dev-nginx -owide

# => NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

# deployment.apps/dev-nginx 2/2 2 2 38s nginx nginx:1.26.2 app=dev-nginx

# ...

# 👉 The replica count is now 2, so there are two Pods, and version 1.26.2 has been applied.

$ curl http://127.0.0.1:30000

# => <!DOCTYPE html>

# <html>

# <head>

# <title>Welcome to Nginx!</title>

# </head>

# <body>

# <h1>Hello, Kubernetes!</h1>

# <p>Nginx version 1.26.2</p>

# </body>

# </html>

$ curl -s http://127.0.0.1:30000 | grep version

# => <p>Nginx version 1.26.2</p>

$ open http://127.0.0.1:30000
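
The `curl ... | grep version` check above can be tightened into a small helper that prints only the version number, which is easier to compare in a script. This is a minimal sketch: `page_version` is a hypothetical name, and it assumes the page contains the text `Nginx version <number>` as in the `indexHtml` value used here.

```shell
# Hypothetical helper: read an HTML page on stdin and print the
# version number that follows the text "Nginx version".
page_version() {
  sed -n 's/.*Nginx version \([0-9][0-9.]*\).*/\1/p'
}

# Example (requires the release to be running):
# curl -s http://127.0.0.1:30000 | page_version
```

Because the helper only reads stdin, it can be tried against a saved copy of the page as well as against the live NodePort.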

# Uninstall after verifying

$ helm uninstall dev-nginx

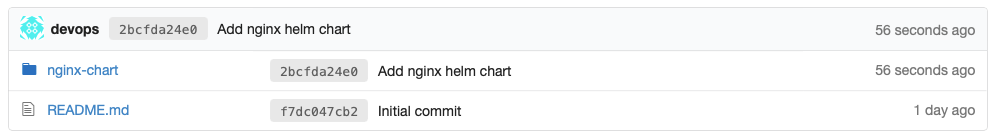

Deploying the nginx Helm chart in the repo (ops-deploy) with Argo CD, part 1

- Git setup

#

$ mkdir cicd-labs

$ cd cicd-labs

$ git clone http://10.0.4.3:3000/devops/ops-deploy.git

# => Cloning into 'ops-deploy'...

# remote: Enumerating objects: 3, done.

# remote: Counting objects: 100% (3/3), done.

# remote: Total 3 (delta 0), reused 0 (delta 0), pack-reused 0

# Unpacking objects: 100% (3/3), 228 bytes | 114.00 KiB/s, done.

$ cd ops-deploy

#

$ git config user.name "devops"

$ git config user.email "a@a.com"

$ git config init.defaultBranch main

$ git config credential.helper store

#

$ VERSION=1.26.1

$ mkdir nginx-chart

$ mkdir nginx-chart/templates

$ cat > nginx-chart/VERSION <<EOF

$VERSION

EOF

$ cat > nginx-chart/templates/configmap.yaml <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ .Release.Name }}
data:
  index.html: |
{{ .Values.indexHtml | indent 4 }}
EOF

$ cat > nginx-chart/templates/deployment.yaml <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ .Release.Name }}
spec:
  replicas: {{ .Values.replicaCount }}
  selector:
    matchLabels:
      app: {{ .Release.Name }}
  template:
    metadata:
      labels:
        app: {{ .Release.Name }}
    spec:
      containers:
      - name: nginx
        image: {{ .Values.image.repository }}:{{ .Values.image.tag }}
        ports:
        - containerPort: 80
        volumeMounts:
        - name: index-html
          mountPath: /usr/share/nginx/html/index.html
          subPath: index.html
      volumes:
      - name: index-html
        configMap:
          name: {{ .Release.Name }}
EOF

$ cat > nginx-chart/templates/service.yaml <<EOF
apiVersion: v1
kind: Service
metadata:
  name: {{ .Release.Name }}
spec:
  selector:
    app: {{ .Release.Name }}
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 30000
  type: NodePort
EOF

$ cat > nginx-chart/values-dev.yaml <<EOF
indexHtml: |
  <!DOCTYPE html>
  <html>
  <head>
    <title>Welcome to Nginx!</title>
  </head>
  <body>
    <h1>Hello, Kubernetes!</h1>
    <p>DEV : Nginx version $VERSION</p>
  </body>
  </html>

image:
  repository: nginx
  tag: $VERSION

replicaCount: 1
EOF

$ cat > nginx-chart/values-prd.yaml <<EOF

indexHtml: |

<!DOCTYPE html>

<html>

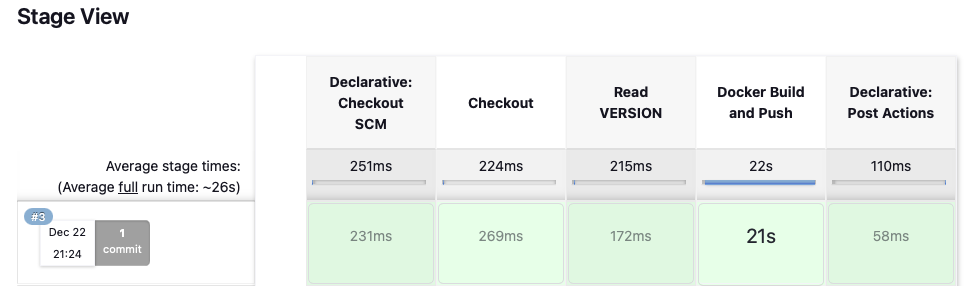

<head>

<title>Welcome to Nginx!</title>

</head>

<body>

<h1>Hello, Kubernetes!</h1>

<p>PRD : Nginx version $VERSION</p>

</body>

</html>

image:

repository: nginx

tag: $VERSION

replicaCount: 2

EOF

$ cat > nginx-chart/Chart.yaml <<EOF

apiVersion: v2

name: nginx-chart

description: A Helm chart for deploying Nginx with custom index.html

type: application

version: 1.0.0

appVersion: "$VERSION"

EOF

$ tree nginx-chart

# => nginx-chart

# ├── Chart.yaml

# ├── VERSION

# ├── templates

# │ ├── configmap.yaml

# │ ├── deployment.yaml

# │ └── service.yaml

# ├── values-dev.yaml

# └── values-prd.yaml
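
The dev and prd values files differ only in the label, the version, and the replica count, so they can be generated from one template instead of two near-identical heredocs. The following is a sketch under that assumption; `write_values` and its argument order are hypothetical and not part of the original lab.

```shell
# Hypothetical helper: write one values file for a given environment.
# usage: write_values <env-label> <version> <replicas> <output-file>
write_values() {
  cat > "$4" <<EOF
indexHtml: |
  <!DOCTYPE html>
  <html>
  <head>
    <title>Welcome to Nginx!</title>
  </head>
  <body>
    <h1>Hello, Kubernetes!</h1>
    <p>$1 : Nginx version $2</p>
  </body>
  </html>

image:
  repository: nginx
  tag: $2

replicaCount: $3
EOF
}

# Example (run inside ops-deploy):
# write_values DEV "$VERSION" 1 nginx-chart/values-dev.yaml
# write_values PRD "$VERSION" 2 nginx-chart/values-prd.yaml
```

Keeping the version in one place makes the later version bump a matter of changing `VERSION` and regenerating both files.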

#

$ git status && git add . && git commit -m "Add nginx helm chart" && git push -u origin main

# => On branch main

# Your branch is up to date with 'origin/main'.

#

# Untracked files:

# (use "git add <file>..." to include in what will be committed)

# nginx-chart/

#

# nothing added to commit but untracked files present (use "git add" to track)

# [main 2bcfda2] Add nginx helm chart

# 7 files changed, 88 insertions(+)

# create mode 100644 nginx-chart/Chart.yaml

# create mode 100644 nginx-chart/VERSION

# create mode 100644 nginx-chart/templates/configmap.yaml

# create mode 100644 nginx-chart/templates/deployment.yaml

# create mode 100644 nginx-chart/templates/service.yaml

# create mode 100644 nginx-chart/values-dev.yaml

# create mode 100644 nginx-chart/values-prd.yaml

# Enumerating objects: 12, done.

# Counting objects: 100% (12/12), done.

# Delta compression using up to 8 threads

# Compressing objects: 100% (10/10), done.

# Writing objects: 100% (11/11), 1.44 KiB | 1.44 MiB/s, done.

# Total 11 (delta 1), reused 0 (delta 0), pack-reused 0

# To http://10.0.4.3:3000/devops/ops-deploy.git

# f7dc047..2bcfda2 main -> main

# branch 'main' set up to track 'origin/main'.

Registering the App in Argo CD

- In Argo CD, click Applications > New App to register the application.

- GENERAL

  - App Name : dev-nginx

  - Project Name : default

  - SYNC POLICY : Manual

  - SYNC OPTIONS : AUTO-CREATE NAMESPACE (checked)

- Source

  - Repo URL : <select the one already configured> (http://10.0.4.3:3000/devops/ops-deploy)

  - Revision : HEAD

  - PATH : nginx-chart

- DESTINATION

  - Cluster URL : <default>

  - NAMESPACE : dev-nginx

- HELM

  - Values files : values-dev.yaml => after filling this in, click CREATE at the top
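
The same registration can also be expressed declaratively as an Argo CD `Application` resource instead of through the UI form. This is a sketch that mirrors the fields above; the file name `dev-nginx-app.yaml` is hypothetical, and `https://kubernetes.default.svc` is assumed to be the default in-cluster URL that the form pre-selects. Leaving out `syncPolicy.automated` corresponds to the Manual sync policy.

```shell
# Sketch: write an Argo CD Application manifest that mirrors the UI form.
# The file name is an assumption made for this example.
cat > dev-nginx-app.yaml <<EOF
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: dev-nginx
  namespace: argocd
spec:
  project: default
  source:
    repoURL: http://10.0.4.3:3000/devops/ops-deploy
    targetRevision: HEAD
    path: nginx-chart
    helm:
      valueFiles:
        - values-dev.yaml
  destination:
    server: https://kubernetes.default.svc
    namespace: dev-nginx
  syncPolicy:
    syncOptions:
      - CreateNamespace=true
EOF

# To register it (requires access to the cluster):
# kubectl apply -f dev-nginx-app.yaml
```

Keeping this manifest in git alongside the chart lets the application definition itself be reviewed and versioned.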

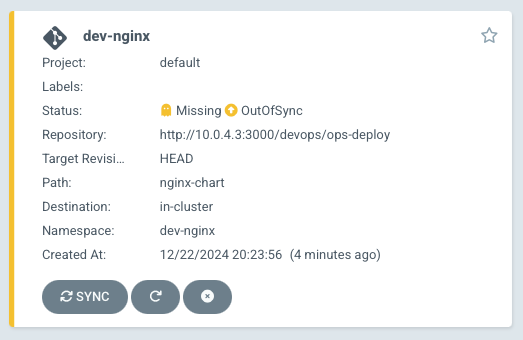

Argo CD Application right after registration

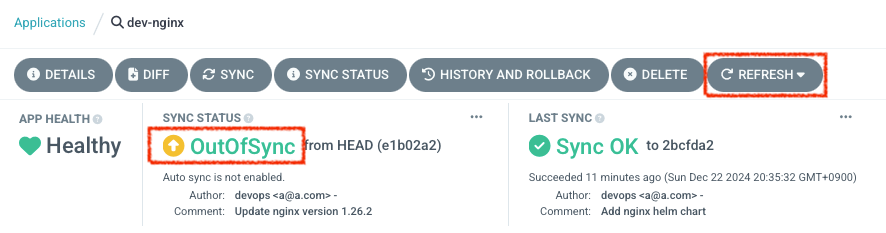

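- Right after registration, the manifests stored in git differ from the current state of k8s, so the application is shown as OutOfSync.

- Clicking dev-nginx shows how the resources are laid out and which of them are OutOfSync.

- Let's check the application's deployment status with the following commands.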

#

$ kubectl get applications -n argocd

# => NAME SYNC STATUS HEALTH STATUS

# dev-nginx OutOfSync Missing

$ kubectl describe applications -n argocd dev-nginx

# => ...

# Events:

# Type Reason Age From Message

# ---- ------ ---- ---- -------

# Normal ResourceCreated 7m38s argocd-server admin created application

# Normal ResourceUpdated 7m argocd-application-controller Updated sync status: -> Unknown

# Normal ResourceUpdated 6m58s argocd-application-controller Updated health status: -> Healthy

# Normal ResourceUpdated 3m35s argocd-application-controller Updated sync status: Unknown -> OutOfSync

# Normal ResourceUpdated 3m35s argocd-application-controller Updated health status: Healthy -> Missing

# Keep polling the endpoint

$ while true; do curl -s --connect-timeout 1 http://127.0.0.1:30000 ; date ; echo "------------" ; sleep 1 ; done

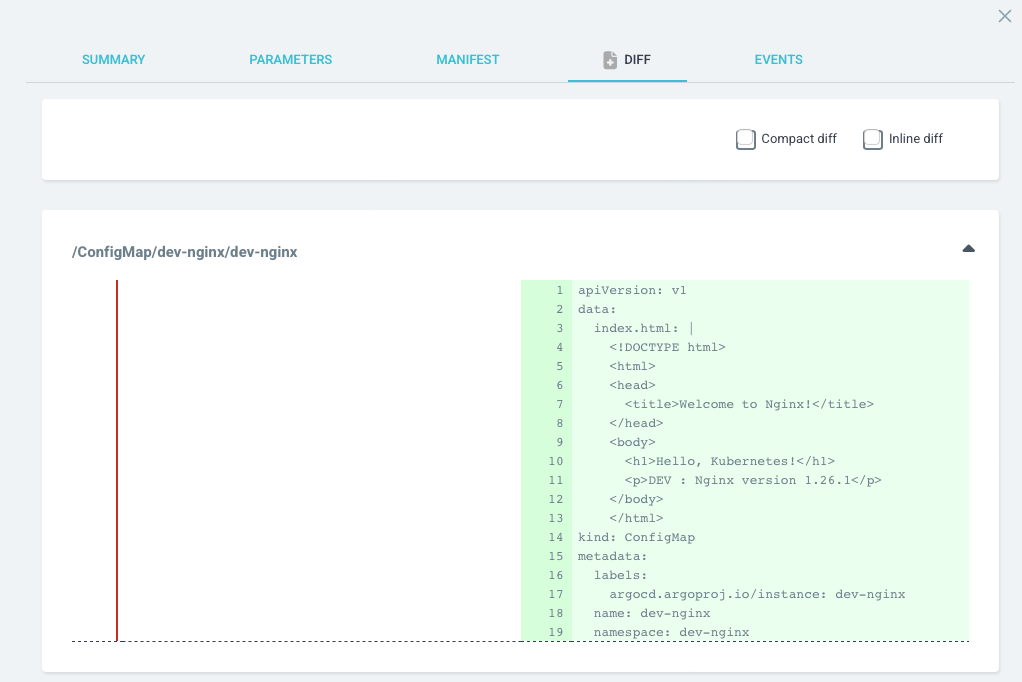

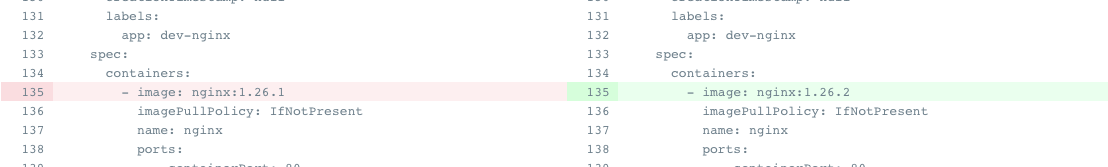

- Clicking the DIFF button in Argo CD compares the current state with the state stored in git.

Argo CD Application DIFF view

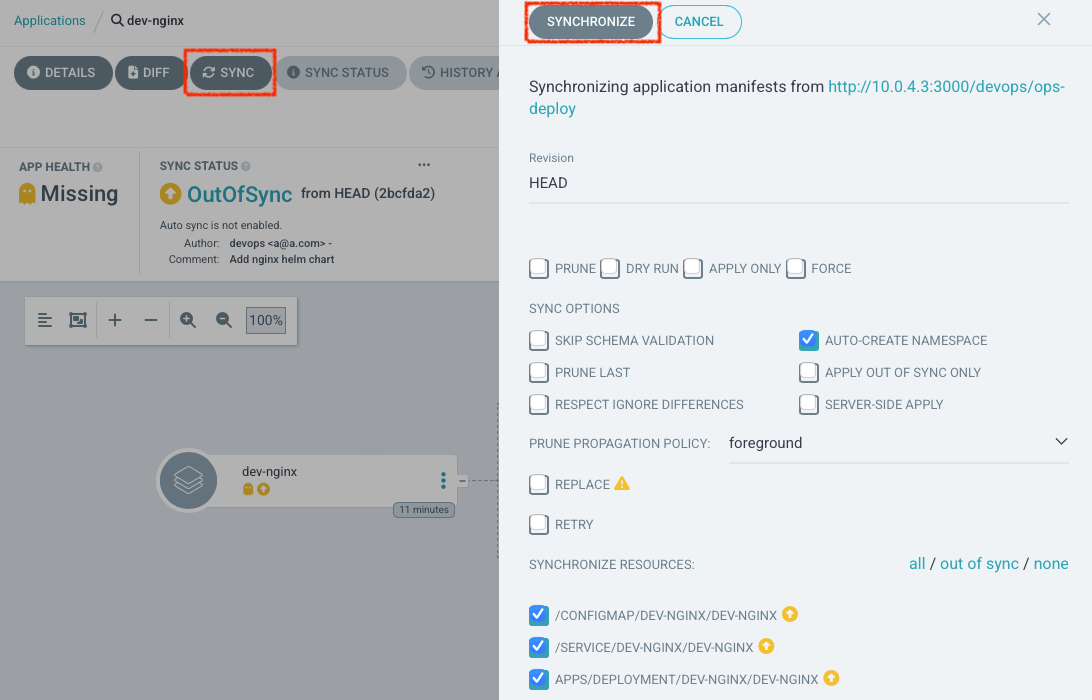

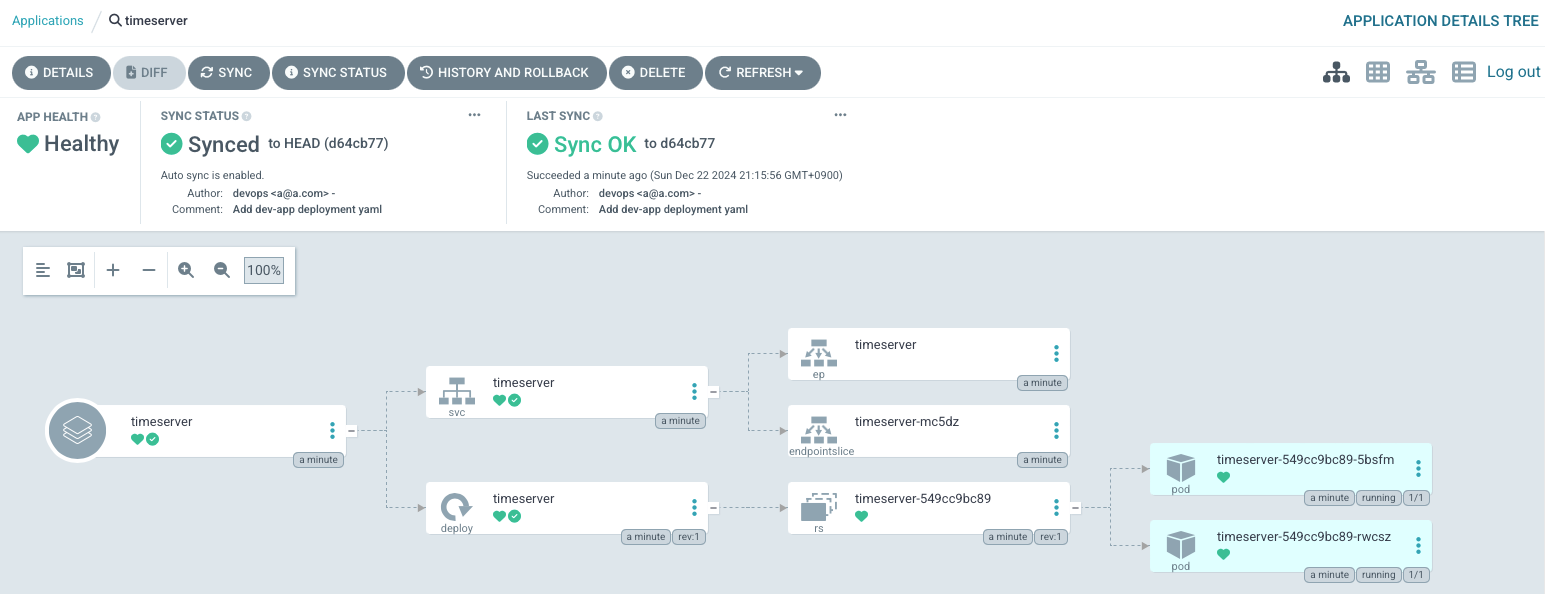

- Click the SYNC button to synchronize the k8s state with the state declared in the git manifests.

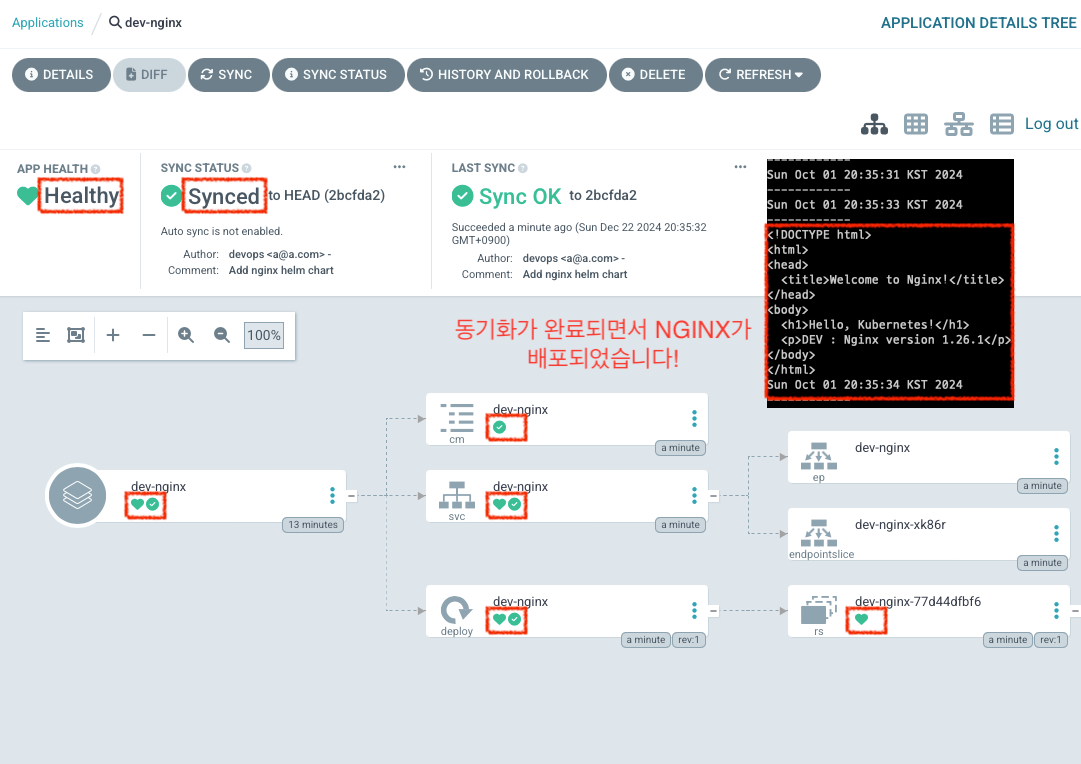

- When the sync completes, the yellow arrow icon is replaced by a green check mark and the status changes to Synced. At the same time, NGINX is deployed to Kubernetes.

Argo CD Application after the sync completes
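
The transition to Synced can also be observed from the command line instead of the UI. The sketch below is a generic polling helper; `wait_until` is a hypothetical name, and the usage line assumes the Application reports its sync state at `.status.sync.status`, which is the field shown in the SYNC STATUS column of `kubectl get applications`.

```shell
# Hypothetical helper: run a command once per second until its output
# equals the expected string, or give up after <retries> attempts.
# usage: wait_until <expected> <retries> <command> [args...]
wait_until() {
  expected=$1
  retries=$2
  shift 2
  attempt=0
  while [ "$attempt" -lt "$retries" ]; do
    # A failing command is treated as "no answer yet".
    actual=$("$@" 2>/dev/null) || actual=""
    if [ "$actual" = "$expected" ]; then
      return 0
    fi
    attempt=$((attempt + 1))
    sleep 1
  done
  return 1
}

# Example (requires access to the cluster):
# wait_until Synced 60 kubectl get applications -n argocd dev-nginx \
#   -o jsonpath='{.status.sync.status}'
```

The helper returns a non-zero status on timeout, so it can gate a later step such as the `curl` check against the NodePort.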

Verifying that a code change is reflected

#

$ VERSION=1.26.2

$ cat > nginx-chart/VERSION <<EOF

$VERSION

EOF

$ cat > nginx-chart/values-dev.yaml <<EOF
indexHtml: |
  <!DOCTYPE html>
  <html>
  <head>
    <title>Welcome to Nginx!</title>
  </head>
  <body>
    <h1>Hello, Kubernetes!</h1>
    <p>DEV : Nginx version $VERSION</p>
  </body>
  </html>

image:
  repository: nginx
  tag: $VERSION

replicaCount: 2
EOF

$ cat > nginx-chart/values-prd.yaml <<EOF

indexHtml: |

<!DOCTYPE html>

<html>

<head>

<title>Welcome to Nginx!</title>

</head>

<body>

<h1>Hello, Kubernetes!</h1>

<p>PRD : Nginx version $VERSION</p>

</body>

</html>

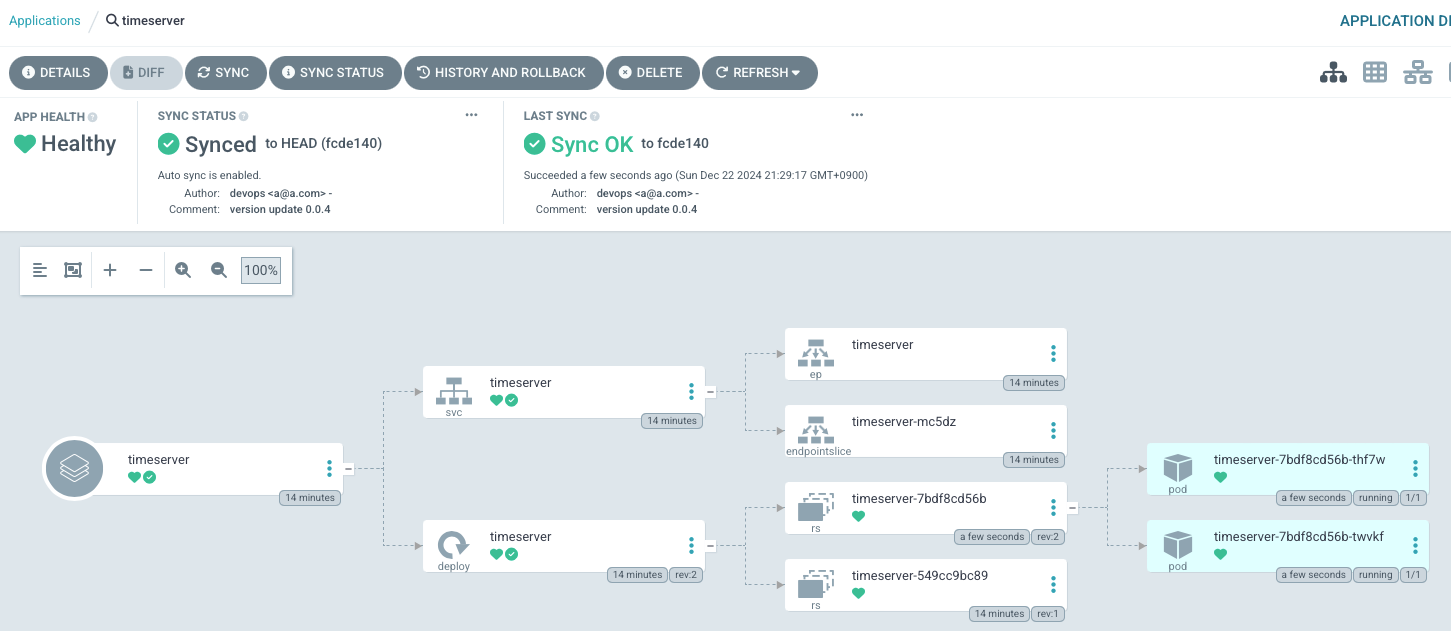

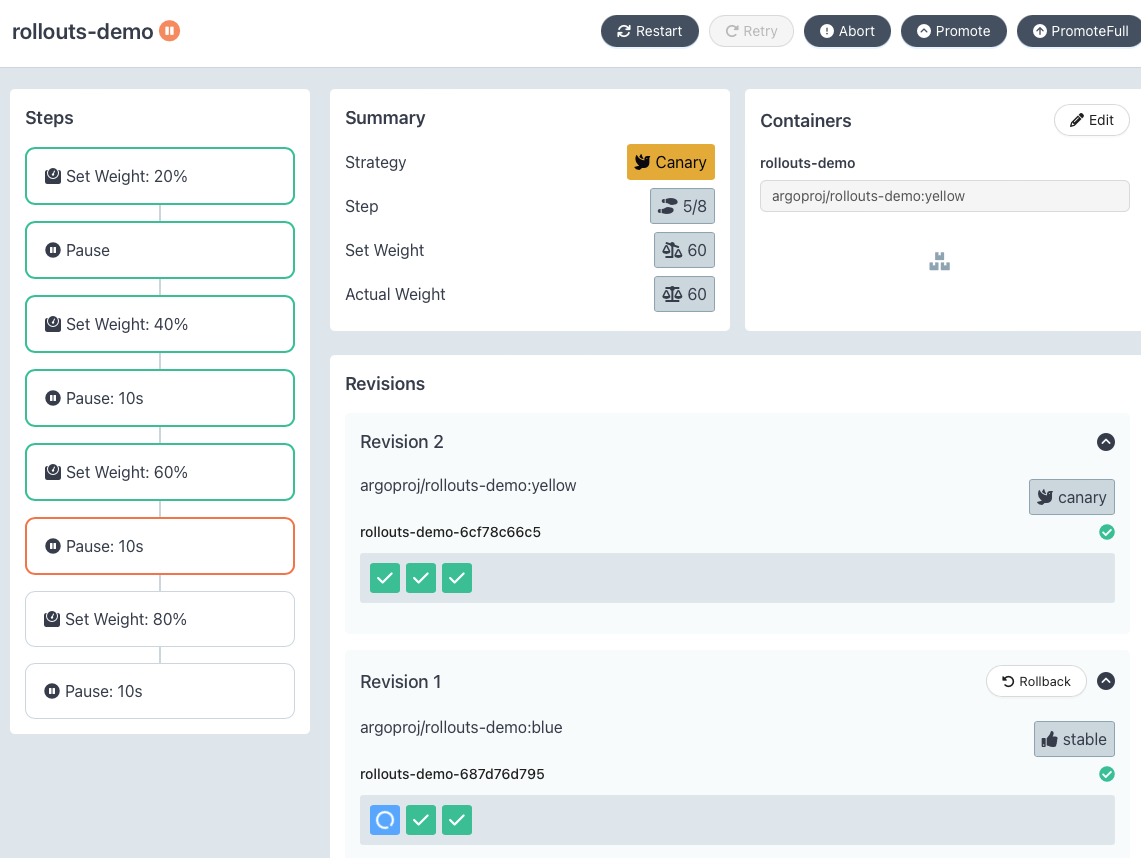

image: