[Cilium] Setting Up the Lab Environment and Installing Cilium

Introduction

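After a long break, I'm starting a study group again. Like last time, it is run by the CloudNet@ team, and I'm grateful to have been able to join. This study works through hands-on labs based on the official Cilium documentation. Since the whole study is devoted to a single topic, Cilium, I'm looking forward to learning it in real depth.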

In the first week, we set up the lab environment and look at how to install Cilium.

Lab Environment Setup

Preparing the Lab Environment

Since I'm on macOS, I installed VirtualBox and Vagrant with Homebrew.

- Install VirtualBox

$ brew install --cask virtualbox

# => 🍺 virtualbox was successfully installed!

$ VBoxManage --version

# => 7.1.10r169112

- Install Vagrant

$ brew install --cask vagrant

# => 🍺 vagrant was successfully installed!

$ vagrant version

# => Installed Version: 2.4.7

# Latest Version: 2.4.7

#

# You're running an up-to-date version of Vagrant!

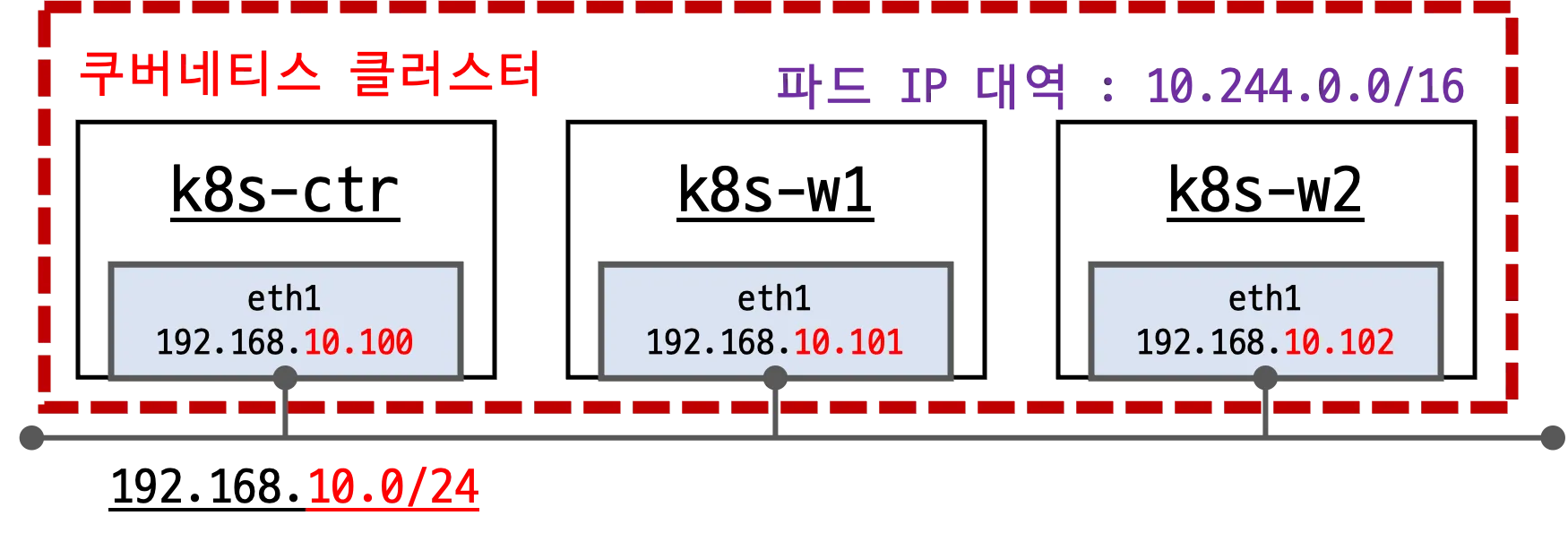

Lab Environment Overview

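The lab environment is laid out as follows.

- The deployed virtual machines are the control plane node k8s-ctr and the worker nodes k8s-w1 and k8s-w2.

- eth0 : 10.0.2.15 (identical on every node, because each VM gets its own VirtualBox NAT network)

- eth1 : 192.168.10.100~102 (private network: k8s-ctr .100, k8s-w1 .101, k8s-w2 .102)

- During initial provisioning, kubeadm init and kubeadm join build the cluster; no CNI is installed at this point.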

Writing the Lab Deployment Files

Vagrantfile

- Defines the virtual machines and the provisioning scripts they run at boot.

# Variables

K8SV = '1.33.2-1.1' # Kubernetes Version : apt list -a kubelet , ex) 1.32.5-1.1

CONTAINERDV = '1.7.27-1' # Containerd Version : apt list -a containerd.io , ex) 1.6.33-1

N = 2 # max number of worker nodes

# Base Image https://portal.cloud.hashicorp.com/vagrant/discover/bento/ubuntu-24.04

## Rocky linux Image https://portal.cloud.hashicorp.com/vagrant/discover/rockylinux

BOX_IMAGE = "bento/ubuntu-24.04"

BOX_VERSION = "202502.21.0"

Vagrant.configure("2") do |config|

#-ControlPlane Node

config.vm.define "k8s-ctr" do |subconfig|

subconfig.vm.box = BOX_IMAGE

subconfig.vm.box_version = BOX_VERSION

subconfig.vm.provider "virtualbox" do |vb|

vb.customize ["modifyvm", :id, "--groups", "/Cilium-Lab"]

vb.customize ["modifyvm", :id, "--nicpromisc2", "allow-all"]

vb.name = "k8s-ctr"

vb.cpus = 2

vb.memory = 2048

vb.linked_clone = true

end

subconfig.vm.host_name = "k8s-ctr"

subconfig.vm.network "private_network", ip: "192.168.10.100"

subconfig.vm.network "forwarded_port", guest: 22, host: 60000, auto_correct: true, id: "ssh"

subconfig.vm.synced_folder "./", "/vagrant", disabled: true

subconfig.vm.provision "shell", path: "init_cfg.sh", args: [ K8SV, CONTAINERDV]

subconfig.vm.provision "shell", path: "k8s-ctr.sh", args: [ N ]

end

#-Worker Nodes Subnet1

(1..N).each do |i|

config.vm.define "k8s-w#{i}" do |subconfig|

subconfig.vm.box = BOX_IMAGE

subconfig.vm.box_version = BOX_VERSION

subconfig.vm.provider "virtualbox" do |vb|

vb.customize ["modifyvm", :id, "--groups", "/Cilium-Lab"]

vb.customize ["modifyvm", :id, "--nicpromisc2", "allow-all"]

vb.name = "k8s-w#{i}"

vb.cpus = 2

vb.memory = 1536

vb.linked_clone = true

end

subconfig.vm.host_name = "k8s-w#{i}"

subconfig.vm.network "private_network", ip: "192.168.10.10#{i}"

subconfig.vm.network "forwarded_port", guest: 22, host: "6000#{i}", auto_correct: true, id: "ssh"

subconfig.vm.synced_folder "./", "/vagrant", disabled: true

subconfig.vm.provision "shell", path: "init_cfg.sh", args: [ K8SV, CONTAINERDV]

subconfig.vm.provision "shell", path: "k8s-w.sh"

end

end

end
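The Vagrantfile builds each worker's private IP by string concatenation ("192.168.10.10#{i}"), and k8s-ctr.sh does the same when it writes /etc/hosts. That works for this lab's two workers, but would yield an invalid address such as 192.168.10.1010 once N reaches 10. Below is a minimal sketch of the same addressing plan using arithmetic instead; the function name lab_hosts is hypothetical and not part of the lab scripts.

```shell
#!/usr/bin/env bash
# Hypothetical helper mirroring the lab's addressing plan:
#   k8s-ctr -> 192.168.10.100, k8s-w<i> -> 192.168.10.(100+i)
# Prints /etc/hosts-style lines for a cluster with N worker nodes.
lab_hosts() {
  local n="$1" i
  echo "192.168.10.100 k8s-ctr"
  for (( i = 1; i <= n; i++ )); do
    # Arithmetic instead of string concatenation ("10$i") keeps i >= 10 valid.
    echo "192.168.10.$((100 + i)) k8s-w$i"
  done
}

lab_hosts 2
```

For N=2 this prints the same three entries that k8s-ctr.sh appends to /etc/hosts.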

init_cfg.sh

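- This is the initial configuration script Vagrant runs during provisioning. It receives the Kubernetes and containerd versions as arguments and installs exactly those versions, along with the base packages and settings the nodes need.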

#!/usr/bin/env bash

echo ">>>> Initial Config Start <<<<"

echo "[TASK 1] Setting Profile & Change Timezone"

echo 'alias vi=vim' >> /etc/profile

echo "sudo su -" >> /home/vagrant/.bashrc

ln -sf /usr/share/zoneinfo/Asia/Seoul /etc/localtime

echo "[TASK 2] Disable UFW & AppArmor"

systemctl stop ufw && systemctl disable ufw >/dev/null 2>&1

systemctl stop apparmor && systemctl disable apparmor >/dev/null 2>&1

echo "[TASK 3] Disable and turn off SWAP"

swapoff -a && sed -i '/swap/s/^/#/' /etc/fstab

echo "[TASK 4] Install Packages"

apt update -qq >/dev/null 2>&1

apt-get install apt-transport-https ca-certificates curl gpg -y -qq >/dev/null 2>&1

# Download the public signing key for the Kubernetes package repositories.

mkdir -p -m 755 /etc/apt/keyrings

K8SMMV=$(echo $1 | sed -En 's/^([0-9]+\.[0-9]+)\..*/\1/p')

curl -fsSL https://pkgs.k8s.io/core:/stable:/v$K8SMMV/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v$K8SMMV/deb/ /" >> /etc/apt/sources.list.d/kubernetes.list

curl -fsSL https://download.docker.com/linux/ubuntu/gpg -o /etc/apt/keyrings/docker.asc

echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu $(. /etc/os-release && echo "$VERSION_CODENAME") stable" | tee /etc/apt/sources.list.d/docker.list > /dev/null

# enable IPv4 packet forwarding (required to route pod traffic between nodes)

echo 1 > /proc/sys/net/ipv4/ip_forward

echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.d/k8s.conf

# enable br_netfilter for iptables

modprobe br_netfilter

modprobe overlay

echo "br_netfilter" >> /etc/modules-load.d/k8s.conf

echo "overlay" >> /etc/modules-load.d/k8s.conf

echo "[TASK 5] Install Kubernetes components (kubeadm, kubelet and kubectl)"

# Update the apt package index, install kubelet, kubeadm and kubectl, and pin their version

apt update >/dev/null 2>&1

# apt list -a kubelet ; apt list -a containerd.io

apt-get install -y kubelet=$1 kubectl=$1 kubeadm=$1 containerd.io=$2 >/dev/null 2>&1

apt-mark hold kubelet kubeadm kubectl >/dev/null 2>&1

# containerd configure to default and cgroup managed by systemd

containerd config default > /etc/containerd/config.toml

sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml

# avoid WARN&ERRO(default endpoints) when crictl run

cat <<EOF > /etc/crictl.yaml

runtime-endpoint: unix:///run/containerd/containerd.sock

image-endpoint: unix:///run/containerd/containerd.sock

EOF

# ready to install for k8s

systemctl restart containerd && systemctl enable containerd

systemctl enable --now kubelet

echo "[TASK 6] Install Packages & Helm"

apt-get install -y bridge-utils sshpass net-tools conntrack ngrep tcpdump ipset arping wireguard jq tree bash-completion unzip kubecolor >/dev/null 2>&1

curl -s https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3 | bash >/dev/null 2>&1

echo ">>>> Initial Config End <<<<"
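init_cfg.sh trims the full package version passed as $1 down to major.minor because pkgs.k8s.io publishes a separate apt repository per Kubernetes minor version. The sketch below isolates that step with the same sed expression so it can be checked on its own; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Derive major.minor (e.g. 1.33) from a full apt package version such as 1.33.2-1.1,
# using the same sed expression as init_cfg.sh. Prints nothing if the input does not match.
k8s_minor_version() {
  echo "$1" | sed -En 's/^([0-9]+\.[0-9]+)\..*/\1/p'
}

# Build the pkgs.k8s.io apt repository URL for that minor version.
k8s_repo_url() {
  echo "https://pkgs.k8s.io/core:/stable:/v$(k8s_minor_version "$1")/deb/"
}

k8s_repo_url "1.33.2-1.1"   # https://pkgs.k8s.io/core:/stable:/v1.33/deb/
```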

k8s-ctr.sh

- Initializes the control plane with kubeadm init and sets up convenience aliases such as k and kc.

#!/usr/bin/env bash

echo ">>>> K8S Controlplane config Start <<<<"

echo "[TASK 1] Initial Kubernetes"

kubeadm init --token 123456.1234567890123456 --token-ttl 0 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/16 --apiserver-advertise-address=192.168.10.100 --cri-socket=unix:///run/containerd/containerd.sock >/dev/null 2>&1

echo "[TASK 2] Setting kube config file"

mkdir -p /root/.kube

cp -i /etc/kubernetes/admin.conf /root/.kube/config

chown $(id -u):$(id -g) /root/.kube/config

echo "[TASK 3] Source the completion"

echo 'source <(kubectl completion bash)' >> /etc/profile

echo 'source <(kubeadm completion bash)' >> /etc/profile

echo "[TASK 4] Alias kubectl to k"

echo 'alias k=kubectl' >> /etc/profile

echo 'alias kc=kubecolor' >> /etc/profile

echo 'complete -F __start_kubectl k' >> /etc/profile

echo "[TASK 5] Install Kubectx & Kubens"

git clone https://github.com/ahmetb/kubectx /opt/kubectx >/dev/null 2>&1

ln -s /opt/kubectx/kubens /usr/local/bin/kubens

ln -s /opt/kubectx/kubectx /usr/local/bin/kubectx

echo "[TASK 6] Install Kubeps & Setting PS1"

git clone https://github.com/jonmosco/kube-ps1.git /root/kube-ps1 >/dev/null 2>&1

cat <<"EOT" >> /root/.bash_profile

source /root/kube-ps1/kube-ps1.sh

KUBE_PS1_SYMBOL_ENABLE=true

function get_cluster_short() {

echo "$1" | cut -d . -f1

}

KUBE_PS1_CLUSTER_FUNCTION=get_cluster_short

KUBE_PS1_SUFFIX=') '

PS1='$(kube_ps1)'$PS1

EOT

kubectl config rename-context "kubernetes-admin@kubernetes" "HomeLab" >/dev/null 2>&1

echo "[TASK 7] Setting /etc/hosts"

echo "192.168.10.100 k8s-ctr" >> /etc/hosts

for (( i=1; i<=$1; i++ )); do echo "192.168.10.10$i k8s-w$i" >> /etc/hosts; done

echo ">>>> K8S Controlplane Config End <<<<"

k8s-w.sh

- Runs kubeadm join on each worker node to join it to the control plane.

#!/usr/bin/env bash

echo ">>>> K8S Node config Start <<<<"

echo "[TASK 1] K8S Controlplane Join"

kubeadm join --token 123456.1234567890123456 --discovery-token-unsafe-skip-ca-verification 192.168.10.100:6443 >/dev/null 2>&1

echo ">>>> K8S Node config End <<<<"

Deploying the Lab Environment

- Now that the deployment files are ready, deploy the virtual machines with the vagrant up command.

$ vagrant up

# => Bringing machine 'k8s-ctr' up with 'virtualbox' provider...

# Bringing machine 'k8s-w1' up with 'virtualbox' provider...

# Bringing machine 'k8s-w2' up with 'virtualbox' provider...

# ==> k8s-ctr: Box 'bento/ubuntu-24.04' could not be found. Attempting to find and install...

# k8s-ctr: Box Provider: virtualbox

# k8s-ctr: Box Version: 202502.21.0

# ==> k8s-ctr: Loading metadata for box 'bento/ubuntu-24.04'

# k8s-ctr: URL: https://vagrantcloud.com/api/v2/vagrant/bento/ubuntu-24.04

# ==> k8s-ctr: Adding box 'bento/ubuntu-24.04' (v202502.21.0) for provider: virtualbox (arm64)

# k8s-ctr: Downloading: https://vagrantcloud.com/bento/boxes/ubuntu-24.04/versions/202502.21.0/providers/virtualbox/arm64/vagrant.box

# ==> k8s-ctr: Successfully added box 'bento/ubuntu-24.04' (v202502.21.0) for 'virtualbox (arm64)'!

# ==> k8s-ctr: Preparing master VM for linked clones...

# ...

# k8s-w2: >>>> K8S Node config End <<<<

- After deployment, connect to each node over SSH and check its IP address.

$ for i in ctr w1 w2 ; do echo ">> node : k8s-$i <<"; vagrant ssh k8s-$i -c 'ip -c -4 addr show dev eth0'; echo; done #

# => >> node : k8s-ctr <<

# 2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

# altname enp0s8

# inet 10.0.2.15/24 metric 100 brd 10.0.2.255 scope global dynamic eth0

# valid_lft 85500sec preferred_lft 85500sec

#

# >> node : k8s-w1 <<

# 2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

# altname enp0s8

# inet 10.0.2.15/24 metric 100 brd 10.0.2.255 scope global dynamic eth0

# valid_lft 85707sec preferred_lft 85707sec

#

# >> node : k8s-w2 <<

# 2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

# altname enp0s8

# inet 10.0.2.15/24 metric 100 brd 10.0.2.255 scope global dynamic eth0

# valid_lft 85781sec preferred_lft 85781sec

- Connect to the k8s-ctr node and check its basic information.

$ vagrant ssh k8s-ctr

---

# => Welcome to Ubuntu 24.04.2 LTS (GNU/Linux 6.8.0-53-generic aarch64)

# ...

# (⎈|HomeLab:N/A) root@k8s-ctr:~#

$ whoami

# => root

$ pwd

# => /root

$ hostnamectl

# => Static hostname: k8s-ctr

# Icon name: computer-vm

# Chassis: vm

# Machine ID: 3d6bd65db7dd43d392b2d5229abb5654

# Boot ID: 2d9ede04fd294425988e58c588dd201c

# Virtualization: qemu

# Operating System: Ubuntu 24.04.2 LTS

# Kernel: Linux 6.8.0-53-generic

# Architecture: arm64

$ htop

$ cat /etc/hosts

# => 127.0.0.1 localhost

# 127.0.1.1 vagrant

# ...

# 127.0.2.1 k8s-ctr k8s-ctr

# 192.168.10.100 k8s-ctr

# 192.168.10.101 k8s-w1

# 192.168.10.102 k8s-w2

$ ping -c 1 k8s-w1

# => PING k8s-w1 (192.168.10.101) 56(84) bytes of data.

# 64 bytes from k8s-w1 (192.168.10.101): icmp_seq=1 ttl=64 time=0.795 ms

#

# --- k8s-w1 ping statistics ---

# 1 packets transmitted, 1 received, 0% packet loss, time 0ms

# rtt min/avg/max/mdev = 0.795/0.795/0.795/0.000 ms

$ ping -c 1 k8s-w2

# => PING k8s-w2 (192.168.10.102) 56(84) bytes of data.

# 64 bytes from k8s-w2 (192.168.10.102): icmp_seq=1 ttl=64 time=1.20 ms

# ...

$ sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-w1 hostname

# => k8s-w1

$ sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-w2 hostname

# => k8s-w2

# TCP connection info for a vagrant ssh session: NAT mode, 10.0.2.2 (gateway)

$ ss -tnp |grep sshd

# => ESTAB 0 0 [::ffff:10.0.2.15]:22 [::ffff:10.0.2.2]:63578 users:(("sshd",pid=5141,fd=4),("sshd",pid=5094,fd=4))

# NIC information

$ ip -c addr

# => 1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

# ...

# 2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

# link/ether 08:00:27:71:19:d8 brd ff:ff:ff:ff:ff:ff

# altname enp0s8

# inet 10.0.2.15/24 metric 100 brd 10.0.2.255 scope global dynamic eth0

# valid_lft 82445sec preferred_lft 82445sec

# 3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

# link/ether 08:00:27:da:24:93 brd ff:ff:ff:ff:ff:ff

# altname enp0s9

# inet 192.168.10.100/24 brd 192.168.10.255 scope global eth1

# valid_lft forever preferred_lft forever

# Routing information (including the default route)

$ ip -c route

# => default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

# 10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

# 10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.100

# DNS server information: NAT mode, 10.0.2.3

$ resolvectl

# => Global

# Protocols: -LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

# resolv.conf mode: stub

#

# Link 2 (eth0)

# Current Scopes: DNS

# Protocols: +DefaultRoute -LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

# Current DNS Server: 10.0.2.3

# DNS Servers: 10.0.2.3

#

# Link 3 (eth1)

# Current Scopes: none

# Protocols: -DefaultRoute -LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

$ exit

---

- Check Kubernetes information on k8s-ctr

# Check cluster information

$ kubectl cluster-info

# => Kubernetes control plane is running at https://192.168.10.100:6443

# CoreDNS is running at https://192.168.10.100:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

#

# To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

# Node information: check STATUS and INTERNAL-IP

$ kubectl get node -owide

# => NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

# k8s-ctr NotReady control-plane 2d v1.33.2 192.168.10.100 <none> Ubuntu 24.04.2 LTS 6.8.0-53-generic containerd://1.7.27

# k8s-w1 NotReady <none> 2d v1.33.2 10.0.2.15 <none> Ubuntu 24.04.2 LTS 6.8.0-53-generic containerd://1.7.27

# k8s-w2 NotReady <none> 2d v1.33.2 10.0.2.15 <none> Ubuntu 24.04.2 LTS 6.8.0-53-generic containerd://1.7.27

# Pod information: check STATUS and pod IPs (including kube-proxy)

$ kubectl get pod -A -owide

# => NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

# kube-system coredns-674b8bbfcf-79mbb 0/1 Pending 0 2d <none> <none> <none> <none>

# kube-system coredns-674b8bbfcf-rtx95 0/1 Pending 0 2d <none> <none> <none> <none>

# kube-system etcd-k8s-ctr 1/1 Running 1 (12m ago) 2d 192.168.10.100 k8s-ctr <none> <none>

# kube-system kube-apiserver-k8s-ctr 1/1 Running 1 (12m ago) 2d 192.168.10.100 k8s-ctr <none> <none>

# kube-system kube-controller-manager-k8s-ctr 1/1 Running 1 (12m ago) 2d 192.168.10.100 k8s-ctr <none> <none>

# kube-system kube-proxy-hdffr 1/1 Running 1 (11m ago) 2d 10.0.2.15 k8s-w1 <none> <none>

# kube-system kube-proxy-r96sz 1/1 Running 1 (12m ago) 2d 192.168.10.100 k8s-ctr <none> <none>

# kube-system kube-proxy-swgmb 1/1 Running 1 (11m ago) 2d 10.0.2.15 k8s-w2 <none> <none>

# kube-system kube-scheduler-k8s-ctr 1/1 Running 1 (12m ago) 2d 192.168.10.100 k8s-ctr <none> <none>

# Check the aliases (k = kubectl, kc = kubecolor) and the coredns pod status

$ k describe pod -n kube-system -l k8s-app=kube-dns

# => Name: coredns-674b8bbfcf-79mbb

# Namespace: kube-system

# Priority: 2000000000

# Priority Class Name: system-cluster-critical

# Service Account: coredns

# Node: <none>

# Labels: k8s-app=kube-dns

# pod-template-hash=674b8bbfcf

# Annotations: <none>

# Status: Pending

# IP:

# IPs: <none>

# Controlled By: ReplicaSet/coredns-674b8bbfcf

# Containers:

# coredns:

# Image: registry.k8s.io/coredns/coredns:v1.12.0

# Ports: 53/UDP, 53/TCP, 9153/TCP

# Host Ports: 0/UDP, 0/TCP, 0/TCP

# Args:

# -conf

# /etc/coredns/Corefile

# Limits:

# memory: 170Mi

# Requests:

# cpu: 100m

# memory: 70Mi

# Liveness: http-get http://:8080/health delay=60s timeout=5s period=10s #success=1 #failure=5

# Readiness: http-get http://:8181/ready delay=0s timeout=1s period=10s #success=1 #failure=3

# Environment: <none>

# Mounts:

# /etc/coredns from config-volume (ro)

# /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-vrqlj (ro)

# Conditions:

# Type Status

# PodScheduled False

# Volumes:

# config-volume:

# Type: ConfigMap (a volume populated by a ConfigMap)

# Name: coredns

# Optional: false

# kube-api-access-vrqlj:

# Type: Projected (a volume that contains injected data from multiple sources)

# TokenExpirationSeconds: 3607

# ConfigMapName: kube-root-ca.crt

# Optional: false

# DownwardAPI: true

# QoS Class: Burstable

# Node-Selectors: kubernetes.io/os=linux

# Tolerations: CriticalAddonsOnly op=Exists

# node-role.kubernetes.io/control-plane:NoSchedule

# node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

# node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

# Events:

# Type Reason Age From Message

# ---- ------ ---- ---- -------

# Warning FailedScheduling 7m18s (x2 over 12m) default-scheduler 0/3 nodes are available: 3 node(s) had untolerated taint {node.kubernetes.io/not-ready: }. preemption: 0/3 nodes are available: 3 Preemption is not helpful for scheduling.

# Warning FailedScheduling 47h(x12 over 2d) default-scheduler 0/3 nodes are available: 3 node(s) had untolerated taint {node.kubernetes.io/not-ready: }. preemption: 0/3 nodes are available: 3 Preemption is not helpful for scheduling.

# ...

$ kc describe pod -n kube-system -l k8s-app=kube-dns

- Change the INTERNAL-IP setting on k8s-ctr (pin the kubelet to its eth1 address with --node-ip)

#

$ cat /var/lib/kubelet/kubeadm-flags.env

# => KUBELET_KUBEADM_ARGS="--container-runtime-endpoint=unix:///run/containerd/containerd.sock --pod-infra-container-image=registry.k8s.io/pause:3.10"

# Change the INTERNAL-IP

$ NODEIP=$(ip -4 addr show eth1 | grep -oP '(?<=inet\s)\d+(\.\d+){3}')

$ sed -i "s/^\(KUBELET_KUBEADM_ARGS=\"\)/\1--node-ip=${NODEIP} /" /var/lib/kubelet/kubeadm-flags.env

$ systemctl daemon-reexec && systemctl restart kubelet

$ cat /var/lib/kubelet/kubeadm-flags.env

# => KUBELET_KUBEADM_ARGS="--node-ip=192.168.10.100 --container-runtime-endpoint=unix:///run/containerd/containerd.sock --pod-infra-container-image=registry.k8s.io/pause:3.10"

#

$ kubectl get node -owide

# => NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

# k8s-ctr NotReady control-plane 2d v1.33.2 192.168.10.100 <none> Ubuntu 24.04.2 LTS 6.8.0-53-generic containerd://1.7.27

# k8s-w1 NotReady <none> 2d v1.33.2 10.0.2.15 <none> Ubuntu 24.04.2 LTS 6.8.0-53-generic containerd://1.7.27

# k8s-w2 NotReady <none> 2d v1.33.2 10.0.2.15 <none> Ubuntu 24.04.2 LTS 6.8.0-53-generic containerd://1.7.27
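Running the sed command twice would prepend --node-ip a second time. A slightly safer variant wraps the same expression in a function that skips files that already contain --node-ip. This is a sketch assuming GNU sed (as on the Ubuntu VMs, where sed -i takes no suffix argument); add_node_ip is a hypothetical name, not part of the lab scripts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prepend --node-ip=<IP> to KUBELET_KUBEADM_ARGS in a kubeadm-flags.env file.
# Uses the same sed expression as the manual step; assumes GNU sed.
add_node_ip() {
  local file="$1" ip="$2"
  # Leave the file untouched if a node IP is already configured.
  if grep -q -- '--node-ip=' "$file"; then
    echo "node-ip already set in $file" >&2
    return 0
  fi
  sed -i "s/^\(KUBELET_KUBEADM_ARGS=\"\)/\1--node-ip=${ip} /" "$file"
}

# Usage on each node (as root), with that node's eth1 address:
#   add_node_ip /var/lib/kubelet/kubeadm-flags.env 192.168.10.101
#   systemctl restart kubelet
```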

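- Change the INTERNAL-IP on k8s-w1 and k8s-w2 to their 192.168.10.x addresses in the same way. Otherwise the workers keep reporting the NAT address 10.0.2.15, which is identical on every node.

- After configuring k8s-w1/w2, check the INTERNAL-IP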

$ kubectl get node -owide

# => NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

# k8s-ctr NotReady control-plane 2d v1.33.2 192.168.10.100 <none> Ubuntu 24.04.2 LTS 6.8.0-53-generic containerd://1.7.27

# k8s-w1 NotReady <none> 2d v1.33.2 192.168.10.101 <none> Ubuntu 24.04.2 LTS 6.8.0-53-generic containerd://1.7.27

# k8s-w2 NotReady <none> 2d v1.33.2 192.168.10.102 <none> Ubuntu 24.04.2 LTS 6.8.0-53-generic containerd://1.7.27

$ kubectl get pod -A -owide

# => NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

# kube-system coredns-674b8bbfcf-79mbb 0/1 Pending 0 2d <none> <none> <none> <none>

# kube-system coredns-674b8bbfcf-rtx95 0/1 Pending 0 2d <none> <none> <none> <none>

# kube-system etcd-k8s-ctr 1/1 Running 1 (27m ago) 2d 192.168.10.100 k8s-ctr <none> <none>

# kube-system kube-apiserver-k8s-ctr 1/1 Running 1 (27m ago) 2d 192.168.10.100 k8s-ctr <none> <none>

# kube-system kube-controller-manager-k8s-ctr 1/1 Running 1 (27m ago) 2d 192.168.10.100 k8s-ctr <none> <none>

# kube-system kube-proxy-hdffr 1/1 Running 1 (26m ago) 2d 192.168.10.101 k8s-w1 <none> <none>

# kube-system kube-proxy-r96sz 1/1 Running 1 (27m ago) 2d 192.168.10.100 k8s-ctr <none> <none>

# kube-system kube-proxy-swgmb 1/1 Running 1 (26m ago) 2d 192.168.10.102 k8s-w2 <none> <none>

# kube-system kube-scheduler-k8s-ctr 1/1 Running 1 (27m ago) 2d 192.168.10.100 k8s-ctr <none> <none>

- Static pod IP settings on k8s-ctr

#

$ tree /etc/kubernetes/manifests

# => /etc/kubernetes/manifests

# ├── etcd.yaml

# ├── kube-apiserver.yaml

# ├── kube-controller-manager.yaml

# └── kube-scheduler.yaml

# Check the etcd configuration

$ cat /etc/kubernetes/manifests/etcd.yaml

# => ...

# volumes:

# - hostPath:

# path: /etc/kubernetes/pki/etcd

# type: DirectoryOrCreate

# name: etcd-certs

# - hostPath:

# path: /var/lib/etcd

# type: DirectoryOrCreate

# name: etcd-data

# ...

$ tree /var/lib/etcd/

# => /var/lib/etcd/

# └── member

# ├── snap

# │ ├── 0000000000000003-0000000000002711.snap

# │ └── db

# └── wal

# ├── 0000000000000000-0000000000000000.wal

# └── 0.tmp

# Reboot k8s-ctr

$ reboot

Flannel CNI

Introducing Flannel

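- Flannel is one of the simplest and easiest-to-use overlay network plugins that satisfy Kubernetes' networking requirements.

- Flannel creates a virtual network that enables pod-to-pod communication and supports several backends, including VXLAN, UDP, and host-gw. Of these, VXLAN is the recommended choice.

- VXLAN (Virtual eXtensible Local Area Network) builds a logical virtual network on top of the physical network; nodes communicate through tunnels over UDP port 8472 (Flannel's default on Linux).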

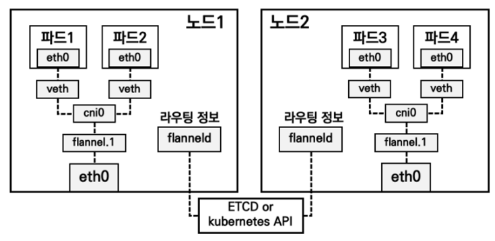

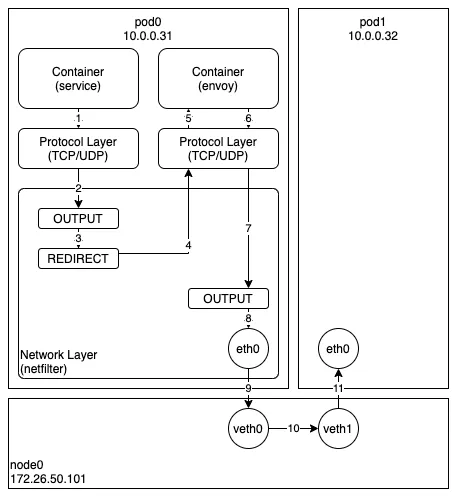

Flannel architecture (source: to be added)

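- As the figures show, a pod's eth0 interface is connected to a veth interface in the host network namespace, and that veth is attached to the cni0 bridge.

- Pods on the same node communicate through the cni0 bridge, while traffic to other nodes goes through VXLAN.

- On the VXLAN path, a packet leaves the pod through cni0 and is routed to the flannel.1 interface, which sends it to the other node through the host's physical interface (by default the interface of the default route, eth0; this lab overrides it with --iface=eth1). Here flannel.1 acts as the VTEP (VXLAN Tunnel End Point): it encapsulates the packet and sends it to the destination node's IP, and the receiving node decapsulates it and delivers it to the target pod.

- Each node owns a pod IP range (subnet) that it allocates to its pods. Flannel publishes this information through etcd or the Kubernetes API, and every node updates its routing table based on it. This lets pods on different nodes reach each other directly by their internal IP addresses.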
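VXLAN encapsulation adds headers to every packet, so the overlay interface's MTU has to be smaller than the underlay's. As a worked example, assuming an IPv4 underlay without VLAN tags, the overhead is the encapsulated inner Ethernet header (14 bytes) plus the VXLAN header (8), the outer UDP header (8), and the outer IPv4 header (20), i.e. 50 bytes. The shell arithmetic below (vxlan_mtu is a hypothetical name) gives 1450 for a 1500-byte underlay, which matches the mtu 1450 that flannel.1 reports after installation.

```shell
#!/usr/bin/env bash
# Overlay MTU for VXLAN over IPv4 (no VLAN tag): underlay MTU minus encapsulation overhead.
vxlan_mtu() {
  local underlay_mtu="$1"
  local inner_eth=14 vxlan_hdr=8 outer_udp=8 outer_ipv4=20
  echo $(( underlay_mtu - (inner_eth + vxlan_hdr + outer_udp + outer_ipv4) ))
}

vxlan_mtu 1500   # 1450
vxlan_mtu 9000   # 8950 (jumbo-frame underlay)
```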

Installing and Verifying Flannel

- Check before installation

# Check the IP address ranges

$ kubectl cluster-info dump | grep -m 2 -E "cluster-cidr|service-cluster-ip-range"

# => "--service-cluster-ip-range=10.96.0.0/16",

# "--cluster-cidr=10.244.0.0/16",

# Check the coredns pod status

$ kubectl get pod -n kube-system -l k8s-app=kube-dns -owide

# => NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

# coredns-674b8bbfcf-79mbb 0/1 Pending 0 2d14h <none> <none> <none> <none>

# coredns-674b8bbfcf-rtx95 0/1 Pending 0 2d14h <none> <none> <none> <none>

# 👉 Pending because no CNI has been installed yet

#

$ ip -c link

# => ...

# 2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000

# link/ether 08:00:27:71:19:d8 brd ff:ff:ff:ff:ff:ff

# 3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000

# link/ether 08:00:27:da:24:93 brd ff:ff:ff:ff:ff:ff

$ ip -c route

# => default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

# 10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

# 10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.100

$ brctl show

# => (no bridges)

$ ip -c addr

# => ...

# 2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

# link/ether 08:00:27:71:19:d8 brd ff:ff:ff:ff:ff:ff

# inet 10.0.2.15/24 metric 100 brd 10.0.2.255 scope global dynamic eth0

# valid_lft 85957sec preferred_lft 85957sec

# 3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

# link/ether 08:00:27:da:24:93 brd ff:ff:ff:ff:ff:ff

# inet 192.168.10.100/24 brd 192.168.10.255 scope global eth1

# valid_lft forever preferred_lft forever

$ ifconfig | grep -iEA1 'eth[0-9]:'

# => eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

# inet 10.0.2.15 netmask 255.255.255.0 broadcast 10.0.2.255

# --

# eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

# inet 192.168.10.100 netmask 255.255.255.0 broadcast 192.168.10.255

#

$ iptables-save

$ iptables -t nat -S

$ iptables -t filter -S

$ iptables -t mangle -S

# Save the iptables rules before installing Flannel so they can be compared afterwards.

$ iptables-save > iptables-before-flannel.txt

#

$ tree /etc/cni/net.d/

# => /etc/cni/net.d/

#

# 0 directories, 0 files

- Install Flannel

# Create the kube-flannel namespace manually to avoid namespace-creation errors from Helm.

$ kubectl create ns kube-flannel

# => namespace/kube-flannel created

$ kubectl label --overwrite ns kube-flannel pod-security.kubernetes.io/enforce=privileged

# => namespace/kube-flannel labeled

$ helm repo add flannel https://flannel-io.github.io/flannel/

# => "flannel" has been added to your repositories

$ helm repo list

# => NAME URL

# flannel https://flannel-io.github.io/flannel/

$ helm search repo flannel

# => NAME CHART VERSION APP VERSION DESCRIPTION

# flannel/flannel v0.27.1 v0.27.1 Install Flannel Network Plugin.

$ helm show values flannel/flannel

# => ...

# podCidr: "10.244.0.0/16"

# ...

# cniBinDir: "/opt/cni/bin"

# cniConfDir: "/etc/cni/net.d"

# skipCNIConfigInstallation: false

# enableNFTables: false

# args:

# - "--ip-masq"

# - "--kube-subnet-mgr"

# backend: "vxlan"

# ...

# Specify the NIC that carries Kubernetes traffic (--iface=eth1)

$ cat << EOF > flannel-values.yaml
podCidr: "10.244.0.0/16"
flannel:
  args:
  - "--ip-masq"
  - "--kube-subnet-mgr"
  - "--iface=eth1"
EOF

# Install with Helm

$ helm install flannel --namespace kube-flannel flannel/flannel -f flannel-values.yaml

# => NAME: flannel

# LAST DEPLOYED: Mon Jan 19 13:52:04 2025

# NAMESPACE: kube-flannel

# STATUS: deployed

# REVISION: 1

# TEST SUITE: None

$ helm list -A

# => NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

# flannel kube-flannel 1 2025-01-19 13:52:04.427781204 +0900 KST deployed flannel-v0.27.1 v0.27.1

# Check the init containers: install-cni-plugin, install-cni

$ kc describe pod -n kube-flannel -l app=flannel

# => Name: kube-flannel-ds-5fm6l

# Namespace: kube-flannel

# Priority: 2000001000

# Priority Class Name: system-node-critical

# Service Account: flannel

# Node: k8s-w1/192.168.10.101

# Start Time: Sat, 19 Jul 2025 13:52:06 +0900

# Labels: app=flannel

# controller-revision-hash=66c5c78475

# pod-template-generation=1

# tier=node

# Annotations: <none>

# Status: Running

# IP: 192.168.10.101

# IPs:

# IP: 192.168.10.101

# Controlled By: DaemonSet/kube-flannel-ds

# Init Containers:

# install-cni-plugin:

# ...

# install-cni:

# ....

# Containers:

# kube-flannel:

# Container ID: containerd://c6a1e24ae6193491289908c4b10a8ce6f9a36e000114aaf61dc60da43bdc50ca

# Image: ghcr.io/flannel-io/flannel:v0.27.1

# Image ID: ghcr.io/flannel-io/flannel@sha256:0c95c822b690f83dc827189d691015f92ab7e249e238876b56442b580c492d85

# Port: <none>

# Host Port: <none>

# ...

# Name: kube-flannel-ds-dstmv

# Namespace: kube-flannel

# Priority: 2000001000

# Priority Class Name: system-node-critical

# Service Account: flannel

# Node: k8s-w2/192.168.10.102

# Start Time: Sat, 19 Jul 2025 13:52:05 +0900

# ...

# IP: 192.168.10.102

# ...

# Name: kube-flannel-ds-lsf7h

# Namespace: kube-flannel

# Priority: 2000001000

# Priority Class Name: system-node-critical

# Service Account: flannel

# Node: k8s-ctr/192.168.10.100

# Start Time: Sat, 19 Jul 2025 13:52:04 +0900

# Labels: app=flannel

# controller-revision-hash=66c5c78475

# pod-template-generation=1

# tier=node

# Annotations: <none>

# Status: Running

# IP: 192.168.10.100

# ...

$ tree /opt/cni/bin/ # flannel

# => /opt/cni/bin/

# ├── bandwidth

# ├── bridge

# ├── dhcp

# ├── dummy

# ├── firewall

# ├── flannel

# ├── host-device

# ├── host-local

# ├── ipvlan

# ├── LICENSE

# ├── loopback

# ├── macvlan

# ├── portmap

# ├── ptp

# ├── README.md

# ├── sbr

# ├── static

# ├── tap

# ├── tuning

# ├── vlan

# └── vrf

#

# 1 directory, 21 files

$ tree /etc/cni/net.d/

# => /etc/cni/net.d/

# └── 10-flannel.conflist

#

# 1 directory, 1 file

$ cat /etc/cni/net.d/10-flannel.conflist | jq

# => {

# "name": "cbr0",

# "cniVersion": "0.3.1",

# "plugins": [

# {

# "type": "flannel",

# "delegate": {

# "hairpinMode": true,

# "isDefaultGateway": true

# }

# },

# {

# "type": "portmap",

# "capabilities": {

# "portMappings": true

# }

# }

# ]

# }

$ kc describe cm -n kube-flannel kube-flannel-cfg

# => ...

# net-conf.json:

# ----

# {

# "Network": "10.244.0.0/16",

# "Backend": {

# "Type": "vxlan"

# }

# }

# Compare with the state before installation.

$ ip -c link

# => ...

# 2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000

# link/ether 08:00:27:71:19:d8 brd ff:ff:ff:ff:ff:ff

# 3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000

# link/ether 08:00:27:da:24:93 brd ff:ff:ff:ff:ff:ff

# 4: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN mode DEFAULT group default

# link/ether aa:3f:e5:cd:ae:92 brd ff:ff:ff:ff:ff:ff

$ ip -c route | grep 10.244.

# => 10.244.1.0/24 via 10.244.1.0 dev flannel.1 onlink

# 10.244.2.0/24 via 10.244.2.0 dev flannel.1 onlink

$ ping -c 1 10.244.1.0

# => PING 10.244.1.0 (10.244.1.0) 56(84) bytes of data.

# 64 bytes from 10.244.1.0: icmp_seq=1 ttl=64 time=1.31 ms

#

# --- 10.244.1.0 ping statistics ---

# 1 packets transmitted, 1 received, 0% packet loss, time 0ms

# rtt min/avg/max/mdev = 1.314/1.314/1.314/0.000 ms

$ ping -c 1 10.244.2.0

# => PING 10.244.2.0 (10.244.2.0) 56(84) bytes of data.

# 64 bytes from 10.244.2.0: icmp_seq=1 ttl=64 time=1.31 ms

#

# --- 10.244.2.0 ping statistics ---

# 1 packets transmitted, 1 received, 0% packet loss, time 0ms

# rtt min/avg/max/mdev = 1.312/1.312/1.312/0.000 ms

$ brctl show

$ iptables-save

$ iptables -t nat -S

$ iptables -t filter -S

$ iptables-save > iptables-after-flannel.txt

# Compare the iptables rules before and after installation.

$ diff -u iptables-before-flannel.txt iptables-after-flannel.txt
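iptables-save output also contains "# Generated by ..." / "# Completed on ..." timestamp lines and per-chain packet/byte counters such as [123:4567], so a raw diff can flag lines that did not really change. A minimal sketch that normalizes those before comparing and prints only the lines present after installation but not before; the function names are hypothetical, and the script assumes bash (for process substitution). Sorting discards rule order, so this shows what was added, not where.

```shell
#!/usr/bin/env bash
# Drop iptables-save comment lines and zero out chain counters so only real rule changes remain.
normalize_rules() {
  grep -v '^#' "$1" | sed -E 's/\[[0-9]+:[0-9]+\]/[0:0]/g'
}

# Print lines that appear in the second file but not in the first (e.g. rules added by Flannel).
added_rules() {
  comm -13 <(normalize_rules "$1" | sort) <(normalize_rules "$2" | sort)
}

# Usage:
#   added_rules iptables-before-flannel.txt iptables-after-flannel.txt
```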

# Check k8s-w1 and k8s-w2

$ for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-$i ip -c link ; echo; done

$ for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-$i ip -c route ; echo; done

$ for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-$i brctl show ; echo; done

$ for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-$i sudo iptables -t nat -S ; echo; done

Deploying and Verifying a Sample Application

# Deploy the sample application

$ cat << EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: webpod
spec:
  replicas: 2
  selector:
    matchLabels:
      app: webpod
  template:
    metadata:
      labels:
        app: webpod
    spec:
      affinity:
        # spread the replicas across nodes: the selector must match the pods' own label (app: webpod)
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - webpod
            topologyKey: "kubernetes.io/hostname"
      containers:
      - name: webpod
        image: traefik/whoami
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: webpod
  labels:
    app: webpod
spec:
  selector:
    app: webpod
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  type: ClusterIP
EOF

# => deployment.apps/webpod created

# service/webpod created

# Deploy the curl-pod pod on the k8s-ctr node

$ cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: curl-pod
  labels:
    app: curl
spec:
  nodeName: k8s-ctr
  containers:
  - name: curl
    image: alpine/curl
    command: ["sleep", "36000"]
EOF

# => pod/curl-pod created

# Check the Pods on the control plane node (k8s-ctr)

$ crictl ps

# => CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID POD NAMESPACE

# ba7ddc59fa138 1fb7da88b3320 1 second ago Running curl 0 a87775d3d7098 curl-pod default

# 2c095a0d795b7 83a2e3e54aa1e 19 minutes ago Running kube-flannel 0 49d7f057d7491 kube-flannel-ds-lsf7h kube-flannel

# 13ff95772dd16 738e99dbd7325 35 minutes ago Running kube-proxy 3 0ce95a5226767 kube-proxy-r96sz kube-system

# 625a7ec089f93 c03972dff86ba 35 minutes ago Running kube-scheduler 3 7691ca47ac391 kube-scheduler-k8s-ctr kube-system

# 3b02267780926 ef439b94d49d4 35 minutes ago Running kube-controller-manager 3 232075758b77f kube-controller-manager-k8s-ctr kube-system

# f956731d12744 31747a36ce712 35 minutes ago Running etcd 3 7ac2514bac9cb etcd-k8s-ctr kube-system

# 9f50506b3ca66 c0425f3fe3fbf 35 minutes ago Running kube-apiserver 3 d5246dd2d31b9 kube-apiserver-k8s-ctr kube-system

# 👉 curl-pod was placed directly on the control plane node (k8s-ctr) because its spec sets nodeName: k8s-ctr.

# Check the Pods on the worker nodes (k8s-w1, k8s-w2)

$ for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh vagrant@k8s-$i sudo crictl ps ; echo; done

# => CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID POD NAMESPACE

# c934a221a4fec ab541801c8cc5 57 seconds ago Running webpod 0 7323e0f4eced8 webpod-697b545f57-7j5vt default

# c6a1e24ae6193 83a2e3e54aa1e 20 minutes ago Running kube-flannel 0 3a86bc505126a kube-flannel-ds-5fm6l kube-flannel

# b55a66b5cd0a6 738e99dbd7325 35 minutes ago Running kube-proxy 2 8bc7a54488b35 kube-proxy-hdffr kube-system

#

# >> node : k8s-w2 <<

# CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID POD NAMESPACE

# 54658fa9cfc29 ab541801c8cc5 58 seconds ago Running webpod 0 8dec7a5a7aed1 webpod-697b545f57-sdv4l default

# 9b2a414ee1acc f72407be9e08c 19 minutes ago Running coredns 0 23071bf7a21e4 coredns-674b8bbfcf-rtx95 kube-system

# e1c86c4fa20fe f72407be9e08c 20 minutes ago Running coredns 0 757397c6bcd8f coredns-674b8bbfcf-79mbb kube-system

# eeac62c8beba7 83a2e3e54aa1e 20 minutes ago Running kube-flannel 0 1b4ba4f721424 kube-flannel-ds-dstmv kube-flannel

# 0a6112c11e948 738e99dbd7325 35 minutes ago Running kube-proxy 2 f9f19975aed04 kube-proxy-swgmb kube-system

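# 👉 webpod does not set nodeName:, so the scheduler placed its replicas, and both ended up on the worker nodes (k8s-w1, k8s-w2), most likely because kubeadm taints the control plane node with NoSchedule by default.

- Verification

# Check the deployment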

$ kubectl get deploy,svc,ep webpod -owide

# => NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

# deployment.apps/webpod 2/2 2 2 18m webpod traefik/whoami app=webpod

#

# NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

# service/webpod ClusterIP 10.96.62.184 <none> 80/TCP 18m app=webpod

#

# NAME ENDPOINTS AGE

# endpoints/webpod 10.244.1.2:80,10.244.2.4:80 18m

#

$ kubectl api-resources | grep -i endpoint

# => endpoints ep v1 true Endpoints

# endpointslices discovery.k8s.io/v1 true EndpointSlice

$ kubectl get endpointslices -l app=webpod

# => NAME ADDRESSTYPE PORTS ENDPOINTS AGE

# webpod-9pfs7 IPv4 80 10.244.2.4,10.244.1.2 18m

# Compare with the state before the deployment.

$ ip -c link

# => ...

# 2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000

# link/ether 08:00:27:71:19:d8 brd ff:ff:ff:ff:ff:ff

# 3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000

# link/ether 08:00:27:da:24:93 brd ff:ff:ff:ff:ff:ff

# 4: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN mode DEFAULT group default

# link/ether aa:3f:e5:cd:ae:92 brd ff:ff:ff:ff:ff:ff

# 5: cni0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP mode DEFAULT group default qlen 1000

# link/ether b2:e2:a2:aa:4e:5c brd ff:ff:ff:ff:ff:ff

# 6: veth0911be7c@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP mode DEFAULT group default qlen 1000

# link/ether 6a:8b:92:5e:74:b3 brd ff:ff:ff:ff:ff:ff link-netns cni-15400ffe-d5f7-c6c2-78d9-dbbbc2f08db7

$ brctl show

# => bridge name bridge id STP enabled interfaces

# cni0 8000.b2e2a2aa4e5c no veth0911be7c

# 👉 A cni0 bridge has been created, and the Pod is attached to it through the veth interface.

$ iptables-save

$ iptables -t nat -S

$ iptables-save > iptables-after-deployment.txt

$ diff iptables-after-flannel.txt iptables-after-deployment.txt

# => ...

# 62a63,64

# > :KUBE-SEP-PQBQBGZJJ5FKN3TB - [0:0]

# > :KUBE-SEP-R5LRHDMUTGTM635J - [0:0]

# 66a69

# > :KUBE-SVC-CNZCPOCNCNOROALA - [0:0]

# 92a96,99

# > -A KUBE-SEP-PQBQBGZJJ5FKN3TB -s 10.244.1.2/32 -m comment --comment "default/webpod" -j KUBE-MARK-MASQ

# > -A KUBE-SEP-PQBQBGZJJ5FKN3TB -p tcp -m comment --comment "default/webpod" -m tcp -j DNAT --to-destination 10.244.1.2:80

# > -A KUBE-SEP-R5LRHDMUTGTM635J -s 10.244.2.4/32 -m comment --comment "default/webpod" -j KUBE-MARK-MASQ

# > -A KUBE-SEP-R5LRHDMUTGTM635J -p tcp -m comment --comment "default/webpod" -m tcp -j DNAT --to-destination 10.244.2.4:80

# 98a106

# > -A KUBE-SERVICES -d 10.96.62.184/32 -p tcp -m comment --comment "default/webpod cluster IP" -m tcp --dport 80 -j KUBE-SVC-CNZCPOCNCNOROALA

# 103a112,114

# > -A KUBE-SVC-CNZCPOCNCNOROALA ! -s 10.244.0.0/16 -d 10.96.62.184/32 -p tcp -m comment --comment "default/webpod cluster IP" -m tcp --dport 80 -j KUBE-MARK-MASQ

# > -A KUBE-SVC-CNZCPOCNCNOROALA -m comment --comment "default/webpod -> 10.244.1.2:80" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-PQBQBGZJJ5FKN3TB

# > -A KUBE-SVC-CNZCPOCNCNOROALA -m comment --comment "default/webpod -> 10.244.2.4:80" -j KUBE-SEP-R5LRHDMUTGTM635J

# ...

# Check k8s-w1 and k8s-w2

$ for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-$i ip -c link ; echo; done

# => >> node : k8s-w1 <<

# ...

# 5: cni0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP mode DEFAULT group default qlen 1000

# link/ether 56:8b:b8:09:e1:a0 brd ff:ff:ff:ff:ff:ff

# 6: veth52205e86@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP mode DEFAULT group default qlen 1000

# link/ether ba:b1:d5:a8:5e:c6 brd ff:ff:ff:ff:ff:ff link-netns cni-42b4483c-e253-de82-a5c3-2cbf657cc6ed

#

# >> node : k8s-w2 <<

# ...

# 5: cni0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP mode DEFAULT group default qlen 1000

# link/ether b6:aa:16:04:b0:58 brd ff:ff:ff:ff:ff:ff

# 6: veth605dad7b@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP mode DEFAULT group default qlen 1000

# link/ether 36:5a:70:ff:de:e9 brd ff:ff:ff:ff:ff:ff link-netns cni-e020c420-373a-900d-bf44-34fbe4622f7e

# 7: veth002efe84@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP mode DEFAULT group default qlen 1000

# link/ether be:86:35:e2:1a:5d brd ff:ff:ff:ff:ff:ff link-netns cni-af271963-86ee-26b6-35b9-39173672cd1a

# 8: veth1dce6530@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP mode DEFAULT group default qlen 1000

# link/ether fa:d9:af:04:69:e2 brd ff:ff:ff:ff:ff:ff link-netns cni-ca1eedc6-43ff-e346-318d-ba345e0ba532

$ for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-$i ip -c route ; echo; done

$ for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-$i brctl show ; echo; done

$ for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-$i sudo iptables -t nat -S ; echo; done

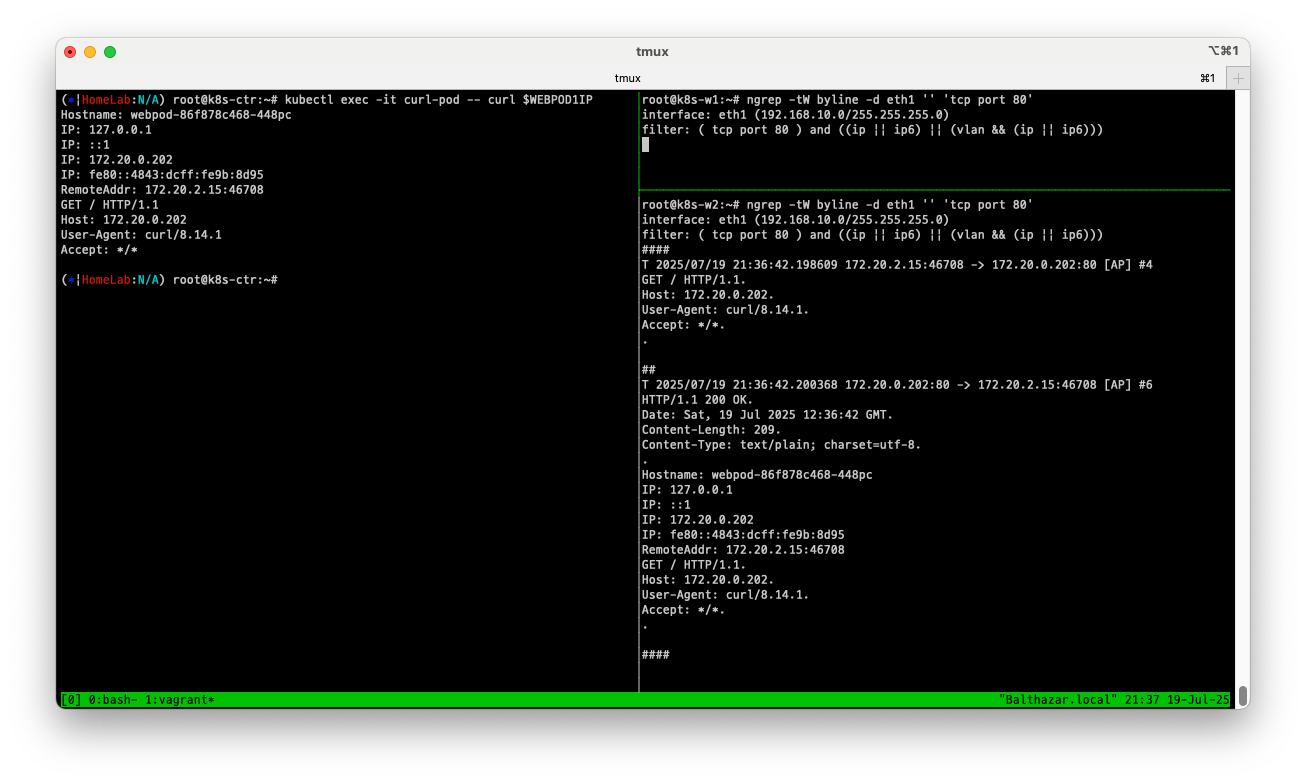

- Connectivity check

#

$ kubectl get pod -l app=webpod -owide

# => NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

# webpod-697b545f57-7j5vt 1/1 Running 0 24m 10.244.1.2 k8s-w1 <none> <none>

# webpod-697b545f57-sdv4l 1/1 Running 0 24m 10.244.2.4 k8s-w2 <none> <none>

$ POD1IP=10.244.1.2

$ kubectl exec -it curl-pod -- curl $POD1IP

# => Hostname: webpod-697b545f57-7j5vt

# IP: 127.0.0.1

# IP: ::1

# IP: 10.244.1.2

# IP: fe80::dc28:1fff:fe46:abd0

# RemoteAddr: 10.244.0.2:46774

# GET / HTTP/1.1

# Host: 10.244.1.2

# User-Agent: curl/8.14.1

# Accept: */*

#

$ kubectl get svc,ep webpod

# => NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

# service/webpod ClusterIP 10.96.62.184 <none> 80/TCP 25m

#

# NAME ENDPOINTS AGE

# endpoints/webpod 10.244.1.2:80,10.244.2.4:80 25m

$ kubectl exec -it curl-pod -- curl webpod

# => Hostname: webpod-697b545f57-7j5vt

# IP: 127.0.0.1

# IP: ::1

# IP: 10.244.1.2

# IP: fe80::dc28:1fff:fe46:abd0

# RemoteAddr: 10.244.0.2:55684

# GET / HTTP/1.1

# Host: webpod

# User-Agent: curl/8.14.1

# Accept: */*

$ kubectl exec -it curl-pod -- curl webpod | grep Hostname

# => Hostname: webpod-697b545f57-7j5vt

$ kubectl exec -it curl-pod -- sh -c 'while true; do curl -s webpod | grep Hostname; sleep 1; done'

# => Hostname: webpod-697b545f57-7j5vt

# Hostname: webpod-697b545f57-sdv4l

# Hostname: webpod-697b545f57-7j5vt

# ...

# Confirm that the Service is implemented with iptables rules >> what happens as the number of Services grows to 100, 1,000, or 10,000?

$ kubectl get svc webpod -o jsonpath="{.spec.clusterIP}"

# => 10.96.62.184

$ SVCIP=$(kubectl get svc webpod -o jsonpath="{.spec.clusterIP}")

$ iptables -t nat -S | grep $SVCIP

# => -A KUBE-SERVICES -d 10.96.62.184/32 -p tcp -m comment --comment "default/webpod cluster IP" -m tcp --dport 80 -j KUBE-SVC-CNZCPOCNCNOROALA

# -A KUBE-SVC-CNZCPOCNCNOROALA ! -s 10.244.0.0/16 -d 10.96.62.184/32 -p tcp -m comment --comment "default/webpod cluster IP" -m tcp --dport 80 -j KUBE-MARK-MASQ

$ for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-$i sudo iptables -t nat -S | grep $SVCIP ; echo; done

# => >> node : k8s-w1 <<

# -A KUBE-SERVICES -d 10.96.62.184/32 -p tcp -m comment --comment "default/webpod cluster IP" -m tcp --dport 80 -j KUBE-SVC-CNZCPOCNCNOROALA

# -A KUBE-SVC-CNZCPOCNCNOROALA ! -s 10.244.0.0/16 -d 10.96.62.184/32 -p tcp -m comment --comment "default/webpod cluster IP" -m tcp --dport 80 -j KUBE-MARK-MASQ

#

# >> node : k8s-w2 <<

# -A KUBE-SERVICES -d 10.96.62.184/32 -p tcp -m comment --comment "default/webpod cluster IP" -m tcp --dport 80 -j KUBE-SVC-CNZCPOCNCNOROALA

# -A KUBE-SVC-CNZCPOCNCNOROALA ! -s 10.244.0.0/16 -d 10.96.62.184/32 -p tcp -m comment --comment "default/webpod cluster IP" -m tcp --dport 80 -j KUBE-MARK-MASQ

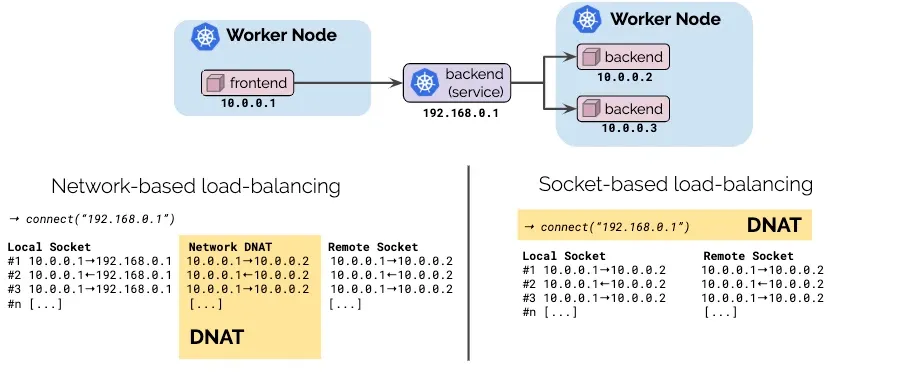
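The two `KUBE-SVC-CNZCPOCNCNOROALA` jump rules shown earlier split traffic with `-m statistic --mode random --probability 0.50000000000`, while the last rule has no statistic match at all. This is how kube-proxy's iptables mode spreads new connections evenly: with N endpoints, rule i matches with probability 1/(N-i+1) and the final rule takes whatever falls through. The sketch below reproduces that arithmetic with awk; `kube_proxy_probabilities` and `effective_shares` are hypothetical helper names, not part of kube-proxy, and the 11-decimal formatting simply mirrors the iptables-save output above.

```shell
# Hypothetical helper (not part of kube-proxy): per-rule probabilities in a
# KUBE-SVC-* chain with N endpoints. Rule i (1-based) matches with probability
# 1/(N-i+1); the last rule has no statistic match and takes everything left.
kube_proxy_probabilities() {
  awk -v n="$1" 'BEGIN {
    for (i = 1; i < n; i++)
      printf "rule %d: --probability %.11f\n", i, 1 / (n - i + 1)
    printf "rule %d: no statistic match (always taken)\n", n
  }'
}

# Effective share of endpoint i = (chance that rules 1..i-1 all missed) * p_i
effective_shares() {
  awk -v n="$1" 'BEGIN {
    remaining = 1
    for (i = 1; i <= n; i++) {
      p = (i < n) ? 1 / (n - i + 1) : 1
      printf "endpoint %d: %.4f\n", i, remaining * p
      remaining *= 1 - p
    }
  }'
}

kube_proxy_probabilities 2   # same shape as the two webpod rules above
kube_proxy_probabilities 3
effective_shares 3           # each endpoint ends up with about 1/3
```

With two endpoints, the first rule takes half of the new connections and the second takes the rest, which is why the `while` loop above alternates between the two webpod Pods. Every extra endpoint adds another rule to the chain.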

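- Drawbacks of iptables at large scale

- The more iptables rules kube-proxy creates, the more performance can degrade.

- In particular, with many Services the number of iptables rules grows rapidly and can noticeably affect performance.

- In a test cluster running 19,000 Pods on 3,800 nodes, more than 24,000 iptables rules were created.

- The resulting performance degradation was:

- 1.2 ms of added latency when establishing a connection.

- More than 5 minutes to update the cluster's iptables rules.

- 53% CPU overhead.

- Because of these problems, CNI plugins such as Cilium, which use eBPF instead of iptables, have become popular alternatives.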

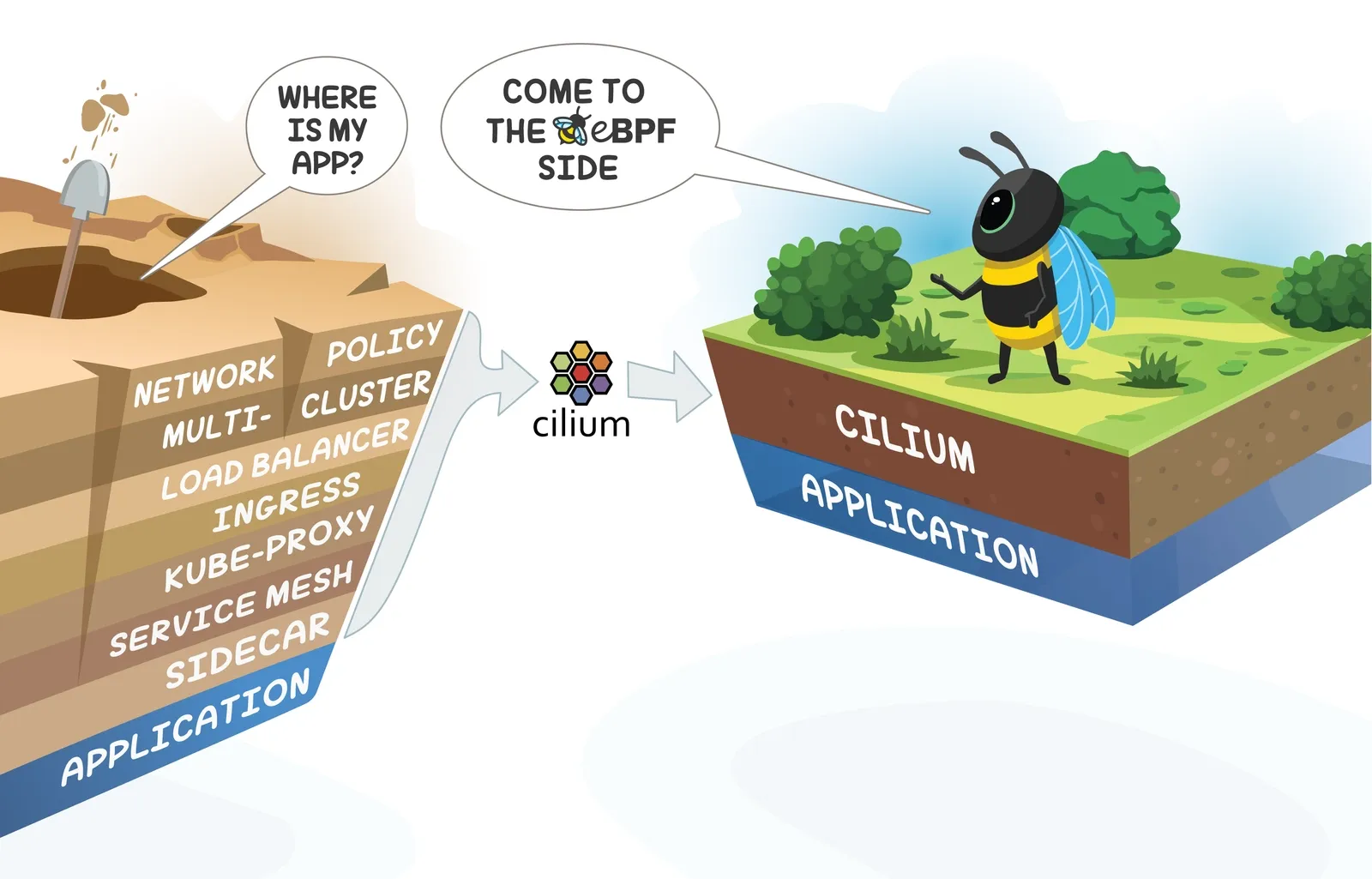
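To get a feel for the growth, the diff earlier in this section lists 11 added nat-table lines for a single ClusterIP Service with 2 endpoints (default/webpod). The sketch below extrapolates from that shape only. It assumes every Service has one TCP port, is of type ClusterIP, and has the same number of endpoints; real clusters also get NodePort, LoadBalancer, and other rules, so treat the numbers as a rough illustration, not a measurement. The function names are hypothetical.

```shell
# Rough estimate (hypothetical helper) of the iptables-save lines kube-proxy adds
# to the nat table, assuming every Service looks like default/webpod in the diff:
#   chain declarations : 1 KUBE-SVC-* + E KUBE-SEP-*
#   KUBE-SERVICES      : 1 rule matching the ClusterIP
#   KUBE-SVC-*         : 1 KUBE-MARK-MASQ rule + E jumps to KUBE-SEP-*
#   KUBE-SEP-*         : 2 rules per endpoint (KUBE-MARK-MASQ + DNAT)
nat_lines_per_service() {
  local e=$1
  echo $(( (1 + e) + 1 + (1 + e) + 2 * e ))
}

# estimate_nat_lines SERVICES ENDPOINTS_PER_SERVICE
estimate_nat_lines() {
  echo $(( $1 * $(nat_lines_per_service "$2") ))
}

for services in 100 1000 10000; do
  printf '%6d Services x 2 endpoints -> ~%d nat lines\n' \
    "$services" "$(estimate_nat_lines "$services" 2)"
done
```

For 2 endpoints the formula gives 4·2+3 = 11 lines per Service, matching the diff. Beyond the raw count, iptables evaluates a chain such as KUBE-SERVICES rule by rule, and kube-proxy has to re-sync these rules through iptables-restore whenever Services or endpoints change.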

Cilium CNI

Introducing Cilium CNI

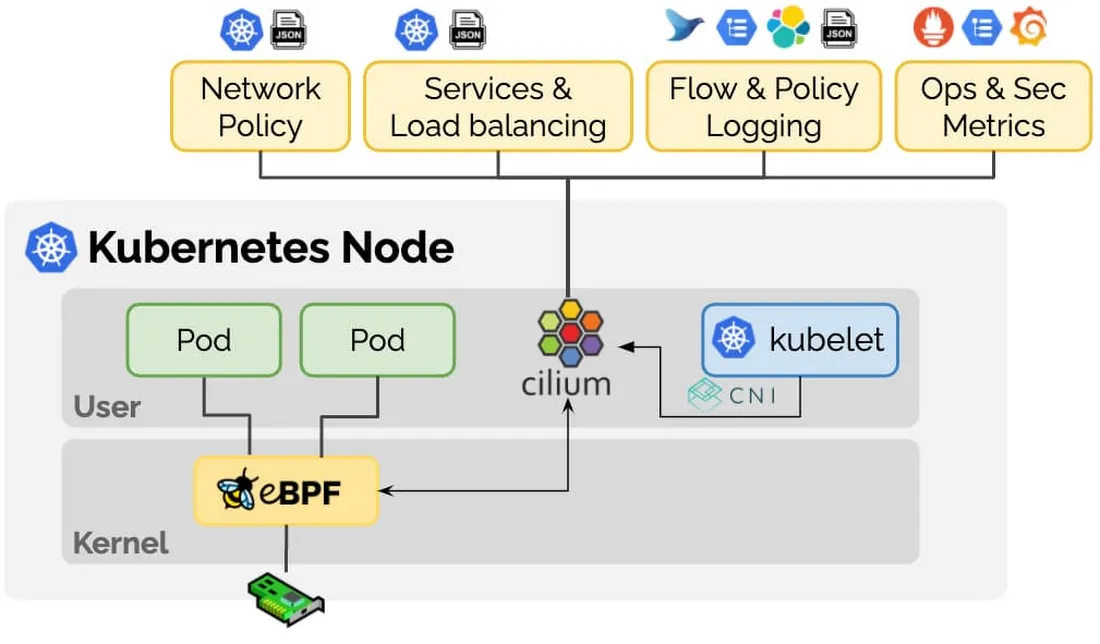

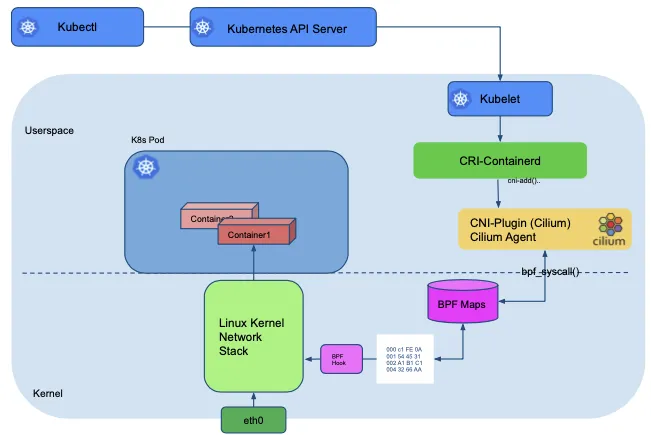

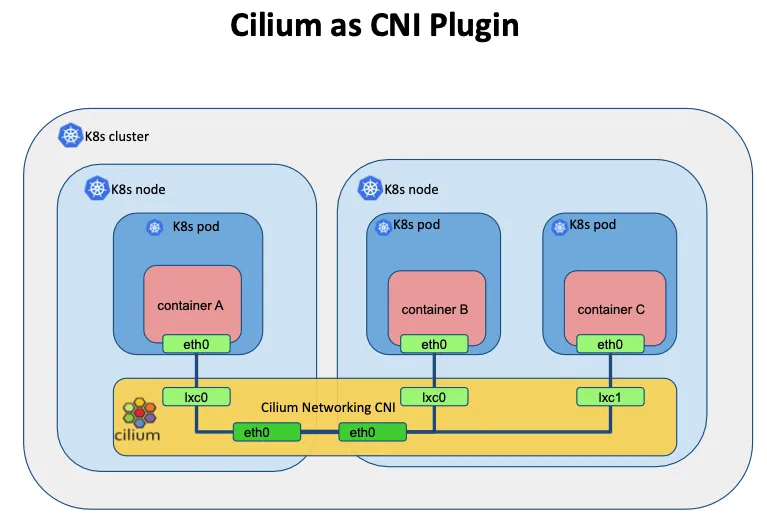

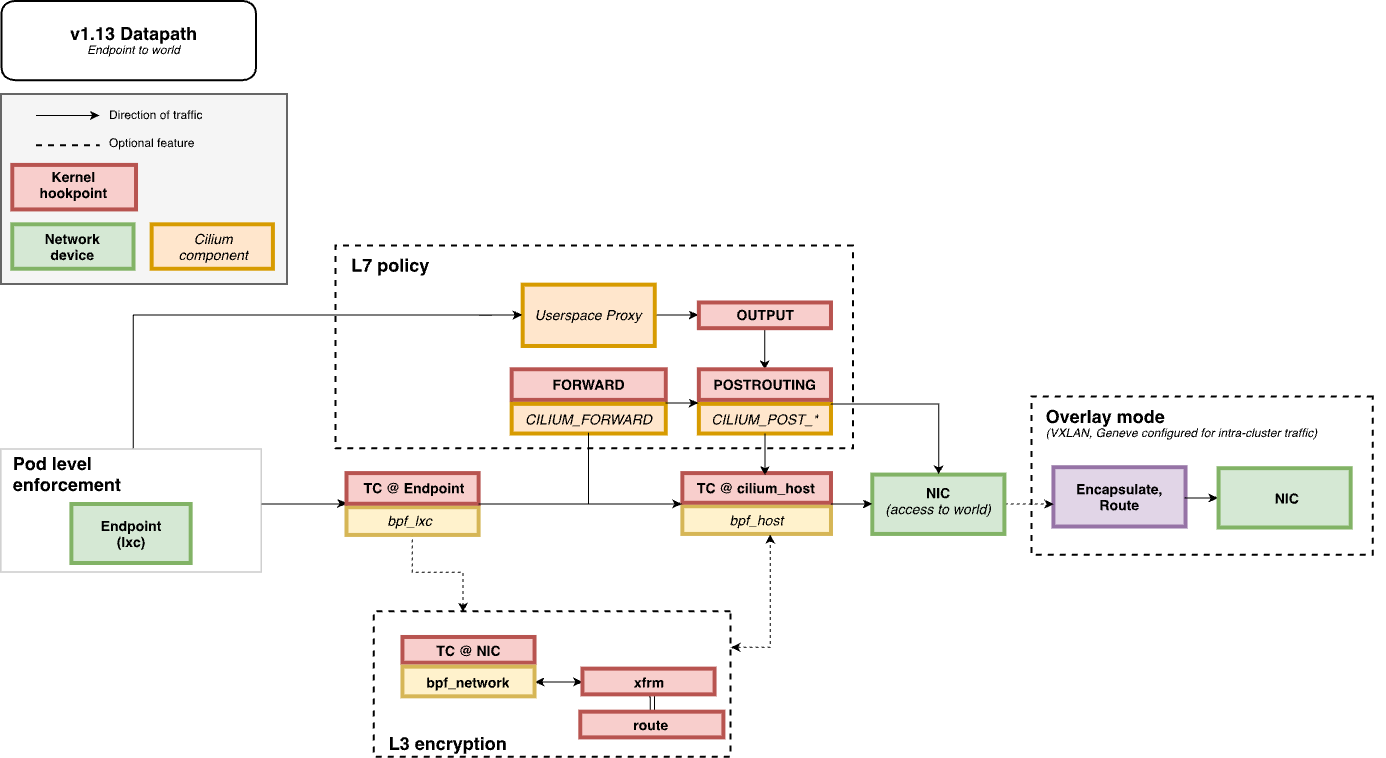

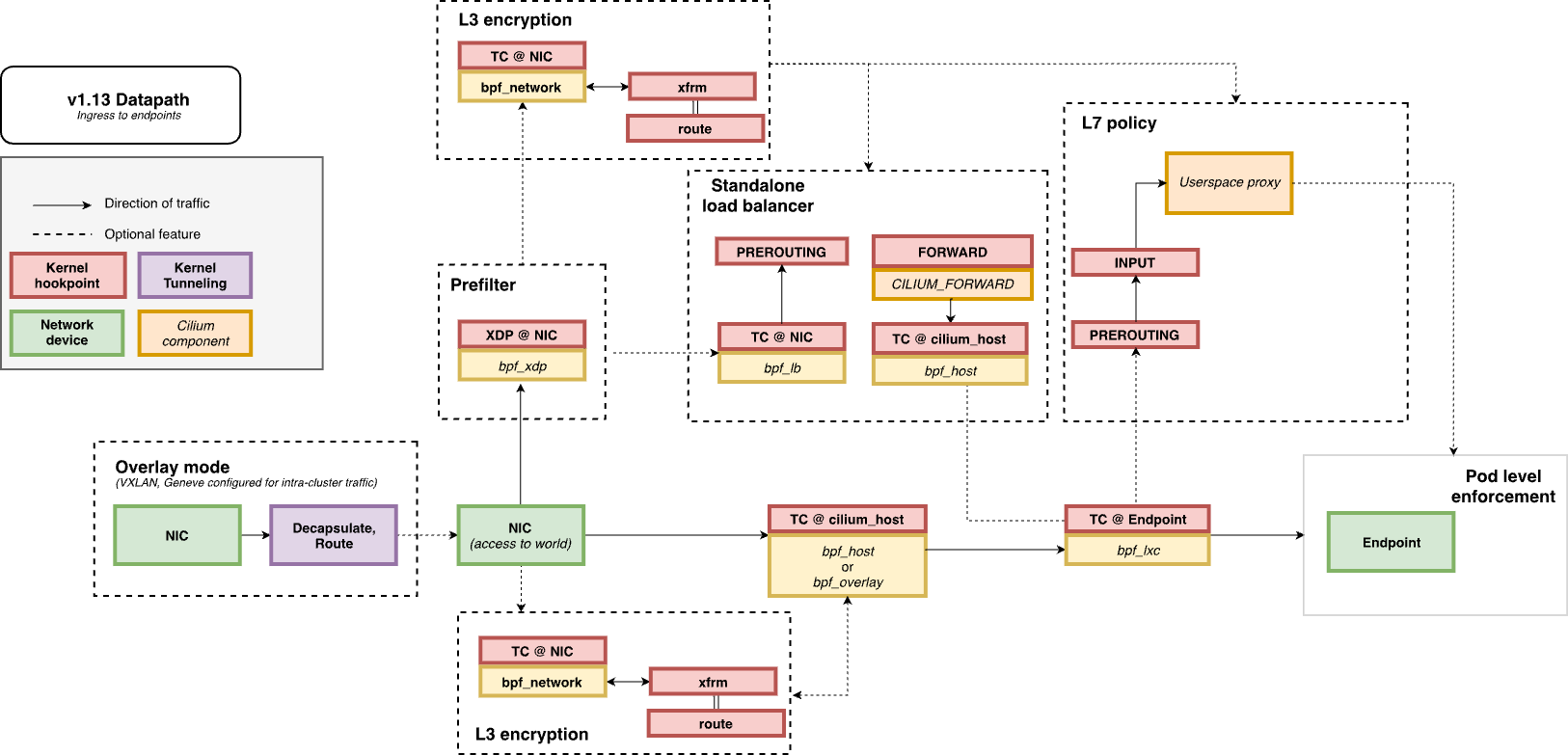

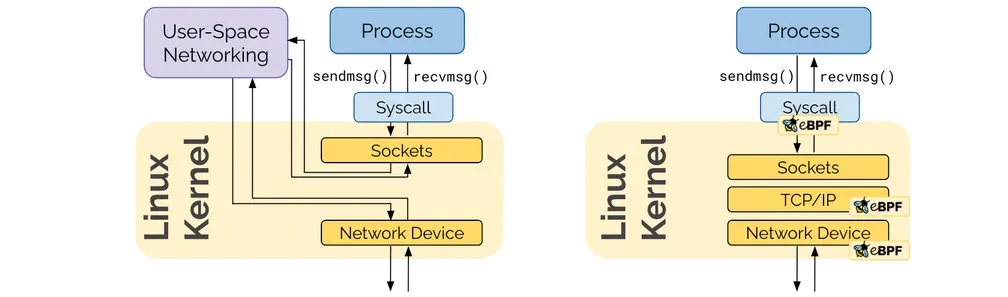

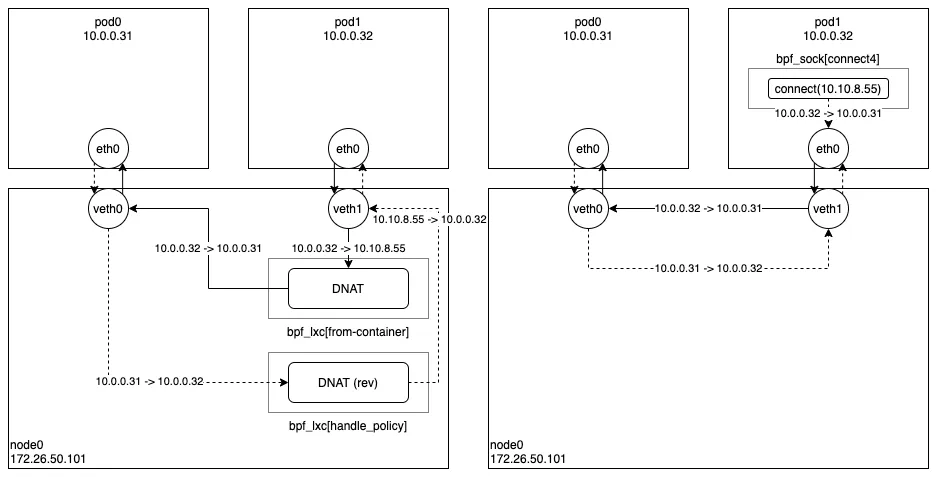

- Cilium is a CNI plugin that uses eBPF to simplify the traditionally complex network stack and process packets faster.

- By replacing the iptables-based kube-proxy, it resolves most of the drawbacks of the iptables-based CNI setup examined above.

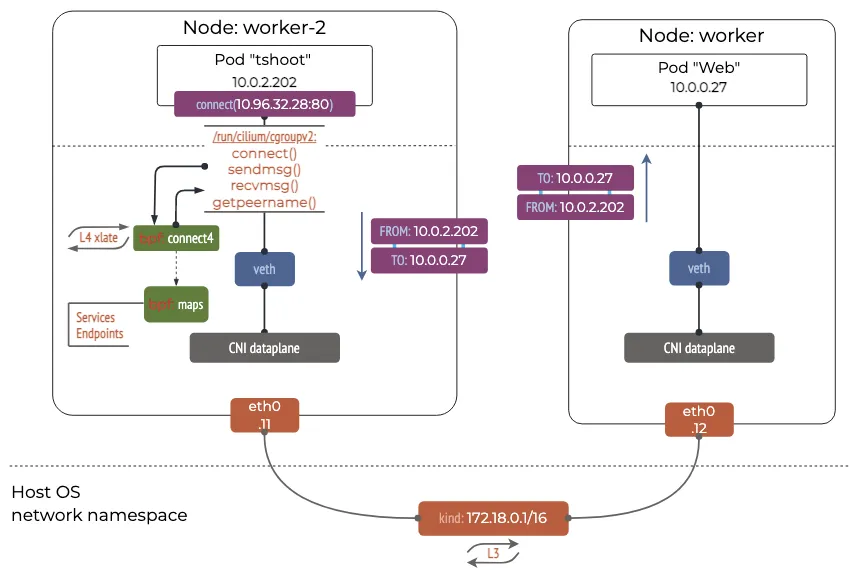

https://isovalent.com/blog/post/migrating-from-metallb-to-cilium/


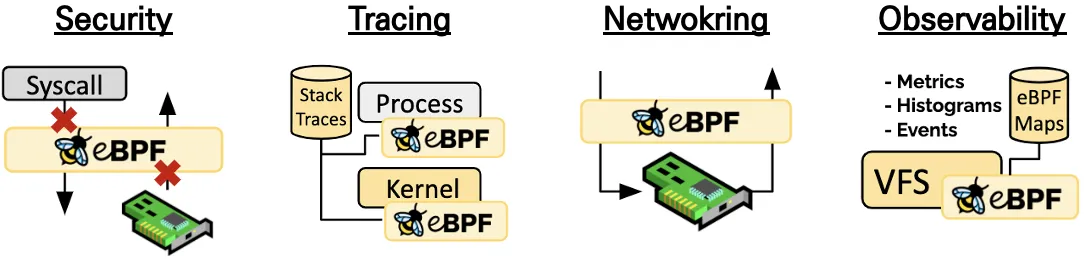

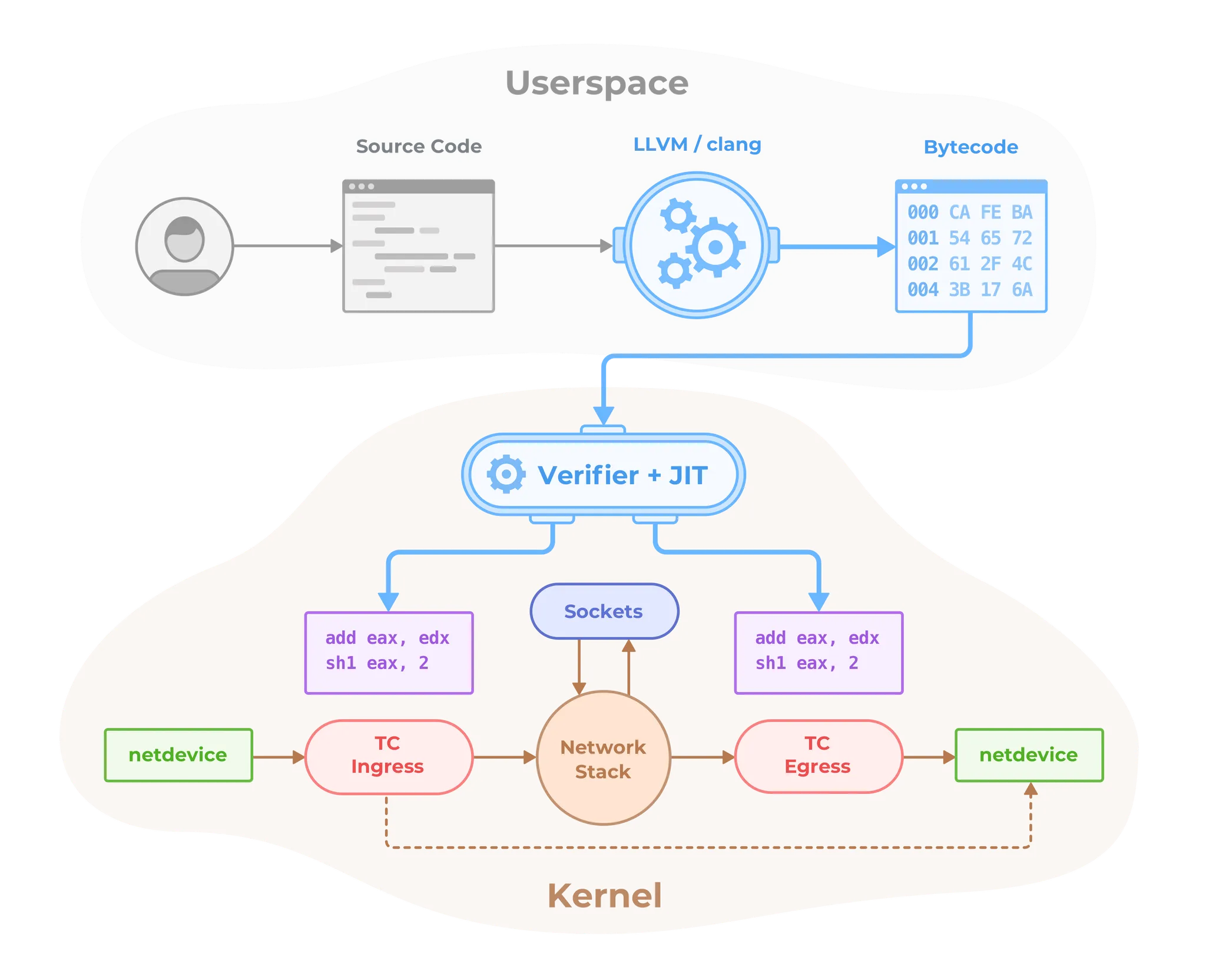

- With eBPF, Cilium can load freely programmed bytecode into the Linux kernel and run it there, without additional kernel code changes or configuration changes. Link

- eBPF can also use the ingress TC (Traffic Control) hook of the receiving NIC to intercept every incoming packet.

Example of an eBPF program attached to a NIC's TC hooks

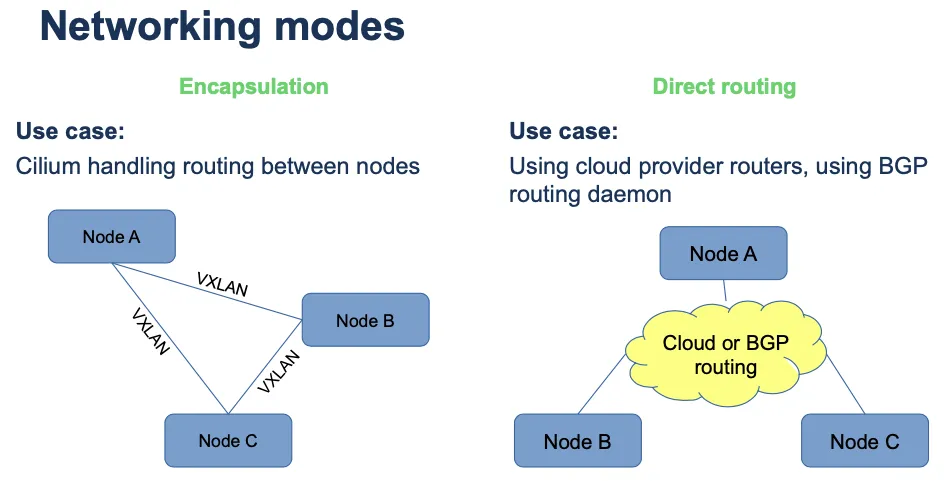

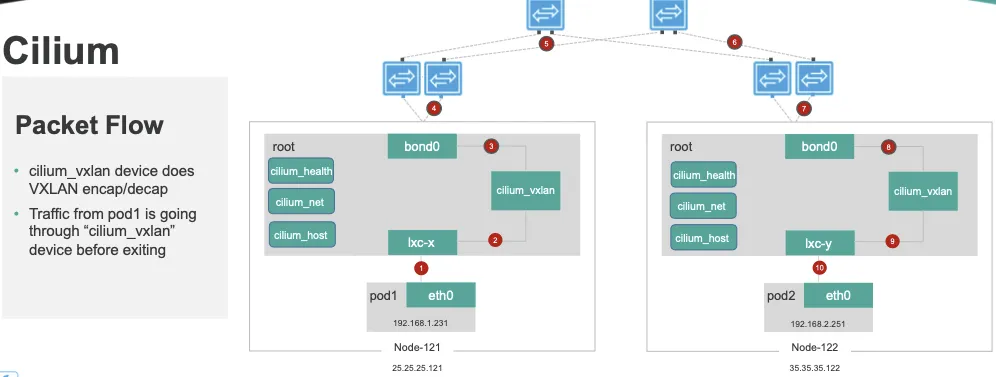

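- Cilium offers two networking modes: tunnel mode (VXLAN, GENEVE) and native routing mode. Docs

- Tunnel mode: Cilium creates VXLAN (UDP 8472) or GENEVE (UDP 6081) interfaces and forwards traffic between nodes through them. Also called encapsulation mode.

- Native routing mode: Cilium does not encapsulate packets; traffic not destined for a local endpoint is handed to the Linux kernel's routing subsystem, so forwarding relies on routing provided outside Cilium (the cloud network, BGP routing, etc.). Also called direct routing mode.

- Cilium was accepted into the CNCF in October 2021. Link

- Google GKE Dataplane V2 and AWS EKS Anywhere use Cilium as their default CNI. Link

- Cilium can fully replace kube-proxy.

- Components - Link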

Cilium architecture - source

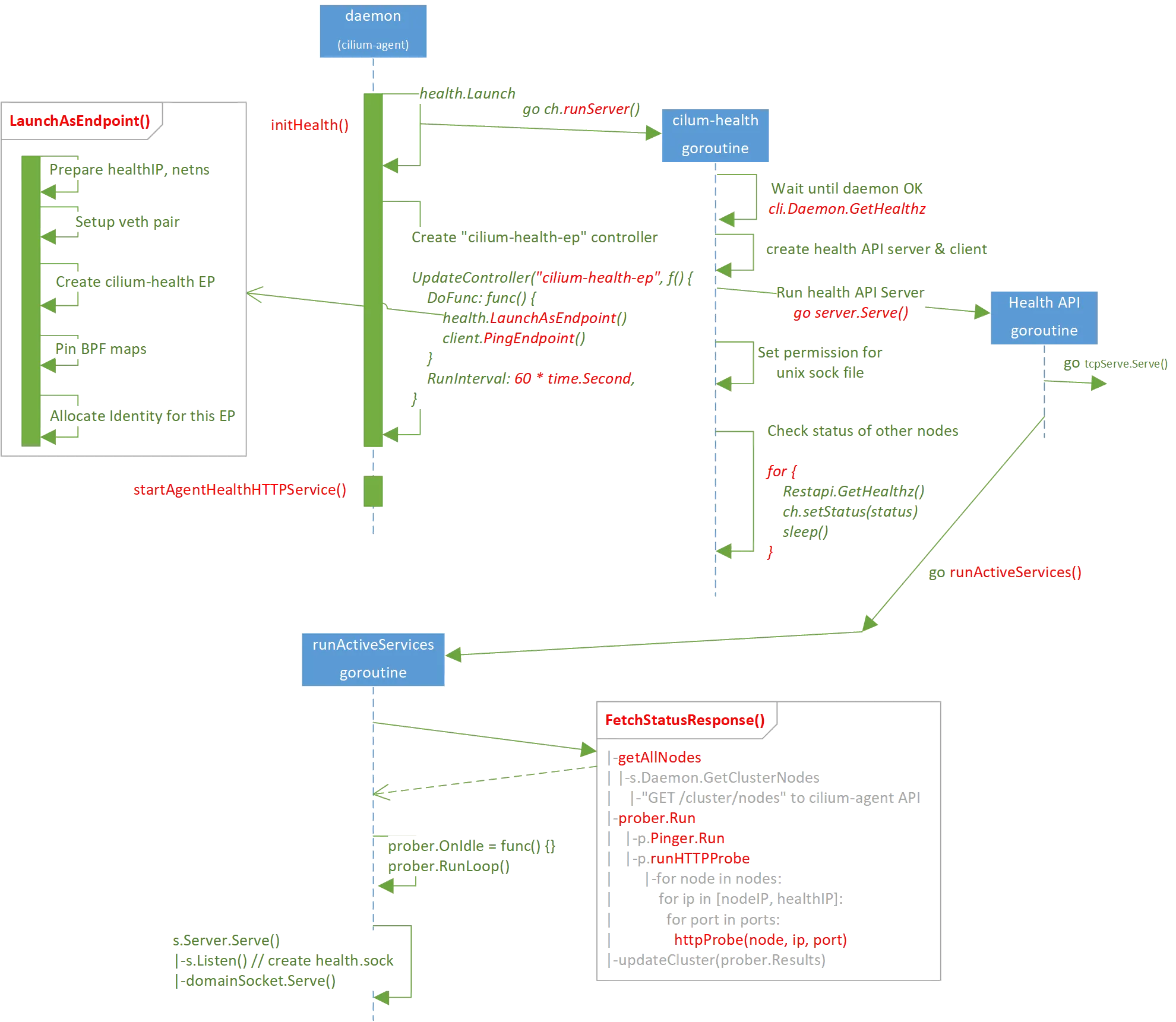

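- Cilium Operator: manages tasks that should be handled once for the whole K8S cluster rather than once per node.

- Cilium Agent: runs as a DaemonSet on every node; using configuration from the K8S API, it handles network configuration, network policies, Service load balancing, monitoring, and so on, and manages the eBPF programs.

- Cilium Client (CLI): Cilium's command-line tool, which can access the eBPF maps directly to inspect their state.

- Hubble: the network and security observability platform, made up of a Server, Relay, Client, and Graphical UI.

- Data Store: stores the state shared between Cilium Agents and propagates it; one of two kinds can be chosen (K8S CRDs or a key-value store).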
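The tunnel and native routing modes described above are chosen through Helm values at install time. As a sketch, the hypothetical helpers below (not Cilium tooling) map each mode to Helm `--set` flags and return the UDP port that must be open between nodes in tunnel mode. `routingMode`, `tunnelProtocol`, `ipv4NativeRoutingCIDR`, and `autoDirectNodeRoutes` are Cilium Helm values; the helper names and their argument format are assumptions for illustration.

```shell
# Hypothetical helpers: map Cilium routing modes to Helm flags and tunnel ports.

# UDP port used between nodes by each encapsulation protocol.
cilium_tunnel_port() {
  case "$1" in
    vxlan)  echo 8472 ;;
    geneve) echo 6081 ;;
    *) echo "unknown tunnel protocol: $1" >&2; return 1 ;;
  esac
}

# Helm --set flags for a routing mode:
#   tunnel vxlan|geneve : encapsulation (tunnel) mode
#   native <pod CIDR>   : native routing; autoDirectNodeRoutes=true only works
#                         when all nodes share one L2 segment (true in this lab)
cilium_routing_flags() {
  case "$1" in
    tunnel)
      cilium_tunnel_port "$2" >/dev/null || return 1
      echo "--set routingMode=tunnel --set tunnelProtocol=$2"
      ;;
    native)
      [ -n "$2" ] || { echo "native mode needs a pod CIDR" >&2; return 1; }
      echo "--set routingMode=native --set ipv4NativeRoutingCIDR=$2 --set autoDirectNodeRoutes=true"
      ;;
    *) echo "unknown routing mode: $1" >&2; return 1 ;;
  esac
}

cilium_routing_flags tunnel geneve          # flags for GENEVE encapsulation
cilium_tunnel_port geneve                   # UDP port to allow between nodes
cilium_routing_flags native 172.20.0.0/16   # the mode this lab installs later
```

Tunnel mode works on almost any network because only node IPs need to be routable, at the cost of encapsulation overhead; native routing avoids that overhead but requires the network to route Pod IPs.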

Installing Cilium CNI

Checking Cilium system requirements - official docs

- Hosts with an AMD64 or AArch64 CPU architecture

- Linux kernel 5.4 or later, or an equivalent (e.g., 4.18 on RHEL 8.6)

$ arch
# => aarch64
$ uname -r
# => 6.8.0-53-generic

- Kernel configuration options enabled

# [Kernel config options] Base requirements
$ grep -E 'CONFIG_BPF|CONFIG_BPF_SYSCALL|CONFIG_NET_CLS_BPF|CONFIG_BPF_JIT|CONFIG_NET_CLS_ACT|CONFIG_NET_SCH_INGRESS|CONFIG_CRYPTO_SHA1|CONFIG_CRYPTO_USER_API_HASH|CONFIG_CGROUPS|CONFIG_CGROUP_BPF|CONFIG_PERF_EVENTS|CONFIG_SCHEDSTATS' /boot/config-$(uname -r)
# => CONFIG_BPF=y
# CONFIG_BPF_SYSCALL=y
# CONFIG_BPF_JIT=y
# CONFIG_BPF_JIT_ALWAYS_ON=y
# CONFIG_BPF_JIT_DEFAULT_ON=y
# CONFIG_BPF_UNPRIV_DEFAULT_OFF=y
# # CONFIG_BPF_PRELOAD is not set
# CONFIG_BPF_LSM=y
# CONFIG_CGROUPS=y
# CONFIG_CGROUP_BPF=y
# CONFIG_PERF_EVENTS=y
# CONFIG_NET_SCH_INGRESS=m
# CONFIG_NET_CLS_BPF=m
# CONFIG_NET_CLS_ACT=y
# CONFIG_BPF_STREAM_PARSER=y
# CONFIG_CRYPTO_SHA1=y
# CONFIG_CRYPTO_USER_API_HASH=m
# CONFIG_CRYPTO_SHA1_ARM64_CE=m
# CONFIG_SCHEDSTATS=y
# CONFIG_BPF_EVENTS=y
# CONFIG_BPF_KPROBE_OVERRIDE=y

# [Kernel config options] Requirements for Tunneling and Routing
$ grep -E 'CONFIG_VXLAN=y|CONFIG_VXLAN=m|CONFIG_GENEVE=y|CONFIG_GENEVE=m|CONFIG_FIB_RULES=y' /boot/config-$(uname -r)
# => CONFIG_FIB_RULES=y   # built into the kernel
# CONFIG_VXLAN=m          # compiled as a module, loaded into the kernel when used
# CONFIG_GENEVE=m         # compiled as a module, loaded into the kernel when used

## (Note) Loading the kernel modules
$ lsmod | grep -E 'vxlan|geneve'
# => vxlan 147456 0
# ip6_udp_tunnel 16384 1 vxlan
# udp_tunnel 36864 1 vxlan
$ modprobe geneve
$ lsmod | grep -E 'vxlan|geneve'
# => geneve 45056 0
# vxlan 147456 0
# ip6_udp_tunnel 16384 2 geneve,vxlan
# udp_tunnel 36864 2 geneve,vxlan

# [Kernel config options] Requirements for L7 and FQDN Policies
$ grep -E 'CONFIG_NETFILTER_XT_TARGET_TPROXY|CONFIG_NETFILTER_XT_TARGET_MARK|CONFIG_NETFILTER_XT_TARGET_CT|CONFIG_NETFILTER_XT_MATCH_MARK|CONFIG_NETFILTER_XT_MATCH_SOCKET' /boot/config-$(uname -r)
# => CONFIG_NETFILTER_XT_TARGET_CT=m
# CONFIG_NETFILTER_XT_TARGET_MARK=m
# CONFIG_NETFILTER_XT_TARGET_TPROXY=m
# CONFIG_NETFILTER_XT_MATCH_MARK=m
# CONFIG_NETFILTER_XT_MATCH_SOCKET=m

# [Kernel config options] Requirements for Netkit Device Mode
$ grep -E 'CONFIG_NETKIT=y|CONFIG_NETKIT=m' /boot/config-$(uname -r)
# => CONFIG_NETKIT=y

- Minimum kernel versions for advanced features - Docs

| Cilium Feature | Minimum Kernel Version |
| --- | --- |
| WireGuard Transparent Encryption | >= 5.6 |
| Full support for Session Affinity | >= 5.7 |
| BPF-based proxy redirection | >= 5.7 |
| Socket-level LB bypass in pod netns | >= 5.7 |
| L3 devices | >= 5.8 |
| BPF-based host routing | >= 5.10 |
| Multicast Support in Cilium (Beta) (AMD64) | >= 5.10 |
| IPv6 BIG TCP support | >= 5.19 |
| Multicast Support in Cilium (Beta) (AArch64) | >= 6.0 |
| IPv4 BIG TCP support | >= 6.3 |

- Firewall rules for Cilium (node-to-node traffic): the required ports must be allowed inbound and outbound - Docs
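The kernel version and kernel config requirements above can be checked on each node before installing. The sketch below assumes GNU `sort -V` (coreutils) for version ordering and a config file in the `/boot/config-$(uname -r)` format; `kernel_at_least` and `missing_kernel_configs` are hypothetical helper names. It compares only the upstream version number, so distribution kernels with backported features (such as RHEL's 4.18) have to be judged against the vendor's documentation instead.

```shell
# Hypothetical node preflight helpers for Cilium's kernel requirements.
# Assumes GNU coreutils (sort -V).

# Succeeds if kernel release $1 (e.g. 6.8.0-53-generic) is at least version $2 (e.g. 5.10).
kernel_at_least() {
  local current=${1%%-*}   # drop the distro suffix: 6.8.0-53-generic -> 6.8.0
  [ "$(printf '%s\n%s\n' "$2" "$current" | sort -V | head -n 1)" = "$2" ]
}

# Prints each option from the remaining arguments that is neither =y nor =m in config file $1.
missing_kernel_configs() {
  local config=$1 opt
  shift
  for opt in "$@"; do
    grep -Eq "^${opt}=(y|m)\$" "$config" || echo "$opt"
  done
}

# Example run on the current node; skipped when no kernel config file is readable.
config_file=/boot/config-$(uname -r)
if [ -r "$config_file" ]; then
  kernel_at_least "$(uname -r)" 5.10 && echo "kernel >= 5.10 (BPF-based host routing)"
  missing_kernel_configs "$config_file" CONFIG_BPF CONFIG_BPF_SYSCALL CONFIG_NET_CLS_BPF \
    CONFIG_BPF_JIT CONFIG_NET_CLS_ACT CONFIG_NET_SCH_INGRESS CONFIG_CRYPTO_SHA1 \
    CONFIG_CRYPTO_USER_API_HASH CONFIG_CGROUPS CONFIG_CGROUP_BPF CONFIG_PERF_EVENTS \
    CONFIG_SCHEDSTATS
fi
```

An empty list from `missing_kernel_configs` means every base option is either built in or available as a module; `kernel_at_least "$(uname -r)" 6.3` would similarly answer whether the IPv4 BIG TCP minimum from the table is met.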

- Mounted eBPF filesystem: some distributions mount it already; otherwise Cilium tries to mount it during installation - Docs

# Check that the eBPF filesystem is mounted
$ mount | grep /sys/fs/bpf
# => bpf on /sys/fs/bpf type bpf (rw,nosuid,nodev,noexec,relatime,mode=700)

- Privileges: Cilium needs administrator-level privileges to operate - Docs

- Cilium interacts with the Linux kernel to install the eBPF programs that implement networking and security policies. This requires administrator privileges, for which Cilium uses the CAP_SYS_ADMIN capability; the capability must be granted to the cilium-agent container.

- The simplest way to do this is to run cilium-agent as the root user or in privileged mode.

- Cilium also needs access to the host networking namespace, so the Cilium Pod must be configured to use the host network namespace directly.
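The eBPF filesystem and privilege requirements above can also be checked from a shell. In this sketch (hypothetical helper names, not Cilium tooling), `bpffs_mounted` looks for a `bpf` filesystem mounted at `/sys/fs/bpf` in a `/proc/mounts`-format table, and `has_cap_sys_admin` tests bit 21, which is `CAP_SYS_ADMIN`, of a hex capability mask such as the `CapEff` line of `/proc/<pid>/status`.

```shell
# Hypothetical helpers for the eBPF filesystem and privilege requirements.

# Succeeds if mounts table $1 (default /proc/mounts; fields: device mountpoint fstype ...)
# has a filesystem of type bpf mounted at /sys/fs/bpf.
bpffs_mounted() {
  awk '$2 == "/sys/fs/bpf" && $3 == "bpf" { found = 1 } END { exit !found }' "${1:-/proc/mounts}"
}

# Succeeds if hex capability mask $1 (e.g. CapEff from /proc/<pid>/status)
# includes CAP_SYS_ADMIN, which is capability number 21.
has_cap_sys_admin() {
  case "$1" in
    ''|*[!0-9a-fA-F]*) return 2 ;;   # not a hex mask
  esac
  [ $(( (0x$1 >> 21) & 1 )) -eq 1 ]
}

# Example run on the current host
bpffs_mounted 2>/dev/null && echo "bpf filesystem is mounted at /sys/fs/bpf"
# CapEff of the awk process, which inherits this shell's capabilities
capeff=$(awk '/^CapEff:/ { print $2 }' /proc/self/status 2>/dev/null)
if [ -n "$capeff" ] && has_cap_sys_admin "$capeff"; then
  echo "current process has CAP_SYS_ADMIN"
fi
```

For reference, a root shell on a recent kernel typically shows a full mask such as `000001ffffffffff`, while a container started with Docker's default capability set (`00000000a80425fb`) does not include CAP_SYS_ADMIN.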

Removing Flannel and kube-proxy

- Remove the existing Flannel CNI.

$ helm uninstall -n kube-flannel flannel
# => release "flannel" uninstalled
$ helm list -A
# => NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION
$ kubectl get all -n kube-flannel
# => No resources found in kube-flannel namespace.
$ kubectl delete ns kube-flannel
# => namespace "kube-flannel" deleted
$ kubectl get pod -A -owide

- Remove the Flannel-related interfaces on every node (k8s-ctr, k8s-w1, k8s-w2) with the commands below.

# Check before removal

$ ip -c link

# => ...

# 4: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN mode DEFAULT group default

# link/ether aa:3f:e5:cd:ae:92 brd ff:ff:ff:ff:ff:ff

# 5: cni0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP mode DEFAULT group default qlen 1000

# link/ether 1e:a9:44:a0:00:e1 brd ff:ff:ff:ff:ff:ff

# 6: veth322e34b5@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP mode DEFAULT group default qlen 1000

# link/ether 8e:ea:40:c5:46:a7 brd ff:ff:ff:ff:ff:ff link-netns cni-62beabc5-97a9-e6cf-7f8f-bd4de413d33c

$ for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-$i ip -c link ; echo; done

# => >> node : k8s-w1 <<

# ...

# 4: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN mode DEFAULT group default

# link/ether c2:b2:62:af:c2:93 brd ff:ff:ff:ff:ff:ff

# 5: cni0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP mode DEFAULT group default qlen 1000

# link/ether 06:8a:4c:62:12:de brd ff:ff:ff:ff:ff:ff

# 6: vethd8fb7cb1@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP mode DEFAULT group default qlen 1000

# link/ether e2:3f:03:c3:be:a2 brd ff:ff:ff:ff:ff:ff link-netns cni-81f15ae4-4a35-bce7-f755-657f3b8e39ea

#

# >> node : k8s-w2 <<

# ...

# 4: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN mode DEFAULT group default

# link/ether 02:dd:56:d3:f6:3f brd ff:ff:ff:ff:ff:ff

# 5: cni0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP mode DEFAULT group default qlen 1000

# link/ether 06:57:05:39:42:57 brd ff:ff:ff:ff:ff:ff

# 6: veth390f8e9e@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP mode DEFAULT group default qlen 1000

# link/ether 5a:23:ff:ba:28:90 brd ff:ff:ff:ff:ff:ff link-netns cni-a27cec88-43c0-acf5-0bc5-f64e945bded3

# 7: veth357a49b9@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP mode DEFAULT group default qlen 1000

# link/ether b6:6c:69:43:29:a6 brd ff:ff:ff:ff:ff:ff link-netns cni-36af9b39-bcb8-ad52-beb5-4b67475b404f

# 8: vethf9bb5584@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP mode DEFAULT group default qlen 1000

# link/ether 4a:7c:ab:42:e7:ca brd ff:ff:ff:ff:ff:ff link-netns cni-29e132ee-4860-b74c-c4f5-d5d27b341b83

$ brctl show

# => bridge name bridge id STP enabled interfaces

# cni0 8000.1ea944a000e1 no veth322e34b5

$ for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-$i brctl show ; echo; done

# => >> node : k8s-w1 <<

# bridge name bridge id STP enabled interfaces

# cni0 8000.068a4c6212de no vethd8fb7cb1

#

# >> node : k8s-w2 <<

# bridge name bridge id STP enabled interfaces

# cni0 8000.065705394257 no veth357a49b9

# veth390f8e9e

# vethf9bb5584

$ ip -c route

# => default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

# 10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

# 10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 10.244.0.0/24 dev cni0 proto kernel scope link src 10.244.0.1

# 10.244.1.0/24 via 10.244.1.0 dev flannel.1 onlink

# 10.244.2.0/24 via 10.244.2.0 dev flannel.1 onlink

# 192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.100

$ for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-$i ip -c route ; echo; done

# => >> node : k8s-w1 <<

# default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

# 10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

# 10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 10.244.0.0/24 via 10.244.0.0 dev flannel.1 onlink

# 10.244.1.0/24 dev cni0 proto kernel scope link src 10.244.1.1

# 10.244.2.0/24 via 10.244.2.0 dev flannel.1 onlink

# 192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.101

#

# >> node : k8s-w2 <<

# default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

# 10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

# 10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 10.244.0.0/24 via 10.244.0.0 dev flannel.1 onlink

# 10.244.1.0/24 via 10.244.1.0 dev flannel.1 onlink

# 10.244.2.0/24 dev cni0 proto kernel scope link src 10.244.2.1

# 192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.102

# Remove the virtual interfaces (flannel.1, cni0)

$ ip link del flannel.1

$ ip link del cni0

$ for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-$i sudo ip link del flannel.1 ; echo; done

$ for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-$i sudo ip link del cni0 ; echo; done

# Verify the removal

$ ip -c link

# => ...

# 6: veth322e34b5@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP mode DEFAULT group default qlen 1000

# link/ether 8e:ea:40:c5:46:a7 brd ff:ff:ff:ff:ff:ff link-netns cni-62beabc5-97a9-e6cf-7f8f-bd4de413d33c

# 👉 flannel.1 and cni0 have been deleted.

$ for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-$i ip -c link ; echo; done

$ brctl show

# => (no output)

$ for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-$i brctl show ; echo; done

# => >> node : k8s-w1 <<

# >> node : k8s-w2 <<

$ ip -c route

# => default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

# 10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

# 10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.100

$ for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-$i ip -c route ; echo; done

# => >> node : k8s-w1 <<

# default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

# 10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

# 10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.101

#

# >> node : k8s-w2 <<

# default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

# 10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

# 10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.102

# 👉 With flannel.1 and cni0 deleted, the related routes are gone as well.

- Remove the existing kube-proxy.

#

$ kubectl -n kube-system delete ds kube-proxy

# => daemonset.apps "kube-proxy" deleted

$ kubectl -n kube-system delete cm kube-proxy

# => configmap "kube-proxy" deleted

# The deployed Pods keep their existing IPs.

$ kubectl get pod -A -owide

# => NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

# default curl-pod 1/1 Running 1 (143m ago) 3h1m 10.244.0.3 k8s-ctr <none> <none>

# default webpod-697b545f57-7j5vt 1/1 Running 1 (142m ago) 3h2m 10.244.1.3 k8s-w1 <none> <none>

# default webpod-697b545f57-sdv4l 1/1 Running 1 (142m ago) 3h2m 10.244.2.7 k8s-w2 <none> <none>

# kube-system coredns-674b8bbfcf-79mbb 0/1 CrashLoopBackOff 27 (4m ago) 2d17h 10.244.2.5 k8s-w2 <none> <none>

# kube-system coredns-674b8bbfcf-rtx95 0/1 CrashLoopBackOff 27 (4m26s ago) 2d17h 10.244.2.6 k8s-w2 <none> <none>

# kube-system etcd-k8s-ctr 1/1 Running 4 (143m ago) 2d17h 192.168.10.100 k8s-ctr <none> <none>

# kube-system kube-apiserver-k8s-ctr 1/1 Running 4 (143m ago) 2d17h 192.168.10.100 k8s-ctr <none> <none>

# kube-system kube-controller-manager-k8s-ctr 1/1 Running 4 (143m ago) 2d17h 192.168.10.100 k8s-ctr <none> <none>

# kube-system kube-scheduler-k8s-ctr 1/1 Running 4 (143m ago) 2d17h 192.168.10.100 k8s-ctr <none> <none>

#

$ kubectl exec -it curl-pod -- curl webpod

# => curl: (6) Could not resolve host: webpod

# command terminated with exit code 6

# 👉 With kube-proxy and the CNI removed, CoreDNS is in CrashLoopBackOff, so the webpod Service name cannot be resolved and the Service is unreachable.

#

$ iptables-save

# Run on each node with root permissions:

$ iptables-save | grep -v KUBE | grep -v FLANNEL | iptables-restore

$ iptables-save

$ sshpass -p 'vagrant' ssh vagrant@k8s-w1 "sudo iptables-save | grep -v KUBE | grep -v FLANNEL | sudo iptables-restore"

$ sshpass -p 'vagrant' ssh vagrant@k8s-w1 sudo iptables-save

$ sshpass -p 'vagrant' ssh vagrant@k8s-w2 "sudo iptables-save | grep -v KUBE | grep -v FLANNEL | sudo iptables-restore"

$ sshpass -p 'vagrant' ssh vagrant@k8s-w2 sudo iptables-save

#

$ kubectl get pod -owide

# => NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

# curl-pod 1/1 Running 1 (155m ago) 3h14m 10.244.0.3 k8s-ctr <none> <none>

# webpod-697b545f57-7j5vt 1/1 Running 1 (155m ago) 3h14m 10.244.1.3 k8s-w1 <none> <none>

# webpod-697b545f57-sdv4l 1/1 Running 1 (155m ago) 3h14m 10.244.2.7 k8s-w2 <none> <none>

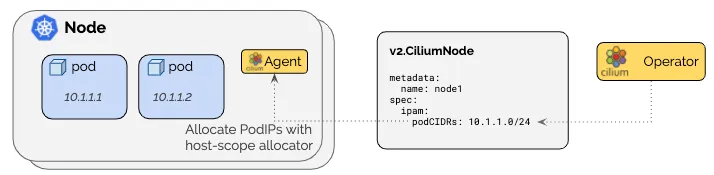
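The cleanup pipeline `iptables-save | grep -v KUBE | grep -v FLANNEL | iptables-restore` used above is purely textual: it drops every line that mentions KUBE or FLANNEL, which removes both the chain declarations (`:KUBE-...`) and every rule that lives in or jumps to those chains, while the table headers (`*nat`) and `COMMIT` lines survive, so `iptables-restore` still receives a valid file. The sketch below applies the same filter to a small, made-up iptables-save excerpt so the effect can be seen without touching a node; `strip_kube_flannel_rules` is a hypothetical name.

```shell
# Hypothetical helper: the same text filter as the cleanup pipeline above.
strip_kube_flannel_rules() {
  grep -v KUBE | grep -v FLANNEL
}

# Made-up iptables-save excerpt (nat table), for illustration only
sample=$(cat <<'EOF'
*nat
:PREROUTING ACCEPT [0:0]
:POSTROUTING ACCEPT [0:0]
:KUBE-SERVICES - [0:0]
:FLANNEL-POSTRTG - [0:0]
-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES
-A POSTROUTING -m comment --comment "flanneld masq" -j FLANNEL-POSTRTG
-A POSTROUTING -s 192.168.100.0/24 -j MASQUERADE
COMMIT
EOF
)

# Keeps the table header, the built-in chain declarations, the unrelated
# MASQUERADE rule and COMMIT; every KUBE/FLANNEL line is dropped.
printf '%s\n' "$sample" | strip_kube_flannel_rules
```

Because the match is textual, it also removes kubelet's own `KUBE-KUBELET-CANARY` chains; kubelet recreates them, which is why that chain shows up again in the iptables output after Cilium is installed.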

- Check the per-node Pod IPAM (PodCIDR) information.

# kube-controller-manager, started with --allocate-node-cidrs=true, assigns each node's PodCIDR automatically

$ kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.podCIDR}{"\n"}{end}'

# => k8s-ctr 10.244.0.0/24

# k8s-w1 10.244.1.0/24

# k8s-w2 10.244.2.0/24

$ kubectl get pod -owide

# => NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

# curl-pod 1/1 Running 1 (157m ago) 3h15m 10.244.0.3 k8s-ctr <none> <none>

# webpod-697b545f57-7j5vt 1/1 Running 1 (156m ago) 3h16m 10.244.1.3 k8s-w1 <none> <none>

# webpod-697b545f57-sdv4l 1/1 Running 1 (156m ago) 3h16m 10.244.2.7 k8s-w2 <none> <none>

#

$ kc describe pod -n kube-system kube-controller-manager-k8s-ctr

# => ...

# Command:

# kube-controller-manager

# --allocate-node-cidrs=true

# --cluster-cidr=10.244.0.0/16

# --service-cluster-ip-range=10.96.0.0/16

# ...
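kube-controller-manager carves the per-node PodCIDRs out of `--cluster-cidr=10.244.0.0/16`. Assuming the default IPv4 node mask size (`--node-cidr-mask-size-ipv4`, 24), a /16 holds 2^(24-16) = 256 node blocks of 256 addresses each. The sketch below (hypothetical helpers) computes the n-th /24 block. The real allocator picks free blocks on its own, so index order only happens to match the k8s-ctr, k8s-w1, k8s-w2 assignment observed here.

```shell
# Hypothetical helpers: IPv4 <-> integer conversion and per-node block calculation.
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

int_to_ip() {
  echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# nth_node_cidr CLUSTER_CIDR NODE_MASK INDEX -> the INDEX-th (0-based) /NODE_MASK block
nth_node_cidr() {
  local base=${1%/*} cluster_mask=${1#*/} node_mask=$2 index=$3
  local blocks=$(( 1 << (node_mask - cluster_mask) ))
  if [ "$index" -lt 0 ] || [ "$index" -ge "$blocks" ]; then
    echo "index out of range (0..$((blocks - 1)))" >&2
    return 1
  fi
  local start=$(( $(ip_to_int "$base") + index * (1 << (32 - node_mask)) ))
  echo "$(int_to_ip "$start")/$node_mask"
}

for i in 0 1 2; do
  nth_node_cidr 10.244.0.0/16 24 "$i"   # compare with the PodCIDRs of k8s-ctr, k8s-w1, k8s-w2
done
echo "node blocks in a /16 at /24: $(( 1 << (24 - 16) ))"
```

The same arithmetic applies to Cilium's cluster-pool IPAM installed next, where 172.20.0.0/16 is split into per-node /24 blocks.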

Installing Cilium CNI with Helm

- Related docs: Helm, Masquerading, ClusterScope, Routing

- Install with the Cilium 1.17.5 Helm chart (ArtifactHub).

# Install Cilium with Helm

$ helm repo add cilium https://helm.cilium.io/

# => "cilium" has been added to your repositories

# No device list is set (Cilium auto-detects all NICs) + bpf.masquerade=true + installNoConntrackIptablesRules=true

$ helm install cilium cilium/cilium --version 1.17.5 --namespace kube-system \
  --set k8sServiceHost=192.168.10.100 --set k8sServicePort=6443 \
  --set kubeProxyReplacement=true \
  --set routingMode=native \
  --set autoDirectNodeRoutes=true \
  --set ipam.mode="cluster-pool" \
  --set ipam.operator.clusterPoolIPv4PodCIDRList={"172.20.0.0/16"} \
  --set ipv4NativeRoutingCIDR=172.20.0.0/16 \
  --set endpointRoutes.enabled=true \
  --set installNoConntrackIptablesRules=true \
  --set bpf.masquerade=true \
  --set ipv6.enabled=false

# => NAME: cilium

# LAST DEPLOYED: Sat Jul 19 17:34:05 2025

# NAMESPACE: kube-system

# STATUS: deployed

# REVISION: 1

# TEST SUITE: None

# NOTES:

# You have successfully installed Cilium with Hubble.

#

# Your release version is 1.17.5.

# Verify

$ helm get values cilium -n kube-system

# => USER-SUPPLIED VALUES:

# autoDirectNodeRoutes: true

# bpf:

# masquerade: true

# endpointRoutes:

# enabled: true

# installNoConntrackIptablesRules: true

# ipam:

# mode: cluster-pool

# operator:

# clusterPoolIPv4PodCIDRList:

# - 172.20.0.0/16

# ipv4NativeRoutingCIDR: 172.20.0.0/16

# ipv6:

# enabled: false

# k8sServiceHost: 192.168.10.100

# k8sServicePort: 6443

# kubeProxyReplacement: true

# routingMode: native

$ helm list -A

# => NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

# cilium kube-system 1 2025-07-19 17:34:05.700270399 +0900 KST deployed cilium-1.17.5 1.17.5

$ kubectl get crd

# => No resources found

$ watch -d kubectl get pod -A

# => Every 2.0s: kubectl get pod -A k8s-ctr: Sat Jul 19 17:36:42 2025

#

# NAMESPACE NAME READY STATUS RESTARTS AGE

# default curl-pod 1/1 Running 1 (166m ago) 3h24m

# default webpod-697b545f57-7j5vt 1/1 Running 1 (165m ago) 3h25m

# default webpod-697b545f57-sdv4l 1/1 Running 1 (165m ago) 3h25m

# kube-system cilium-envoy-b2mn9 1/1 Running 0 2m36s

# kube-system cilium-envoy-dgdmn 1/1 Running 0 2m36s

# kube-system cilium-envoy-pjn95 1/1 Running 0 2m36s

# kube-system cilium-fl689 1/1 Running 0 2m36s

# kube-system cilium-mqnkn 1/1 Running 0 2m36s

# kube-system cilium-operator-865bc7f457-hpwvh 1/1 Running 0 2m36s

# kube-system cilium-operator-865bc7f457-v5k84 1/1 Running 0 2m36s

# kube-system cilium-zz9k4 1/1 Running 0 2m36s

# kube-system coredns-674b8bbfcf-7q52c 1/1 Running 0 52s

# kube-system coredns-674b8bbfcf-bvsfb 1/1 Running 0 66s

# kube-system etcd-k8s-ctr 1/1 Running 4 (166m ago) 2d18h

# kube-system kube-apiserver-k8s-ctr 1/1 Running 4 (166m ago) 2d18h

# kube-system kube-controller-manager-k8s-ctr 1/1 Running 4 (166m ago) 2d18h

# kube-system kube-scheduler-k8s-ctr 1/1 Running 4 (166m ago) 2d18h

# 👉 The Cilium Pods have been deployed, and the CoreDNS Pods are running normally again.

$ kubectl exec -it -n kube-system ds/cilium -c cilium-agent -- cilium-dbg status --verbose

# => KubeProxyReplacement: True [eth0 10.0.2.15 fd17:625c:f037:2:a00:27ff:fe71:19d8 fe80::a00:27ff:fe71:19d8, eth1 192.168.10.102 fe80::a00:27ff:fe79:58ac (Direct Routing)]

# ...

# Routing: Network: Native Host: BPF

# ...

# Masquerading: BPF [eth0, eth1] 172.20.0.0/16 [IPv4: Enabled, IPv6: Disabled]

# ...

# Check iptables on the node

$ iptables -t nat -S

# => -P PREROUTING ACCEPT

# -P INPUT ACCEPT

# -P OUTPUT ACCEPT

# -P POSTROUTING ACCEPT

# -N CILIUM_OUTPUT_nat

# -N CILIUM_POST_nat

# -N CILIUM_PRE_nat

# -N KUBE-KUBELET-CANARY

# -A PREROUTING -m comment --comment "cilium-feeder: CILIUM_PRE_nat" -j CILIUM_PRE_nat

# -A OUTPUT -m comment --comment "cilium-feeder: CILIUM_OUTPUT_nat" -j CILIUM_OUTPUT_nat

# -A POSTROUTING -m comment --comment "cilium-feeder: CILIUM_POST_nat" -j CILIUM_POST_nat

$ for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh vagrant@k8s-$i sudo iptables -t nat -S ; echo; done

$ iptables-save

$ for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh vagrant@k8s-$i sudo iptables-save ; echo; done

- Check the PodCIDR IPAM (cluster-scope mode) - ClusterScope

#

$ kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.podCIDR}{"\n"}{end}'

# => k8s-ctr 10.244.0.0/24

# k8s-w1 10.244.1.0/24

# k8s-w2 10.244.2.0/24

# Check the Pod IPs

$ kubectl get pod -owide

# => NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

# curl-pod 1/1 Running 1 (172m ago) 3h30m 10.244.0.3 k8s-ctr <none> <none>

# webpod-697b545f57-7j5vt 1/1 Running 1 (171m ago) 3h30m 10.244.1.3 k8s-w1 <none> <none>

# webpod-697b545f57-sdv4l 1/1 Running 1 (171m ago) 3h30m 10.244.2.7 k8s-w2 <none> <none>

#

$ kubectl get ciliumnodes

# => NAME CILIUMINTERNALIP INTERNALIP AGE

# k8s-ctr 172.20.2.68 192.168.10.100 6m11s

# k8s-w1 172.20.1.88 192.168.10.101 6m50s

# k8s-w2 172.20.0.235 192.168.10.102 7m8s

$ kubectl get ciliumnodes -o json | grep podCIDRs -A2

# => "podCIDRs": [

# "172.20.2.0/24"

# ],

# --

# "podCIDRs": [

# "172.20.1.0/24"

# ],

# --

# "podCIDRs": [

# "172.20.0.0/24"

# ],
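In cluster-pool (Cluster Scope) IPAM, the cilium-operator carves per-node podCIDRs out of `cluster-pool-ipv4-cidr` (172.20.0.0/16 in this lab) and records them in each CiliumNode. That is why these ranges differ from the 10.244.x.0/24 ranges on the Node objects. The capacity can be worked out with simple arithmetic. This sketch assumes a per-node mask of /24, which matches the podCIDRs above; the real setting is `cluster-pool-ipv4-mask-size`, not shown in this excerpt. The function name is hypothetical.

```shell
# pool_capacity POOL_PREFIX NODE_MASK
# Prints "<number of per-node blocks> <addresses per block>" as raw counts;
# no addresses are subtracted for reserved or special uses.
pool_capacity() {
  pool_prefix=$1   # e.g. 16 for 172.20.0.0/16
  node_mask=$2     # e.g. 24 for per-node /24 blocks (assumed)
  echo "$(( 1 << (node_mask - pool_prefix) )) $(( 1 << (32 - node_mask) ))"
}
```

`pool_capacity 16 24` prints `256 256`: room for 256 node blocks of 256 addresses each.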

#

$ kubectl rollout restart deployment webpod

# => deployment.apps/webpod restarted

$ kubectl get pod -owide

# => NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

# curl-pod 1/1 Running 1 (172m ago) 3h31m 10.244.0.3 k8s-ctr <none> <none>

# webpod-86f878c468-448pc 1/1 Running 0 11s 172.20.0.202 k8s-w2 <none> <none>

# webpod-86f878c468-ttbs2 1/1 Running 0 15s 172.20.1.123 k8s-w1 <none> <none>
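After the restart, the new webpod pods get addresses from their node's CiliumNode podCIDR. curl-pod still holds 10.244.0.3, an address from k8s-ctr's Kubernetes podCIDR, because it has not been recreated yet. Below is a minimal POSIX shell sketch for checking whether an IPv4 address falls inside a CIDR, for example a pod IP against the podCIDRs from `kubectl get ciliumnodes`. The function names are hypothetical.

```shell
# ip_to_int A.B.C.D -> the address as an integer (shell arithmetic is 64-bit).
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split "a.b.c.d" into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# ip_in_cidr IP CIDR -> exit 0 if IP lies inside CIDR (e.g. 172.20.0.0/24).
ip_in_cidr() {
  cidr_net=${2%/*}
  cidr_bits=${2#*/}
  if [ "$cidr_bits" -eq 0 ]; then
    cidr_mask=0
  else
    # Keep the top cidr_bits bits of a 32-bit mask.
    cidr_mask=$(( (0xFFFFFFFF << (32 - cidr_bits)) & 0xFFFFFFFF ))
  fi
  [ $(( $(ip_to_int "$1") & cidr_mask )) -eq $(( $(ip_to_int "$cidr_net") & cidr_mask )) ]
}
```

For example, `ip_in_cidr 172.20.0.202 172.20.0.0/24 && echo ok` confirms that the webpod on k8s-w2 sits in that node's CiliumNode podCIDR.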

# Redeploy curl-pod on the k8s-ctr node

$ kubectl delete pod curl-pod --grace-period=0

# => pod "curl-pod" deleted

$ cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: curl-pod
  labels:
    app: curl
spec:
  nodeName: k8s-ctr
  containers:
  - name: curl
    image: nicolaka/netshoot
    command: ["tail"]
    args: ["-f", "/dev/null"]
  terminationGracePeriodSeconds: 0
EOF

# => pod/curl-pod created

$ kubectl get pod -owide

# => NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

# curl-pod 1/1 Running 0 33s 172.20.2.15 k8s-ctr <none> <none>

# webpod-86f878c468-448pc 1/1 Running 0 66s 172.20.0.202 k8s-w2 <none> <none>

# webpod-86f878c468-ttbs2 1/1 Running 0 70s 172.20.1.123 k8s-w1 <none> <none>

$ kubectl get ciliumendpoints

# => NAME SECURITY IDENTITY ENDPOINT STATE IPV4 IPV6

# curl-pod 5180 ready 172.20.2.15

# webpod-86f878c468-448pc 34270 ready 172.20.0.202

# webpod-86f878c468-ttbs2 34270 ready 172.20.1.123

$ kubectl exec -it -n kube-system ds/cilium -c cilium-agent -- cilium-dbg endpoint list

# => ENDPOINT POLICY (ingress) POLICY (egress) IDENTITY LABELS (source:key[=value]) IPv6 IPv4 STATUS

# ENFORCEMENT ENFORCEMENT

# 60 Disabled Disabled 20407 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system 172.20.0.167 ready

# k8s:io.cilium.k8s.policy.cluster=default

# k8s:io.cilium.k8s.policy.serviceaccount=coredns

# k8s:io.kubernetes.pod.namespace=kube-system

# k8s:k8s-app=kube-dns

# 71 Disabled Disabled 4 reserved:health 172.20.0.114 ready

# 1368 Disabled Disabled 20407 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system 172.20.0.92 ready

# k8s:io.cilium.k8s.policy.cluster=default

# k8s:io.cilium.k8s.policy.serviceaccount=coredns

# k8s:io.kubernetes.pod.namespace=kube-system

# k8s:k8s-app=kube-dns

# 2533 Disabled Disabled 1 reserved:host ready

# 2605 Disabled Disabled 34270 k8s:app=webpod 172.20.0.202 ready

# k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default

# k8s:io.cilium.k8s.policy.cluster=default

# k8s:io.cilium.k8s.policy.serviceaccount=default

# k8s:io.kubernetes.pod.namespace=default
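Both webpod replicas share security identity 34270. Cilium assigns identities from a pod's security-relevant labels (here `app=webpod` plus the namespace and service-account labels). curl-pod, labeled `app=curl`, gets a different identity. Below is a sketch that groups the `kubectl get ciliumendpoints` output by identity. It assumes the column layout shown above, and the function name is hypothetical.

```shell
# group_by_identity: read `kubectl get ciliumendpoints` output on stdin and print
# "<identity> <endpoint count> <endpoint names...>", sorted by identity.
group_by_identity() {
  awk 'NR > 1 && NF >= 2 { names[$2] = names[$2] " " $1; count[$2]++ }
       END { for (id in count) print id, count[id] names[id] }' | sort -n
}
```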

# Verify connectivity

$ kubectl exec -it curl-pod -- curl webpod | grep Hostname

# => Hostname: webpod-86f878c468-448pc
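A single request reaches only one backend. To see the `webpod` service spread requests across both replicas, repeat the request and tally the `Hostname:` lines. Below is a sketch that assumes the whoami-style output above. The function name is hypothetical, and the loop in the comment needs the lab cluster.

```shell
# count_backends: tally "Hostname: <pod>" lines on stdin, printing "<hits> <pod>".
# Example input source (lab cluster; -it omitted because the output is piped):
#   for i in $(seq 1 20); do kubectl exec curl-pod -- curl -s webpod; done | count_backends
count_backends() {
  awk '$1 == "Hostname:" { hits[$2]++ } END { for (h in hits) print hits[h], h }' | sort -k2
}
```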

Verifying the Cilium Installation

- Let's install the Cilium CLI and use it to check the state of Cilium.

# Install the Cilium CLI

$ CILIUM_CLI_VERSION=$(curl -s https://raw.githubusercontent.com/cilium/cilium-cli/main/stable.txt)

$ CLI_ARCH=amd64

$ if [ "$(uname -m)" = "aarch64" ]; then CLI_ARCH=arm64; fi

$ curl -L --fail --remote-name-all https://github.com/cilium/cilium-cli/releases/download/${CILIUM_CLI_VERSION}/cilium-linux-${CLI_ARCH}.tar.gz >/dev/null 2>&1

$ tar xzvfC cilium-linux-${CLI_ARCH}.tar.gz /usr/local/bin

# => cilium

$ rm cilium-linux-${CLI_ARCH}.tar.gz
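The install snippet maps only `aarch64` to `arm64` and silently falls back to `amd64` for everything else. Below is a slightly stricter sketch that fails on any architecture it does not recognize. It maps only the two architectures the original snippet handles, and the function name is hypothetical. The official Cilium install instructions also download the matching `.sha256sum` file and verify the tarball with `sha256sum --check` before extracting it.

```shell
# cli_arch UNAME_M -> cilium-cli release architecture suffix (amd64 or arm64).
# Returns 1 for anything else instead of defaulting to amd64.
cli_arch() {
  case "$1" in
    x86_64|amd64)  echo amd64 ;;
    aarch64|arm64) echo arm64 ;;
    *) echo "unsupported architecture: $1" >&2; return 1 ;;
  esac
}

# Usage on the lab node:
#   CLI_ARCH=$(cli_arch "$(uname -m)") || exit 1
```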

# Check Cilium status

$ which cilium

# => /usr/local/bin/cilium

$ cilium status

# => /¯¯\

# /¯¯\__/¯¯\ Cilium: OK

# \__/¯¯\__/ Operator: OK

# /¯¯\__/¯¯\ Envoy DaemonSet: OK

# \__/¯¯\__/ Hubble Relay: disabled

# \__/ ClusterMesh: disabled

#

# DaemonSet cilium Desired: 3, Ready: 3/3, Available: 3/3

# DaemonSet cilium-envoy Desired: 3, Ready: 3/3, Available: 3/3

# Deployment cilium-operator Desired: 2, Ready: 2/2, Available: 2/2

# Containers: cilium Running: 3

# cilium-envoy Running: 3

# cilium-operator Running: 2

# clustermesh-apiserver

# hubble-relay

# Cluster Pods: 5/5 managed by Cilium

# Helm chart version: 1.17.5

# Image versions cilium quay.io/cilium/cilium:v1.17.5@sha256:baf8541723ee0b72d6c489c741c81a6fdc5228940d66cb76ef5ea2ce3c639ea6: 3

# cilium-envoy quay.io/cilium/cilium-envoy:v1.32.6-1749271279-0864395884b263913eac200ee2048fd985f8e626@sha256:9f69e290a7ea3d4edf9192acd81694089af048ae0d8a67fb63bd62dc1d72203e: 3

# cilium-operator quay.io/cilium/operator-generic:v1.17.5@sha256:f954c97eeb1b47ed67d08cc8fb4108fb829f869373cbb3e698a7f8ef1085b09e: 2
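`cilium status` reports rollout progress as `Ready: N/M` for each DaemonSet and Deployment. Below is a sketch that exits non-zero if any of those counters is not fully ready, assuming the text layout shown above. The function name is hypothetical. In practice, `cilium status --wait`, which blocks until Cilium reports ready, is usually the simpler option.

```shell
# all_ready: exit 0 only if every "Ready: N/M" counter on stdin has N == M.
# Lines that are not fully ready are echoed to stderr.
all_ready() {
  awk '
    {
      for (i = 1; i < NF; i++) {
        if ($i == "Ready:") {
          split($(i + 1), rm, "/")       # "3/3," -> rm[1]="3", rm[2]="3,"
          if (rm[1] + 0 != rm[2] + 0) { print "not ready: " $0 > "/dev/stderr"; bad = 1 }
        }
      }
    }
    END { exit bad }'
}
```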

$ cilium config view

# => ...

# cluster-pool-ipv4-cidr 172.20.0.0/16

# default-lb-service-ipam lbipam

# ipam cluster-pool

# ipam-cilium-node-update-rate 15s

# iptables-random-fully false

# ipv4-native-routing-cidr 172.20.0.0/16

# kube-proxy-replacement true

# ...
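`cilium config view` prints one `key value` pair per line, so a one-line awk filter can read a single setting such as `ipam` or `ipv4-native-routing-cidr`. Below is a sketch. The function name is hypothetical. A key present with no value (like `debug-verbose` in this lab) prints an empty line.

```shell
# config_get KEY  (reads `cilium config view` output on stdin)
# Prints KEY's value; returns 1 if KEY is not present at all.
config_get() {
  awk -v key="$1" '
    $1 == key { $1 = ""; sub(/^ +/, ""); print; found = 1; exit }
    END { exit !found }'
}
```

Example: `cilium config view | config_get kube-proxy-replacement`.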

$ kubectl get cm -n kube-system cilium-config -o json | jq

#

$ cilium config set debug true && watch kubectl get pod -A

# => ✨ Patching ConfigMap cilium-config with debug=true...

# ♻️ Restarted Cilium pods

$ cilium config view | grep -i debug

# => debug true

# debug-verbose

# cilium-dbg: the debug CLI that ships inside the cilium-agent container (separate from the cilium CLI installed above)

$ kubectl exec -n kube-system -c cilium-agent -it ds/cilium -- cilium-dbg config

# => ##### Read-write configurations #####

# ConntrackAccounting : Disabled

# ConntrackLocal : Disabled

# Debug : Disabled

# DebugLB : Disabled

# DebugPolicy : Enabled

# DropNotification : Enabled

# MonitorAggregationLevel : Medium

# PolicyAccounting : Enabled

# PolicyAuditMode : Disabled

# PolicyTracing : Disabled

# PolicyVerdictNotification : Enabled

# SourceIPVerification : Enabled

# TraceNotification : Enabled

# MonitorNumPages : 64

# PolicyEnforcement : default
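`cilium-dbg config` lists the agent's runtime options as `Name : Value`. Below is a sketch that prints only the options currently reported as `Enabled`, assuming that layout. The function name is hypothetical.

```shell
# enabled_options: print names of options reported as "Enabled" by `cilium-dbg config`.
enabled_options() {
  awk '$2 == ":" && $3 == "Enabled" { print $1 }'
}
```

Example: `kubectl exec -n kube-system -c cilium-agent ds/cilium -- cilium-dbg config | enabled_options`.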

$ kubectl exec -n kube-system -c cilium-agent -it ds/cilium -- cilium-dbg status --verbose

# => ...

# KubeProxyReplacement: True [eth0 10.0.2.15 fd17:625c:f037:2:a00:27ff:fe71:19d8 fe80::a00:27ff:fe71:19d8, eth1 192.168.10.102 fe80::a00:27ff:fe79:58ac (Direct Routing)]

# Routing: Network: Native Host: BPF

# Attach Mode: TCX

# Device Mode: veth

# ...

# KubeProxyReplacement Details:

# Status: True

# Socket LB: Enabled

# Socket LB Tracing: Enabled

# Socket LB Coverage: Full

# Devices: eth0 10.0.2.15 fd17:625c:f037:2:a00:27ff:fe71:19d8 fe80::a00:27ff:fe71:19d8, eth1 192.168.10.102 fe80::a00:27ff:fe79:58ac (Direct Routing)

# Mode: SNAT

# Backend Selection: Random

# Session Affinity: Enabled

# Graceful Termination: Enabled

# NAT46/64 Support: Disabled

# XDP Acceleration: Disabled

# Services:

# - ClusterIP: Enabled

# - NodePort: Enabled (Range: 30000-32767)

# - LoadBalancer: Enabled

# - externalIPs: Enabled

# - HostPort: Enabled

# ...
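With `kube-proxy-replacement true`, the `Services:` block above shows which service types the eBPF datapath handles. Below is a sketch that lists each service type with its state, assuming the `- Type: State` layout shown. The function name is hypothetical. Other `- ` lines elsewhere in the full verbose output could also match, so feed it just this section if needed.

```shell
# service_types: print "<service type> <state>" for each "- Type: State" line on stdin.
service_types() {
  awk '$1 == "-" && $2 ~ /:$/ { t = $2; sub(/:$/, "", t); print t, $3 }'
}
```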

- Let's look at the basic network interfaces Cilium creates, such as cilium_host, cilium_net, and the health-check endpoint interface (lxc_health).

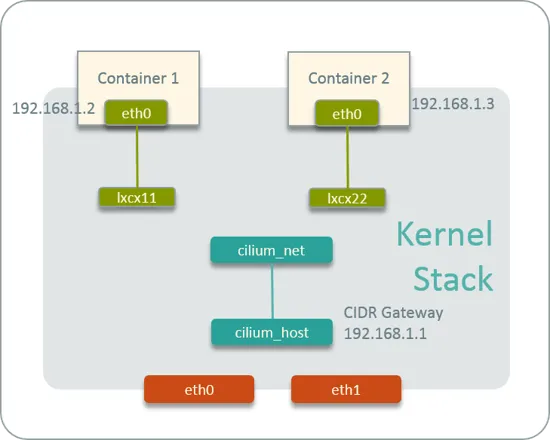

Source: https://arthurchiao.art/blog/ctrip-network-arch-evolution/
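When reading the `ip -c addr` output, note that `cilium_net@cilium_host` and `cilium_host@cilium_net` are the two ends of one veth pair. `cilium_host` carries 172.20.2.68, the CILIUMINTERNALIP shown for k8s-ctr by `kubectl get ciliumnodes`. Each `lxc*@ifN` interface is the host-side end of a pod's veth pair, whose peer (interface index N) lives in the pod's network namespace. `lxc_health` belongs to Cilium's health-check endpoint. Below is a sketch that lists these interfaces with their peers, assuming the `ip addr` layout shown. The function name is hypothetical.

```shell
# cilium_ifaces: from `ip addr` (or `ip link`) output, print "<interface> <peer>"
# for cilium_* and lxc* interfaces; "<peer>" is the part after "@", or "-" if none.
cilium_ifaces() {
  awk '/^[0-9]+: / {
    name = $2
    sub(/:$/, "", name)                 # "lxc_health@if13:" -> "lxc_health@if13"
    n = split(name, part, "@")
    if (part[1] ~ /^(cilium_|lxc)/) print part[1], (n > 1 ? part[2] : "-")
  }'
}
```

Example: `ip addr | cilium_ifaces`.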

#

$ ip -c addr

# => ...

# 7: cilium_net@cilium_host: <BROADCAST,MULTICAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

# link/ether 4e:28:ea:3e:83:e0 brd ff:ff:ff:ff:ff:ff

# inet6 fe80::4c28:eaff:fe3e:83e0/64 scope link

# valid_lft forever preferred_lft forever

# 8: cilium_host@cilium_net: <BROADCAST,MULTICAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

# link/ether 22:ad:62:34:21:8e brd ff:ff:ff:ff:ff:ff

# inet 172.20.2.68/32 scope global cilium_host

# valid_lft forever preferred_lft forever

# inet6 fe80::20ad:62ff:fe34:218e/64 scope link

# valid_lft forever preferred_lft forever

# 12: lxcc4a3ffff7931@if11: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

# link/ether 76:62:d3:8d:58:1f brd ff:ff:ff:ff:ff:ff link-netns cni-ca74ac02-08e1-9092-74ad-f60026576c19

# inet6 fe80::7462:d3ff:fe8d:581f/64 scope link

# valid_lft forever preferred_lft forever

# 14: lxc_health@if13: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

# link/ether ca:67:c2:a6:88:89 brd ff:ff:ff:ff:ff:ff link-netnsid 2

# inet6 fe80::c867:c2ff:fea6:8889/64 scope link

# valid_lft forever preferred_lft forever