[Cilium] BGP Control Plane & ClusterMesh

Introduction

In this post we look at routing with BGP, and at how Cilium behaves in a multi-cluster environment built with kind and Cluster Mesh.

Lab Environment Setup

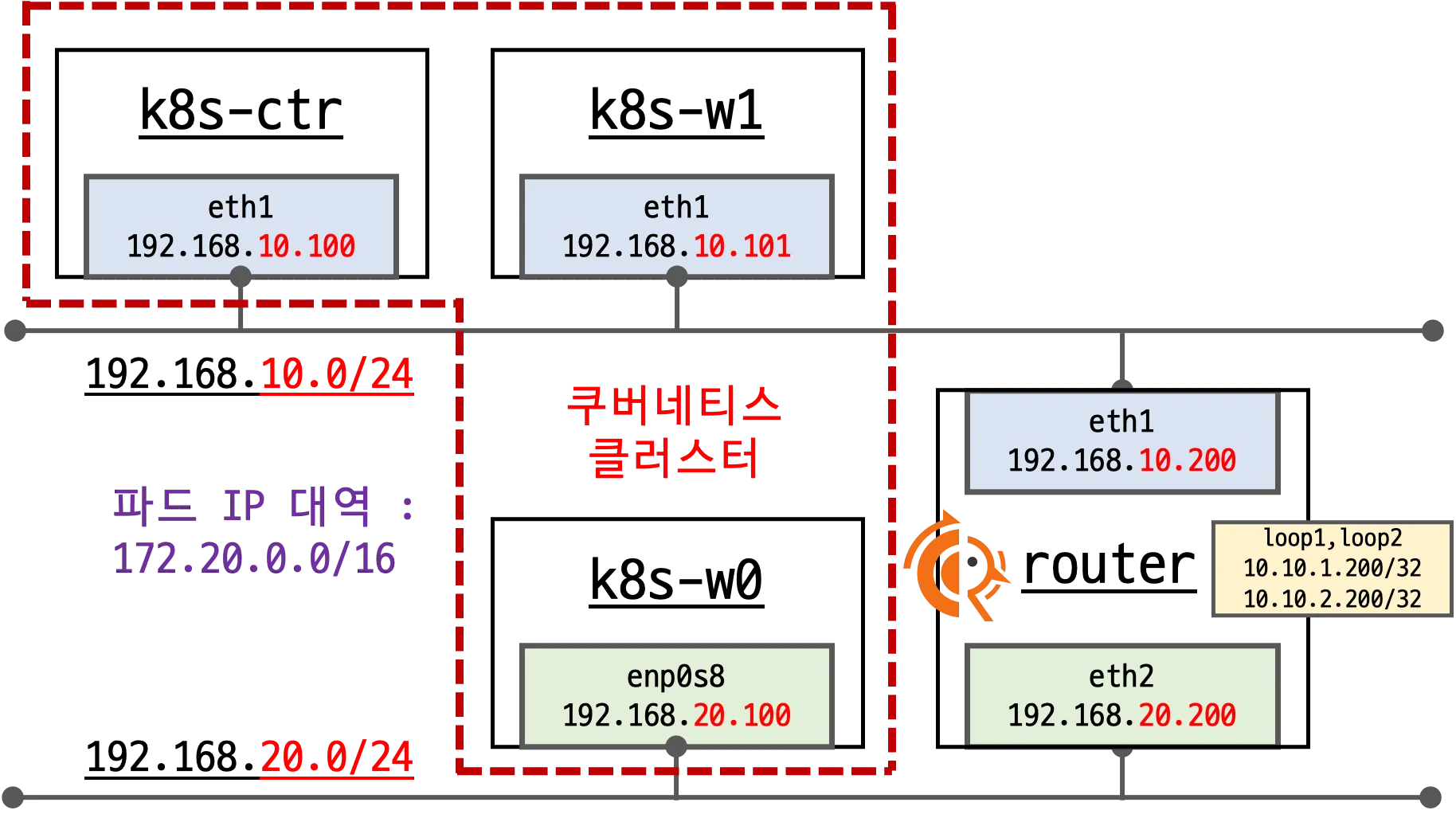

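- As in the previous lab, k8s-w0 sits on a separate network, and we verify communication between k8s-w0 and the k8s-ctr/w1 nodes through the router. This time, however, FRR is installed on the router so that we can verify connectivity over BGP routing.

- Default VMs: k8s-ctr, k8s-w1, k8s-w0, router (FRR routing)

- router: routes between the 192.168.10.0/24 and 192.168.20.0/24 ranges. It is not joined to the Kubernetes cluster, and FRR is installed on it for BGP.

- k8s-w0: placed on a different network range from the k8s-ctr/w1 nodes.

- The VMs are deployed with the static routes required for the lab already configured.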
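To make the topology concrete, here is a minimal bash sketch that checks whether two node addresses share a /24. The helper names `ip_to_int` and `same_subnet` are hypothetical and exist only for this illustration; the addresses are the ones used in this lab.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Return 0 (true) when both addresses fall inside the same prefix of the given length.
same_subnet() {
  local ip1 ip2 prefix mask
  ip1=$(ip_to_int "$1")
  ip2=$(ip_to_int "$2")
  prefix=$3
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( (ip1 & mask) == (ip2 & mask) ))
}

# Compare k8s-ctr (192.168.10.100) with k8s-w1 and k8s-w0.
for node in 192.168.10.101 192.168.20.100; do
  if same_subnet 192.168.10.100 "$node" 24; then
    echo "$node: same L2 segment as k8s-ctr"
  else
    echo "$node: reached through the router"
  fi
done
```

k8s-w1 is reported as sharing the segment with k8s-ctr, while k8s-w0 is only reachable through the router, which is why the static routes (and later BGP) matter for that node.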

Lab deployment files

- Vagrantfile: defines the virtual machines and their boot-time provisioning.

# Variables
K8SV = '1.33.2-1.1' # Kubernetes Version : apt list -a kubelet , ex) 1.32.5-1.1
CONTAINERDV = '1.7.27-1' # Containerd Version : apt list -a containerd.io , ex) 1.6.33-1
CILIUMV = '1.18.0' # Cilium CNI Version : https://github.com/cilium/cilium/tags
N = 1 # max number of worker nodes

# Base Image https://portal.cloud.hashicorp.com/vagrant/discover/bento/ubuntu-24.04
BOX_IMAGE = "bento/ubuntu-24.04"
BOX_VERSION = "202508.03.0"

Vagrant.configure("2") do |config|
  #-ControlPlane Node
  config.vm.define "k8s-ctr" do |subconfig|
    subconfig.vm.box = BOX_IMAGE
    subconfig.vm.box_version = BOX_VERSION
    subconfig.vm.provider "virtualbox" do |vb|
      vb.customize ["modifyvm", :id, "--groups", "/Cilium-Lab"]
      vb.customize ["modifyvm", :id, "--nicpromisc2", "allow-all"]
      vb.name = "k8s-ctr"
      vb.cpus = 2
      vb.memory = 2560
      vb.linked_clone = true
    end
    subconfig.vm.host_name = "k8s-ctr"
    subconfig.vm.network "private_network", ip: "192.168.10.100"
    subconfig.vm.network "forwarded_port", guest: 22, host: 60000, auto_correct: true, id: "ssh"
    subconfig.vm.synced_folder "./", "/vagrant", disabled: true
    subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/5w/init_cfg.sh", args: [ K8SV, CONTAINERDV ]
    subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/5w/k8s-ctr.sh", args: [ N, CILIUMV, K8SV ]
    subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/5w/route-add1.sh"
  end

  #-Worker Nodes Subnet1
  (1..N).each do |i|
    config.vm.define "k8s-w#{i}" do |subconfig|
      subconfig.vm.box = BOX_IMAGE
      subconfig.vm.box_version = BOX_VERSION
      subconfig.vm.provider "virtualbox" do |vb|
        vb.customize ["modifyvm", :id, "--groups", "/Cilium-Lab"]
        vb.customize ["modifyvm", :id, "--nicpromisc2", "allow-all"]
        vb.name = "k8s-w#{i}"
        vb.cpus = 2
        vb.memory = 1536
        vb.linked_clone = true
      end
      subconfig.vm.host_name = "k8s-w#{i}"
      subconfig.vm.network "private_network", ip: "192.168.10.10#{i}"
      subconfig.vm.network "forwarded_port", guest: 22, host: "6000#{i}", auto_correct: true, id: "ssh"
      subconfig.vm.synced_folder "./", "/vagrant", disabled: true
      subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/5w/init_cfg.sh", args: [ K8SV, CONTAINERDV]
      subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/5w/k8s-w.sh"
      subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/5w/route-add1.sh"
    end
  end

  #-Router Node
  config.vm.define "router" do |subconfig|
    subconfig.vm.box = BOX_IMAGE
    subconfig.vm.box_version = BOX_VERSION
    subconfig.vm.provider "virtualbox" do |vb|
      vb.customize ["modifyvm", :id, "--groups", "/Cilium-Lab"]
      vb.name = "router"
      vb.cpus = 1
      vb.memory = 768
      vb.linked_clone = true
    end
    subconfig.vm.host_name = "router"
    subconfig.vm.network "private_network", ip: "192.168.10.200"
    subconfig.vm.network "forwarded_port", guest: 22, host: 60009, auto_correct: true, id: "ssh"
    subconfig.vm.network "private_network", ip: "192.168.20.200", auto_config: false
    subconfig.vm.synced_folder "./", "/vagrant", disabled: true
    subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/5w/router.sh"
  end

  #-Worker Nodes Subnet2
  config.vm.define "k8s-w0" do |subconfig|
    subconfig.vm.box = BOX_IMAGE
    subconfig.vm.box_version = BOX_VERSION
    subconfig.vm.provider "virtualbox" do |vb|
      vb.customize ["modifyvm", :id, "--groups", "/Cilium-Lab"]
      vb.customize ["modifyvm", :id, "--nicpromisc2", "allow-all"]
      vb.name = "k8s-w0"
      vb.cpus = 2
      vb.memory = 1536
      vb.linked_clone = true
    end
    subconfig.vm.host_name = "k8s-w0"
    subconfig.vm.network "private_network", ip: "192.168.20.100"
    subconfig.vm.network "forwarded_port", guest: 22, host: 60010, auto_correct: true, id: "ssh"
    subconfig.vm.synced_folder "./", "/vagrant", disabled: true
    subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/5w/init_cfg.sh", args: [ K8SV, CONTAINERDV]
    subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/5w/k8s-w.sh"
    subconfig.vm.provision "shell", path: "https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/5w/route-add2.sh"
  end
end

- init_cfg.sh: script that performs the initial node setup based on the args it receives.

#!/usr/bin/env bash

echo ">>>> Initial Config Start <<<<"

echo "[TASK 1] Setting Profile & Bashrc"
echo 'alias vi=vim' >> /etc/profile
echo "sudo su -" >> /home/vagrant/.bashrc
ln -sf /usr/share/zoneinfo/Asia/Seoul /etc/localtime # Change Timezone

echo "[TASK 2] Disable AppArmor"
systemctl stop ufw && systemctl disable ufw >/dev/null 2>&1
systemctl stop apparmor && systemctl disable apparmor >/dev/null 2>&1

echo "[TASK 3] Disable and turn off SWAP"
swapoff -a && sed -i '/swap/s/^/#/' /etc/fstab

echo "[TASK 4] Install Packages"
apt update -qq >/dev/null 2>&1
apt-get install apt-transport-https ca-certificates curl gpg -y -qq >/dev/null 2>&1

# Download the public signing key for the Kubernetes package repositories.
mkdir -p -m 755 /etc/apt/keyrings
K8SMMV=$(echo $1 | sed -En 's/^([0-9]+\.[0-9]+)\..*/\1/p')
curl -fsSL https://pkgs.k8s.io/core:/stable:/v$K8SMMV/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v$K8SMMV/deb/ /" >> /etc/apt/sources.list.d/kubernetes.list
curl -fsSL https://download.docker.com/linux/ubuntu/gpg -o /etc/apt/keyrings/docker.asc
echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu $(. /etc/os-release && echo "$VERSION_CODENAME") stable" | tee /etc/apt/sources.list.d/docker.list > /dev/null

# packets traversing the bridge are processed by iptables for filtering
echo 1 > /proc/sys/net/ipv4/ip_forward
echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.d/k8s.conf

# enable br_netfilter for iptables
modprobe br_netfilter
modprobe overlay
echo "br_netfilter" >> /etc/modules-load.d/k8s.conf
echo "overlay" >> /etc/modules-load.d/k8s.conf

echo "[TASK 5] Install Kubernetes components (kubeadm, kubelet and kubectl)"
# Update the apt package index, install kubelet, kubeadm and kubectl, and pin their version
apt update >/dev/null 2>&1

# apt list -a kubelet ; apt list -a containerd.io
apt-get install -y kubelet=$1 kubectl=$1 kubeadm=$1 containerd.io=$2 >/dev/null 2>&1
apt-mark hold kubelet kubeadm kubectl >/dev/null 2>&1

# containerd configure to default and cgroup managed by systemd
containerd config default > /etc/containerd/config.toml
sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml

# avoid WARN&ERRO(default endpoints) when crictl run
cat <<EOF > /etc/crictl.yaml
runtime-endpoint: unix:///run/containerd/containerd.sock
image-endpoint: unix:///run/containerd/containerd.sock
EOF

# ready to install for k8s
systemctl restart containerd && systemctl enable containerd
systemctl enable --now kubelet

echo "[TASK 6] Install Packages & Helm"
export DEBIAN_FRONTEND=noninteractive
apt-get install -y bridge-utils sshpass net-tools conntrack ngrep tcpdump ipset arping wireguard jq yq tree bash-completion unzip kubecolor termshark >/dev/null 2>&1
curl -s https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3 | bash >/dev/null 2>&1

echo ">>>> Initial Config End <<<<"

- k8s-ctr.sh: script that sets up the Kubernetes control-plane node with kubeadm init, installs the Cilium CNI, and adds convenience settings (the k and kc aliases). It also installs the local-path StorageClass and metrics-server.

#!/usr/bin/env bash

echo ">>>> K8S Controlplane config Start <<<<"

echo "[TASK 1] Initial Kubernetes"
curl --silent -o /root/kubeadm-init-ctr-config.yaml https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/kubeadm-init-ctr-config.yaml
K8SMMV=$(echo $3 | sed -En 's/^([0-9]+\.[0-9]+\.[0-9]+).*/\1/p')
sed -i "s/K8S_VERSION_PLACEHOLDER/v${K8SMMV}/g" /root/kubeadm-init-ctr-config.yaml
kubeadm init --config="/root/kubeadm-init-ctr-config.yaml" >/dev/null 2>&1

echo "[TASK 2] Setting kube config file"
mkdir -p /root/.kube
cp -i /etc/kubernetes/admin.conf /root/.kube/config
chown $(id -u):$(id -g) /root/.kube/config

echo "[TASK 3] Source the completion"
echo 'source <(kubectl completion bash)' >> /etc/profile
echo 'source <(kubeadm completion bash)' >> /etc/profile

echo "[TASK 4] Alias kubectl to k"
echo 'alias k=kubectl' >> /etc/profile
echo 'alias kc=kubecolor' >> /etc/profile
echo 'complete -F __start_kubectl k' >> /etc/profile

echo "[TASK 5] Install Kubectx & Kubens"
git clone https://github.com/ahmetb/kubectx /opt/kubectx >/dev/null 2>&1
ln -s /opt/kubectx/kubens /usr/local/bin/kubens
ln -s /opt/kubectx/kubectx /usr/local/bin/kubectx

echo "[TASK 6] Install Kubeps & Setting PS1"
git clone https://github.com/jonmosco/kube-ps1.git /root/kube-ps1 >/dev/null 2>&1
cat <<"EOT" >> /root/.bash_profile
source /root/kube-ps1/kube-ps1.sh
KUBE_PS1_SYMBOL_ENABLE=true
function get_cluster_short() {
  echo "$1" | cut -d . -f1
}
KUBE_PS1_CLUSTER_FUNCTION=get_cluster_short
KUBE_PS1_SUFFIX=') '
PS1='$(kube_ps1)'$PS1
EOT
kubectl config rename-context "kubernetes-admin@kubernetes" "HomeLab" >/dev/null 2>&1

echo "[TASK 7] Install Cilium CNI"
NODEIP=$(ip -4 addr show eth1 | grep -oP '(?<=inet\s)\d+(\.\d+){3}')
helm repo add cilium https://helm.cilium.io/ >/dev/null 2>&1
helm repo update >/dev/null 2>&1
helm install cilium cilium/cilium --version $2 --namespace kube-system \
--set k8sServiceHost=192.168.10.100 --set k8sServicePort=6443 \
--set ipam.mode="cluster-pool" --set ipam.operator.clusterPoolIPv4PodCIDRList={"172.20.0.0/16"} --set ipv4NativeRoutingCIDR=172.20.0.0/16 \
--set routingMode=native --set autoDirectNodeRoutes=false --set bgpControlPlane.enabled=true \
--set kubeProxyReplacement=true --set bpf.masquerade=true --set installNoConntrackIptablesRules=true \
--set endpointHealthChecking.enabled=false --set healthChecking=false \
--set hubble.enabled=true --set hubble.relay.enabled=true --set hubble.ui.enabled=true \
--set hubble.ui.service.type=NodePort --set hubble.ui.service.nodePort=30003 \
--set prometheus.enabled=true --set operator.prometheus.enabled=true --set hubble.metrics.enableOpenMetrics=true \
--set hubble.metrics.enabled="{dns,drop,tcp,flow,port-distribution,icmp,httpV2:exemplars=true;labelsContext=source_ip\,source_namespace\,source_workload\,destination_ip\,destination_namespace\,destination_workload\,traffic_direction}" \
--set operator.replicas=1 --set debug.enabled=true >/dev/null 2>&1

echo "[TASK 8] Install Cilium / Hubble CLI"
CILIUM_CLI_VERSION=$(curl -s https://raw.githubusercontent.com/cilium/cilium-cli/main/stable.txt)
CLI_ARCH=amd64
if [ "$(uname -m)" = "aarch64" ]; then CLI_ARCH=arm64; fi
curl -L --fail --remote-name-all https://github.com/cilium/cilium-cli/releases/download/${CILIUM_CLI_VERSION}/cilium-linux-${CLI_ARCH}.tar.gz >/dev/null 2>&1
tar xzvfC cilium-linux-${CLI_ARCH}.tar.gz /usr/local/bin
rm cilium-linux-${CLI_ARCH}.tar.gz

HUBBLE_VERSION=$(curl -s https://raw.githubusercontent.com/cilium/hubble/master/stable.txt)
HUBBLE_ARCH=amd64
if [ "$(uname -m)" = "aarch64" ]; then HUBBLE_ARCH=arm64; fi
curl -L --fail --remote-name-all https://github.com/cilium/hubble/releases/download/$HUBBLE_VERSION/hubble-linux-${HUBBLE_ARCH}.tar.gz >/dev/null 2>&1
tar xzvfC hubble-linux-${HUBBLE_ARCH}.tar.gz /usr/local/bin
rm hubble-linux-${HUBBLE_ARCH}.tar.gz

echo "[TASK 9] Remove node taint"
kubectl taint nodes k8s-ctr node-role.kubernetes.io/control-plane-

echo "[TASK 10] local DNS with hosts file"
echo "192.168.10.100 k8s-ctr" >> /etc/hosts
echo "192.168.10.200 router" >> /etc/hosts
echo "192.168.20.100 k8s-w0" >> /etc/hosts
for (( i=1; i<=$1; i++ )); do echo "192.168.10.10$i k8s-w$i" >> /etc/hosts; done

echo "[TASK 11] Dynamically provisioning persistent local storage with Kubernetes"
kubectl apply -f https://raw.githubusercontent.com/rancher/local-path-provisioner/v0.0.31/deploy/local-path-storage.yaml >/dev/null 2>&1
kubectl patch storageclass local-path -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}' >/dev/null 2>&1

# echo "[TASK 12] Install Prometheus & Grafana"
# kubectl apply -f https://raw.githubusercontent.com/cilium/cilium/1.18.0/examples/kubernetes/addons/prometheus/monitoring-example.yaml >/dev/null 2>&1
# kubectl patch svc -n cilium-monitoring prometheus -p '{"spec": {"type": "NodePort", "ports": [{"port": 9090, "targetPort": 9090, "nodePort": 30001}]}}' >/dev/null 2>&1
# kubectl patch svc -n cilium-monitoring grafana -p '{"spec": {"type": "NodePort", "ports": [{"port": 3000, "targetPort": 3000, "nodePort": 30002}]}}' >/dev/null 2>&1

# echo "[TASK 12] Install Prometheus Stack"
# helm repo add prometheus-community https://prometheus-community.github.io/helm-charts >/dev/null 2>&1
# cat <<EOT > monitor-values.yaml
# prometheus:
#   prometheusSpec:
#     scrapeInterval: "15s"
#     evaluationInterval: "15s"
#   service:
#     type: NodePort
#     nodePort: 30001
# grafana:
#   defaultDashboardsTimezone: Asia/Seoul
#   adminPassword: prom-operator
#   service:
#     type: NodePort
#     nodePort: 30002
# alertmanager:
#   enabled: false
# defaultRules:
#   create: false
# prometheus-windows-exporter:
#   prometheus:
#     monitor:
#       enabled: false
# EOT
# helm install kube-prometheus-stack prometheus-community/kube-prometheus-stack --version 75.15.1 \
#   -f monitor-values.yaml --create-namespace --namespace monitoring >/dev/null 2>&1

echo "[TASK 13] Install Metrics-server"
helm repo add metrics-server https://kubernetes-sigs.github.io/metrics-server/ >/dev/null 2>&1
helm upgrade --install metrics-server metrics-server/metrics-server --set 'args[0]=--kubelet-insecure-tls' -n kube-system >/dev/null 2>&1

echo "[TASK 14] Install k9s"
CLI_ARCH=amd64
if [ "$(uname -m)" = "aarch64" ]; then CLI_ARCH=arm64; fi
wget https://github.com/derailed/k9s/releases/latest/download/k9s_linux_${CLI_ARCH}.deb -O /tmp/k9s_linux_${CLI_ARCH}.deb >/dev/null 2>&1
apt install /tmp/k9s_linux_${CLI_ARCH}.deb >/dev/null 2>&1

echo ">>>> K8S Controlplane Config End <<<<"

kubeadm-init-ctr-config.yaml

apiVersion: kubeadm.k8s.io/v1beta4
kind: InitConfiguration
bootstrapTokens:
- token: "123456.1234567890123456"
  ttl: "0s"
  usages:
  - signing
  - authentication
localAPIEndpoint:
  advertiseAddress: "192.168.10.100"
nodeRegistration:
  kubeletExtraArgs:
    - name: node-ip
      value: "192.168.10.100"
  criSocket: "unix:///run/containerd/containerd.sock"
---
apiVersion: kubeadm.k8s.io/v1beta4
kind: ClusterConfiguration
kubernetesVersion: "K8S_VERSION_PLACEHOLDER"
networking:
  podSubnet: "10.244.0.0/16"
  serviceSubnet: "10.96.0.0/16"

- k8s-w.sh: script that configures a Kubernetes worker node and runs kubeadm join.

#!/usr/bin/env bash

echo ">>>> K8S Node config Start <<<<"

echo "[TASK 1] K8S Controlplane Join"
curl --silent -o /root/kubeadm-join-worker-config.yaml https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/2w/kubeadm-join-worker-config.yaml
NODEIP=$(ip -4 addr show eth1 | grep -oP '(?<=inet\s)\d+(\.\d+){3}')
sed -i "s/NODE_IP_PLACEHOLDER/${NODEIP}/g" /root/kubeadm-join-worker-config.yaml
kubeadm join --config="/root/kubeadm-join-worker-config.yaml" > /dev/null 2>&1

echo ">>>> K8S Node config End <<<<"

kubeadm-join-worker-config.yaml

apiVersion: kubeadm.k8s.io/v1beta4
kind: JoinConfiguration
discovery:
  bootstrapToken:
    token: "123456.1234567890123456"
    apiServerEndpoint: "192.168.10.100:6443"
    unsafeSkipCAVerification: true
nodeRegistration:
  criSocket: "unix:///run/containerd/containerd.sock"
  kubeletExtraArgs:
    - name: node-ip
      value: "NODE_IP_PLACEHOLDER"

- route-add1.sh: script that adds the static route k8s-ctr and k8s-w1 need to reach the 192.168.20.0/24 network.

#!/usr/bin/env bash

echo ">>>> Route Add Config Start <<<<"

chmod 600 /etc/netplan/01-netcfg.yaml
chmod 600 /etc/netplan/50-vagrant.yaml

cat <<EOT>> /etc/netplan/50-vagrant.yaml
      routes:
      - to: 192.168.20.0/24
        via: 192.168.10.200
      # - to: 172.20.0.0/16
      #   via: 192.168.10.200
EOT

netplan apply

echo ">>>> Route Add Config End <<<<"

- route-add2.sh: script that adds the static route k8s-w0 needs to reach the 192.168.10.0/24 network.

#!/usr/bin/env bash

echo ">>>> Route Add Config Start <<<<"

chmod 600 /etc/netplan/01-netcfg.yaml
chmod 600 /etc/netplan/50-vagrant.yaml

cat <<EOT>> /etc/netplan/50-vagrant.yaml
      routes:
      - to: 192.168.10.0/24
        via: 192.168.20.200
      # - to: 172.20.0.0/16
      #   via: 192.168.20.200
EOT

netplan apply

echo ">>>> Route Add Config End <<<<"

- router.sh: sets up the router (FRR for BGP) and a simple web server.

#!/usr/bin/env bash

echo ">>>> Initial Config Start <<<<"

echo "[TASK 0] Setting eth2"
chmod 600 /etc/netplan/01-netcfg.yaml
chmod 600 /etc/netplan/50-vagrant.yaml

cat << EOT >> /etc/netplan/50-vagrant.yaml
    eth2:
      addresses:
      - 192.168.20.200/24
EOT

netplan apply

echo "[TASK 1] Setting Profile & Bashrc"
echo 'alias vi=vim' >> /etc/profile
echo "sudo su -" >> /home/vagrant/.bashrc
ln -sf /usr/share/zoneinfo/Asia/Seoul /etc/localtime

echo "[TASK 2] Disable AppArmor"
systemctl stop ufw && systemctl disable ufw >/dev/null 2>&1
systemctl stop apparmor && systemctl disable apparmor >/dev/null 2>&1

echo "[TASK 3] Add Kernel setting - IP Forwarding"
sed -i 's/#net.ipv4.ip_forward=1/net.ipv4.ip_forward=1/g' /etc/sysctl.conf
sysctl -p >/dev/null 2>&1

echo "[TASK 4] Setting Dummy Interface"
modprobe dummy
ip link add loop1 type dummy
ip link set loop1 up
ip addr add 10.10.1.200/24 dev loop1

ip link add loop2 type dummy
ip link set loop2 up
ip addr add 10.10.2.200/24 dev loop2

echo "[TASK 5] Install Packages"
export DEBIAN_FRONTEND=noninteractive
apt update -qq >/dev/null 2>&1
apt-get install net-tools jq yq tree ngrep tcpdump arping termshark -y -qq >/dev/null 2>&1

echo "[TASK 6] Install Apache"
apt install apache2 -y >/dev/null 2>&1
echo -e "<h1>Web Server : $(hostname)</h1>" > /var/www/html/index.html

echo "[TASK 7] Configure FRR"
apt install frr -y >/dev/null 2>&1
sed -i "s/^bgpd=no/bgpd=yes/g" /etc/frr/daemons

NODEIP=$(ip -4 addr show eth1 | grep -oP '(?<=inet\s)\d+(\.\d+){3}')
cat << EOF >> /etc/frr/frr.conf
!
router bgp 65000
  bgp router-id $NODEIP
  bgp graceful-restart
  no bgp ebgp-requires-policy
  bgp bestpath as-path multipath-relax
  maximum-paths 4
  network 10.10.1.0/24
EOF

systemctl daemon-reexec >/dev/null 2>&1
systemctl restart frr >/dev/null 2>&1
systemctl enable frr >/dev/null 2>&1

echo ">>>> Initial Config End <<<<"

Deploying the lab environment

$ vagrant up

# => k8s-w0: >>>> Initial Config End <<<<

# ==> k8s-w0: Running provisioner: shell...

# k8s-w0: Running: /var/folders/7k/qy6rsdds57z3tmyn9_7hhd8r0000gn/T/vagrant-shell20250815-16129-ssrxpm.sh

# k8s-w0: >>>> K8S Node config Start <<<<

# k8s-w0: [TASK 1] K8S Controlplane Join

# k8s-w0: >>>> K8S Node config End <<<<

# ==> k8s-w0: Running provisioner: shell...

# k8s-w0: Running: /var/folders/7k/qy6rsdds57z3tmyn9_7hhd8r0000gn/T/vagrant-shell20250815-16129-iotl22.sh

# k8s-w0: >>>> Route Add Config Start <<<<

# k8s-w0: >>>> Route Add Config End <<<<

Checking basic information

# Check Cilium status: bgp-control-plane is already enabled.

$ kubectl get cm -n kube-system cilium-config -o json | jq

$ cilium status

$ cilium config view | grep -i bgp

# => bgp-router-id-allocation-ip-pool

# bgp-router-id-allocation-mode default

# bgp-secrets-namespace kube-system

# enable-bgp-control-plane true

# enable-bgp-control-plane-status-report true

#

$ kubectl exec -n kube-system -c cilium-agent -it ds/cilium -- cilium-dbg status --verbose

$ kubectl exec -n kube-system -c cilium-agent -it ds/cilium -- cilium-dbg metrics list

# monitor

$ kubectl exec -n kube-system -c cilium-agent -it ds/cilium -- cilium-dbg monitor

$ kubectl exec -n kube-system -c cilium-agent -it ds/cilium -- cilium-dbg monitor -v

$ kubectl exec -n kube-system -c cilium-agent -it ds/cilium -- cilium-dbg monitor -v -v

...

Checking network information: autoDirectNodeRoutes=false

# Check the router's network interfaces

$ sshpass -p 'vagrant' ssh vagrant@router ip -br -c -4 addr

# Check the k8s nodes' network interfaces

$ ip -c -4 addr show dev eth1

# => 3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

# altname enp0s9

# inet <span style="color: green;">192.168.10.100/24</span> brd 192.168.10.255 scope global eth1

# valid_lft forever preferred_lft forever

$ for i in w1 w0 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh vagrant@k8s-$i ip -c -4 addr show dev eth1; echo; done

# => 3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

# altname enp0s9

# inet <span style="color: green;">192.168.10.101/24</span> brd 192.168.10.255 scope global eth1

# valid_lft forever preferred_lft forever

# >> node : k8s-w0 <<

# 3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

# altname enp0s9

# inet <span style="color: green;">192.168.20.100/24</span> brd 192.168.20.255 scope global eth1

# valid_lft forever preferred_lft forever

# Check routing information

$ sshpass -p 'vagrant' ssh vagrant@router ip -c route

# => ...

# 192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.200

# 192.168.20.0/24 dev eth2 proto kernel scope link src 192.168.20.200

$ ip -c route | grep static

# => 192.168.20.0/24 via 192.168.10.200 dev eth1 proto static

## There are no routes to the other nodes' PodCIDRs!

$ ip -c route

# => default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

# 10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

# 10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 172.20.0.0/24 via 172.20.0.222 dev cilium_host proto kernel src 172.20.0.222

# 172.20.0.222 dev cilium_host proto kernel scope link

# 192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.100

# 192.168.20.0/24 via 192.168.10.200 dev eth1 proto static

$ for i in w1 w0 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh vagrant@k8s-$i ip -c route; echo; done

# => >> node : k8s-w1 <<

# default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

# 10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

# 10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 172.20.1.0/24 via 172.20.1.44 dev cilium_host proto kernel src 172.20.1.44

# 172.20.1.44 dev cilium_host proto kernel scope link

# 192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.101

# 192.168.20.0/24 via 192.168.10.200 dev eth1 proto static

#

# >> node : k8s-w0 <<

# default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

# 10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

# 10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 172.20.2.0/24 via 172.20.2.246 dev cilium_host proto kernel src 172.20.2.246

# 172.20.2.246 dev cilium_host proto kernel scope link

# 192.168.10.0/24 via 192.168.20.200 dev eth1 proto static

# 192.168.20.0/24 dev eth1 proto kernel scope link src 192.168.20.100

# Check connectivity

$ ping -c 1 192.168.20.100 # k8s-w0 eth1

# => PING 192.168.20.100 (192.168.20.100) 56(84) bytes of data.

# 64 bytes from 192.168.20.100: icmp_seq=1 ttl=63 time=2.46 ms

#

# --- 192.168.20.100 ping statistics ---

# 1 packets transmitted, 1 received, 0% packet loss, time 0ms

# rtt min/avg/max/mdev = 2.461/2.461/2.461/0.000 ms

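- At this point node-to-node communication works, but pods on different nodes cannot reach each other.

- With autoDirectNodeRoutes=false, Cilium does not automatically install PodCIDR routes between nodes on the same L2 network, so the nodes have no routing entries for remote PodCIDRs.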
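The missing routes can also be checked mechanically. The sketch below filters `ip route` text for PodCIDR routes that do not point at the local `cilium_host` device. The function name `remote_podcidr_routes` is hypothetical, the sample routes are copied from the k8s-ctr output above, and the simple string-prefix match only suits a /16 pool such as 172.20.0.0/16.

```shell
#!/usr/bin/env bash
# Print routes whose destination starts with the given pool prefix and that
# are NOT bound to cilium_host (i.e. routes towards other nodes' PodCIDRs).
remote_podcidr_routes() {
  # $1: dotted prefix of the cluster pool, e.g. "172.20." for 172.20.0.0/16
  awk -v p="$1" 'index($1, p) == 1 && $0 !~ /dev cilium_host/ { print }'
}

# Sample taken from the k8s-ctr routing table shown above.
routes='default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100
172.20.0.0/24 via 172.20.0.222 dev cilium_host proto kernel src 172.20.0.222
172.20.0.222 dev cilium_host proto kernel scope link
192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.100
192.168.20.0/24 via 192.168.10.200 dev eth1 proto static'

if [ -z "$(printf '%s\n' "$routes" | remote_podcidr_routes 172.20.)" ]; then
  echo "no routes to remote PodCIDRs"
fi
```

On a live node the same filter can be fed directly, for example `ip route | remote_podcidr_routes 172.20.`.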

Deploying a sample application and observing the connectivity problem

- Deploy the sample application

# Deploy the sample application

$ cat << EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: webpod
spec:
  replicas: 3
  selector:
    matchLabels:
      app: webpod
  template:
    metadata:
      labels:
        app: webpod
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                # select this Deployment's own pods so replicas land on different nodes
                - webpod
            topologyKey: "kubernetes.io/hostname"
      containers:
      - name: webpod
        image: traefik/whoami
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: webpod
  labels:
    app: webpod
spec:
  selector:
    app: webpod
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  type: ClusterIP
EOF

# => deployment.apps/webpod created

# service/webpod created

# Deploy curl-pod on the k8s-ctr node

$ cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: curl-pod
  labels:
    app: curl
spec:
  nodeName: k8s-ctr
  containers:
  - name: curl
    image: nicolaka/netshoot
    command: ["tail"]
    args: ["-f", "/dev/null"]
  terminationGracePeriodSeconds: 0
EOF

# => pod/curl-pod created

- Observe the problem: only pods on the same node can communicate.

# Check the deployment

$ kubectl get deploy,svc,ep webpod -owide

# => NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

# deployment.apps/webpod 3/3 3 3 102s webpod traefik/whoami app=webpod

#

# NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

# service/webpod ClusterIP 10.96.28.4 <none> 80/TCP 102s app=webpod

#

# NAME ENDPOINTS AGE

# endpoints/webpod 172.20.0.72:80,172.20.1.7:80,172.20.2.190:80 102s

$ kubectl get endpointslices -l app=webpod

# => NAME ADDRESSTYPE PORTS ENDPOINTS AGE

# webpod-4pxps IPv4 80 172.20.0.72,172.20.1.7,172.20.2.190 2m23s

$ kubectl get ciliumendpoints # check the pod IPs

# => NAME SECURITY IDENTITY ENDPOINT STATE IPV4 IPV6

# curl-pod 47416 ready 172.20.0.187

# <span style="color: green;">webpod-697b545f57-j6b7t</span> 9975 ready <span style="color: green;">172.20.0.72</span>

# webpod-697b545f57-n7fk7 9975 ready 172.20.2.190

# webpod-697b545f57-rl4d2 9975 ready 172.20.1.7

# Observe the problem: only pods on the same node can talk to each other!

$ kubectl exec -it curl-pod -- curl -s --connect-timeout 1 webpod | grep Hostname

# => Hostname: webpod-697b545f57-j6b7t

# <span style="color: green;">👉 curl-pod runs on k8s-ctr, so it can reach the webpod pod on the same node.</span>

$ kubectl exec -it curl-pod -- curl -s --connect-timeout 1 webpod | grep Hostname

# <span style="color: green;">👉 On the next run the Kubernetes Service load-balances to a pod on another node, and the request fails (curl exit code 28 means the operation timed out).</span>

# => command terminated with exit code 28

$ kubectl exec -it curl-pod -- sh -c 'while true; do curl -s --connect-timeout 1 webpod | grep Hostname; echo "---" ; sleep 1; done'

# => Hostname: webpod-697b545f57-j6b7t

# ---

# ---

# Hostname: webpod-697b545f57-j6b7t

# ---

# ---

# Hostname: webpod-697b545f57-j6b7t

# ...

# <span style="color: green;">👉 However many times we repeat it, only the pod on the k8s-ctr node answers.</span>

# cilium-dbg, map

$ kubectl exec -n kube-system ds/cilium -- cilium-dbg ip list

# => IP IDENTITY SOURCE

# ...

# <span style="color: green;">172.20.1.7/32</span> k8s:app=webpod custom-resource

# ...

# <span style="color: green;">172.20.2.190/32</span> k8s:app=webpod custom-resource

# ...

# 192.168.10.101/32 reserved:remote-node

# 192.168.20.100/32 reserved:remote-node

# <span style="color: green;">👉 Cilium knows the IPs of pods on other nodes, but traffic fails because there is no route to them.</span>

$ kubectl exec -n kube-system ds/cilium -- cilium-dbg endpoint list

$ kubectl exec -n kube-system ds/cilium -- cilium-dbg service list

$ kubectl exec -n kube-system ds/cilium -- cilium-dbg bpf lb list

$ kubectl exec -n kube-system ds/cilium -- cilium-dbg bpf nat list

$ kubectl exec -n kube-system ds/cilium -- cilium-dbg map list | grep -v '0 0'

$ kubectl exec -n kube-system ds/cilium -- cilium-dbg map get cilium_lb4_services_v2

$ kubectl exec -n kube-system ds/cilium -- cilium-dbg map get cilium_lb4_backends_v3

$ kubectl exec -n kube-system ds/cilium -- cilium-dbg map get cilium_lb4_reverse_nat

$ kubectl exec -n kube-system ds/cilium -- cilium-dbg map get cilium_ipcache_v2
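To quantify the result of the curl loop shown earlier, its output can be summarized per backend. This is a minimal sketch: `tally_hostnames` is a hypothetical helper that assumes the loop's format (a `Hostname:` line only on success, then `---` after every attempt). It strips carriage returns because `kubectl exec -it` allocates a TTY.

```shell
#!/usr/bin/env bash
# Count successful answers per backend and the number of timed-out attempts.
tally_hostnames() {
  tr -d '\r' | awk '
    /^Hostname:/ { ok[$2]++ }       # one line per successful request
    /^---$/      { attempts++ }     # separator printed after every attempt
    END {
      for (h in ok) { printf "%s %d\n", h, ok[h]; answered += ok[h] }
      printf "timeouts %d\n", attempts - answered
    }'
}

# Sample shaped like the loop output above: every other attempt times out.
printf 'Hostname: webpod-697b545f57-j6b7t\n---\n---\nHostname: webpod-697b545f57-j6b7t\n---\n---\n' \
  | tally_hostnames
# prints:
# webpod-697b545f57-j6b7t 2
# timeouts 2
```

Only the pod local to k8s-ctr ever appears in the tally, and every request that the Service sends to another node is counted as a timeout.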

Cilium BGP Control Plane

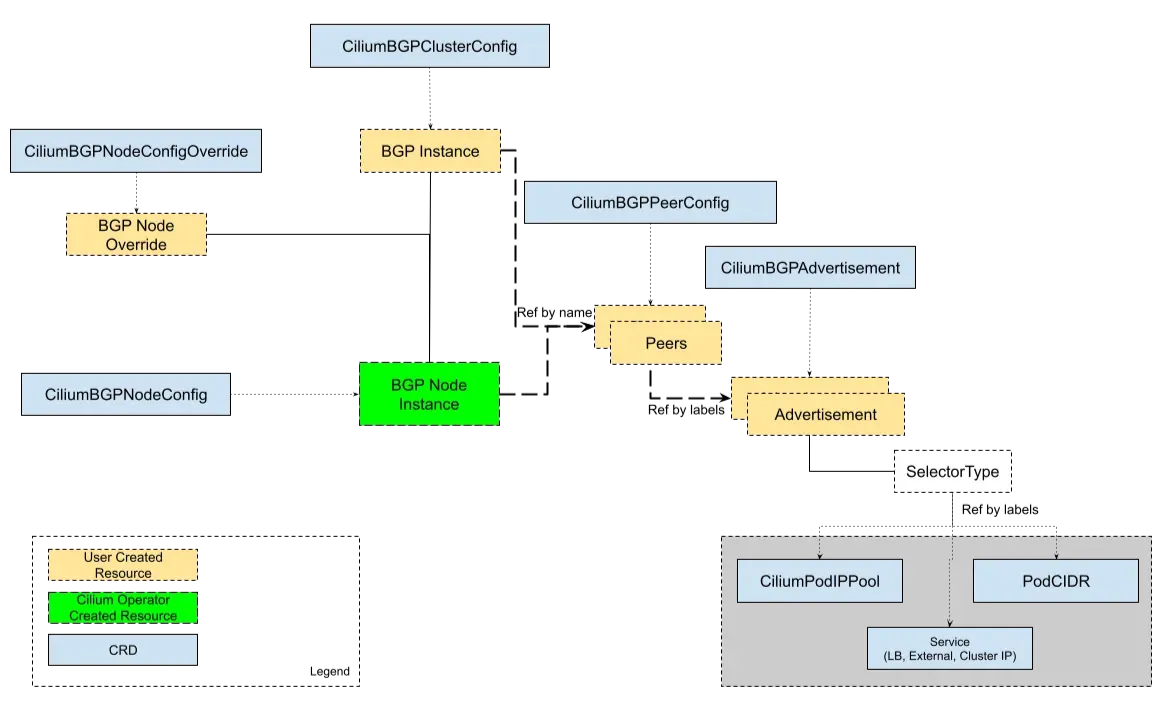

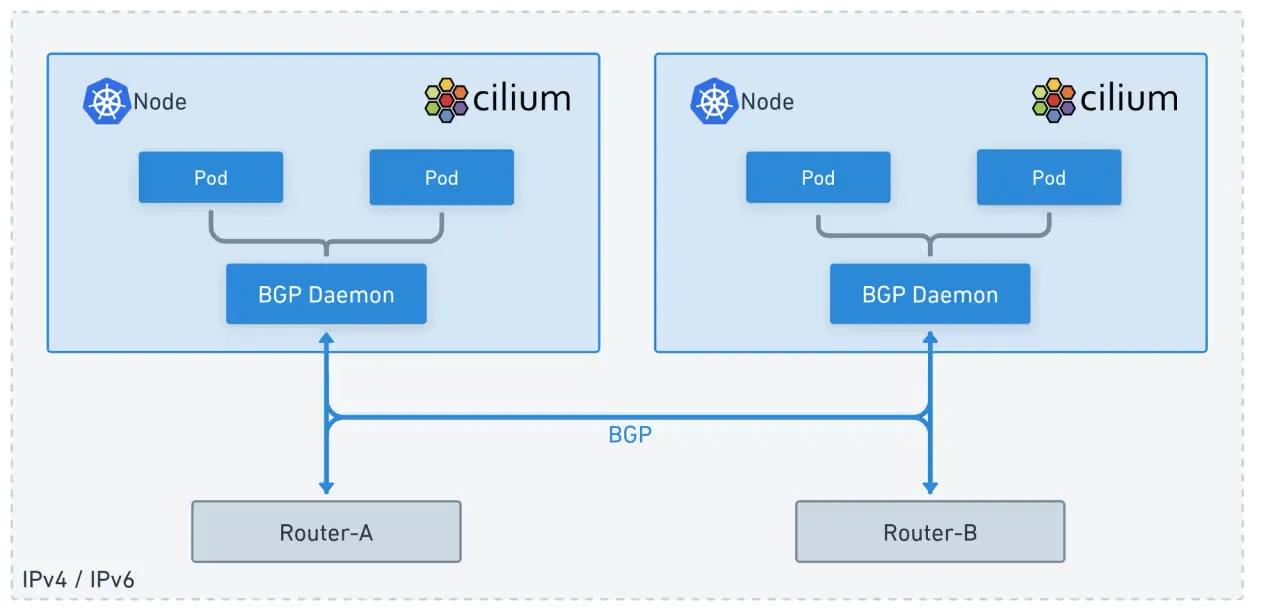

- Cilium BGP Control Plane (BGPv2): BGP configuration is managed through Cilium custom resources. Docs:

https://docs.cilium.io/en/stable/network/bgp-control-plane/bgp-control-plane-v2/

- CiliumBGPClusterConfig: defines the BGP instances and peer configurations that apply to multiple nodes.

- CiliumBGPPeerConfig: defines a common set of BGP peering settings that can be shared across multiple peers.

- CiliumBGPAdvertisement: defines the prefixes that are injected into the BGP routing table.

- CiliumBGPNodeConfigOverride: defines node-specific BGP configuration overrides for finer-grained control.
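As a rough illustration of how the first three resources reference each other, the sketch below writes a minimal manifest set to a file. Several values are assumptions for illustration, not taken from this lab: the resource names, the `advertise: bgp` and `enable-bgp` labels, and the local ASN 65001 (any ASN other than the router's 65000 would satisfy `remote-as external`). The `cilium.io/v2` API version is what I would expect on Cilium 1.18; older releases serve these kinds under `cilium.io/v2alpha1`.

```shell
#!/usr/bin/env bash
# Write a minimal BGPv2 manifest set; review it before applying.
cat > cilium-bgp-sketch.yaml <<'EOF'
apiVersion: cilium.io/v2
kind: CiliumBGPAdvertisement
metadata:
  name: bgp-advertisements        # hypothetical name
  labels:
    advertise: bgp                # hypothetical label, selected by the peer config
spec:
  advertisements:
    - advertisementType: "PodCIDR"
---
apiVersion: cilium.io/v2
kind: CiliumBGPPeerConfig
metadata:
  name: cilium-peer               # hypothetical name
spec:
  families:
    - afi: ipv4
      safi: unicast
      advertisements:
        matchLabels:
          advertise: "bgp"
---
apiVersion: cilium.io/v2
kind: CiliumBGPClusterConfig
metadata:
  name: cilium-bgp                # hypothetical name
spec:
  nodeSelector:
    matchLabels:
      enable-bgp: "true"          # hypothetical node label
  bgpInstances:
    - name: "instance-65001"
      localASN: 65001             # assumed; must differ from the router's AS 65000
      peers:
        - name: "router"
          peerASN: 65000
          peerAddress: 192.168.10.200
          peerConfigRef:
            name: "cilium-peer"
EOF
echo "wrote cilium-bgp-sketch.yaml"

# To try it on the lab cluster (assumption: nodes carry the label above):
#   kubectl label nodes --all enable-bgp=true
#   kubectl apply -f cilium-bgp-sketch.yaml
```

The cluster config selects nodes and names the peer, the peer config carries the shared session settings, and the advertisement is attached to the peer config through its label selector.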

Configuring BGP and verifying connectivity

By default, Cilium's BGP does not install externally learned routes into the kernel routing table.

- [router] Log in to the router and configure it:

# SSH from k8s-ctr to the router

$ sshpass -p 'vagrant' ssh vagrant@router

# Check the BGP-related processes.

$ ss -tnlp | grep -iE 'zebra|bgpd'

# => LISTEN 0 3 127.0.0.1:2601 0.0.0.0:* users:(("zebra",pid=4144,fd=23))

# LISTEN 0 3 127.0.0.1:2605 0.0.0.0:* users:(("bgpd",pid=4149,fd=18))

# LISTEN 0 4096 0.0.0.0:179 0.0.0.0:* users:(("bgpd",pid=4149,fd=22))

# LISTEN 0 4096 [::]:179 [::]:* users:(("bgpd",pid=4149,fd=23))

$ ps -ef |grep frr

# => root 4131 1 0 16:49 ? 00:00:03 /usr/lib/frr/watchfrr -d -F traditional zebra bgpd staticd

# frr 4144 1 0 16:49 ? 00:00:01 /usr/lib/frr/zebra -d -F traditional -A 127.0.0.1 -s 90000000

# frr 4149 1 0 16:49 ? 00:00:00 /usr/lib/frr/bgpd -d -F traditional -A 127.0.0.1

# frr 4156 1 0 16:49 ? 00:00:00 /usr/lib/frr/staticd -d -F traditional -A 127.0.0.1

# Check the FRR configuration.

$ vtysh -c 'show running' # shows the current running configuration

# => Building configuration...

#

# Current configuration:

# !

# frr version 8.4.4

# frr defaults traditional

# hostname router

# log syslog informational

# no ipv6 forwarding

# service integrated-vtysh-config

# !

# router bgp 65000

# bgp router-id 192.168.10.200

# no bgp ebgp-requires-policy

# bgp graceful-restart

# bgp bestpath as-path multipath-relax

# !

# address-family ipv4 unicast

# network 10.10.1.0/24

# maximum-paths 4

# exit-address-family

# exit

# !

# end

$ cat /etc/frr/frr.conf

# => ...

# log syslog informational

# !

# router bgp 65000

# bgp router-id 192.168.10.200

# bgp graceful-restart

# no bgp ebgp-requires-policy

# bgp bestpath as-path multipath-relax

# maximum-paths 4

# network 10.10.1.0/24

#

$ vtysh -c 'show running'

$ vtysh -c 'show ip bgp summary'

# => % No BGP neighbors found in VRF default

$ vtysh -c 'show ip bgp'

# => BGP table version is 1, local router ID is 192.168.10.200, vrf id 0

# Default local pref 100, local AS 65000

# Status codes: s suppressed, d damped, h history, * valid, > best, = multipath,

# i internal, r RIB-failure, S Stale, R Removed

# Nexthop codes: @NNN nexthop's vrf id, < announce-nh-self

# Origin codes: i - IGP, e - EGP, ? - incomplete

# RPKI validation codes: V valid, I invalid, N Not found

#

# Network Next Hop Metric LocPrf Weight Path

# *> 10.10.1.0/24 0.0.0.0 0 32768 i

$ ip -c route

# => default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

# 10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

# 10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 10.10.1.0/24 dev loop1 proto kernel scope link src 10.10.1.200

# 10.10.2.0/24 dev loop2 proto kernel scope link src 10.10.2.200

# 192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.200

# 192.168.20.0/24 dev eth2 proto kernel scope link src 192.168.20.200

$ vtysh -c 'show ip route'

...

K>* 0.0.0.0/0 [0/100] via 10.0.2.2, eth0, src 10.0.2.15, 00:34:45

...

C>* 192.168.10.0/24 is directly connected, eth1, 00:34:45

C>* 192.168.20.0/24 is directly connected, eth2, 00:34:45

# => ...

# K>* 0.0.0.0/0 [0/100] via 10.0.2.2, eth0, src 10.0.2.15, 06:52:38

# ...

# C>* 192.168.10.0/24 is directly connected, eth1, 06:52:38

# C>* 192.168.20.0/24 is directly connected, eth2, 06:52:38

# Cilium node peering setup: option 1

# <span style="color: green;">👉 This approach edits the configuration file directly.</span>

$ cat << EOF >> /etc/frr/frr.conf

neighbor CILIUM peer-group

neighbor CILIUM remote-as external

neighbor 192.168.10.100 peer-group CILIUM

neighbor 192.168.10.101 peer-group CILIUM

neighbor 192.168.20.100 peer-group CILIUM

EOF

$ cat /etc/frr/frr.conf

# => log syslog informational

# !

# router bgp 65000

# bgp router-id 192.168.10.200

# bgp graceful-restart

# no bgp ebgp-requires-policy

# bgp bestpath as-path multipath-relax

# maximum-paths 4

# network 10.10.1.0/24

# neighbor CILIUM peer-group

# neighbor CILIUM remote-as external

# neighbor 192.168.10.100 peer-group CILIUM

# neighbor 192.168.10.101 peer-group CILIUM

# neighbor 192.168.20.100 peer-group CILIUM

$ systemctl daemon-reexec && systemctl restart frr

$ systemctl status frr --no-pager --full

# => ...

# Aug 15 23:43:00 router zebra[5215]: [VTVCM-Y2NW3] Configuration Read in Took: 00:00:00

# Aug 15 23:43:00 router staticd[5227]: [VTVCM-Y2NW3] Configuration Read in Took: 00:00:00

# Aug 15 23:43:00 router bgpd[5220]: [VTVCM-Y2NW3] Configuration Read in Took: 00:00:00

# Aug 15 23:43:00 router watchfrr[5201]: [QDG3Y-BY5TN] zebra state -> up : connect succeeded

# Aug 15 23:43:00 router watchfrr[5201]: [QDG3Y-BY5TN] bgpd state -> up : connect succeeded

# Aug 15 23:43:00 router watchfrr[5201]: [QDG3Y-BY5TN] staticd state -> up : connect succeeded

# Aug 15 23:43:00 router watchfrr[5201]: [KWE5Q-QNGFC] all daemons up, doing startup-complete notify

# Aug 15 23:43:00 router frrinit.sh[5190]: * Started watchfrr

# Aug 15 23:43:00 router systemd[1]: Started frr.service - FRRouting.

# Leave this monitor running!

$ journalctl -u frr -f

# Cilium node peering setup: option 2

# <span style="color: green;">👉 This approach applies the settings through the vtysh CLI that frr provides.</span>

$ vtysh

---------------------------

?

show ?

show running

show ip route

# Enter config mode

conf

?

## Enter the BGP 65000 configuration

router bgp 65000

?

neighbor CILIUM peer-group

neighbor CILIUM remote-as external

neighbor 192.168.10.100 peer-group CILIUM

neighbor 192.168.10.101 peer-group CILIUM

neighbor 192.168.20.100 peer-group CILIUM

end

# Write configuration to the file (same as write file)

write memory

# <span style="color: green;">👉 This writes the settings into the frr.conf file.</span>

exit

---------------------------

$ cat /etc/frr/frr.conf
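
The interactive vtysh session above can also be scripted. The sketch below is one possible way to do it, assuming vtysh accepts several `-c` flags in one invocation and keeps the configuration context between them. The function names `frr_peer_group_lines` and `apply_frr_peer_group` are hypothetical helpers, and the ASN and neighbor addresses are the lab values.

```shell
# Sketch only: helper names are made up for this post; values come from the lab.

# Print the FRR commands for the CILIUM peer group, one per line.
# Usage: frr_peer_group_lines <local-asn> <neighbor-ip>...
frr_peer_group_lines() {
  asn="$1"
  shift
  echo "configure terminal"
  echo "router bgp ${asn}"
  echo "neighbor CILIUM peer-group"
  echo "neighbor CILIUM remote-as external"
  for ip in "$@"; do
    echo "neighbor ${ip} peer-group CILIUM"
  done
  echo "end"
  echo "write memory"
}

# Turn every generated line into a "-c <line>" pair and hand them to vtysh.
# Run on the router as root; this changes the running and saved config.
apply_frr_peer_group() {
  lines=$(frr_peer_group_lines "$@")
  set --
  while IFS= read -r line; do
    set -- "$@" -c "$line"
  done <<EOF
$lines
EOF
  vtysh "$@"
}
```

Calling `frr_peer_group_lines 65000 192.168.10.100 192.168.10.101 192.168.20.100` on its own only prints the commands, which works as a dry run before `apply_frr_peer_group` is used with the same arguments.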

- Configure BGP in Cilium

# New terminal 1 (router): leave this monitor running!

$ journalctl -u frr -f

# New terminal 2 (k8s-ctr): repeated calls

$ kubectl exec -it curl-pod -- sh -c 'while true; do curl -s --connect-timeout 1 webpod | grep Hostname; echo "---" ; sleep 1; done'

# Label the nodes that will run BGP

$ kubectl label nodes k8s-ctr k8s-w0 k8s-w1 enable-bgp=true

# => node/k8s-w0 labeled

# node/k8s-w1 labeled

$ kubectl get node -l enable-bgp=true

# => NAME STATUS ROLES AGE VERSION

# k8s-ctr Ready control-plane 7h35m v1.33.2

# k8s-w0 Ready <none> 7h30m v1.33.2

# k8s-w1 Ready <none> 7h33m v1.33.2

# Config Cilium BGP

$ cat << EOF | kubectl apply -f -
apiVersion: cilium.io/v2
kind: CiliumBGPAdvertisement
metadata:
  name: bgp-advertisements
  labels:
    advertise: bgp
spec:
  advertisements:
    - advertisementType: "PodCIDR"
---
apiVersion: cilium.io/v2
kind: CiliumBGPPeerConfig
metadata:
  name: cilium-peer
spec:
  timers:
    holdTimeSeconds: 9
    keepAliveTimeSeconds: 3
  ebgpMultihop: 2
  gracefulRestart:
    enabled: true
    restartTimeSeconds: 15
  families:
    - afi: ipv4
      safi: unicast
      advertisements:
        matchLabels:
          advertise: "bgp"
---
apiVersion: cilium.io/v2
kind: CiliumBGPClusterConfig
metadata:
  name: cilium-bgp
spec:
  nodeSelector:
    matchLabels:
      "enable-bgp": "true"
  bgpInstances:
  - name: "instance-65001"
    localASN: 65001
    peers:
    - name: "tor-switch"
      peerASN: 65000
      peerAddress: 192.168.10.200 # router ip address
      peerConfigRef:
        name: "cilium-peer"
EOF

# => ciliumbgpadvertisement.cilium.io/bgp-advertisements created

# ciliumbgppeerconfig.cilium.io/cilium-peer created

# ciliumbgpclusterconfig.cilium.io/cilium-bgp created

- Verify connectivity

# Check on [k8s-ctr]

# Check the BGP connection

$ ss -tnlp | grep 179

# => (none)

# <span style="color: green;">👉 Nothing is listening on TCP port 179 on the k8s-ctr node, so no BGP daemon accepts inbound sessions there.</span>

$ ss -tnp | grep 179

# => ESTAB 0 0 192.168.10.100:46459 192.168.10.200:179 users:(("cilium-agent",pid=5674,fd=193))

# <span style="color: green;">👉 cilium-agent has opened an outbound connection to the router for the BGP session.</span>

# Check the Cilium BGP information

$ cilium bgp peers

# => Node Local AS Peer AS Peer Address Session State Uptime Family Received Advertised

# k8s-ctr 65001 65000 192.168.10.200 established 2m20s ipv4/unicast 4 2

# k8s-w0 65001 65000 192.168.10.200 established 2m20s ipv4/unicast 4 2

# k8s-w1 65001 65000 192.168.10.200 established 2m19s ipv4/unicast 4 2

$ cilium bgp routes available ipv4 unicast

# => Node VRouter Prefix NextHop Age Attrs

# k8s-ctr 65001 172.20.0.0/24 0.0.0.0 2m30s [{Origin: i} {Nexthop: 0.0.0.0}]

# k8s-w0 65001 172.20.2.0/24 0.0.0.0 2m30s [{Origin: i} {Nexthop: 0.0.0.0}]

# k8s-w1 65001 172.20.1.0/24 0.0.0.0 2m30s [{Origin: i} {Nexthop: 0.0.0.0}]

$ kubectl get ciliumbgpadvertisements,ciliumbgppeerconfigs,ciliumbgpclusterconfigs

# => NAME AGE

# ciliumbgpadvertisement.cilium.io/bgp-advertisements 2m40s

#

# NAME AGE

# ciliumbgppeerconfig.cilium.io/cilium-peer 2m40s

#

# NAME AGE

# ciliumbgpclusterconfig.cilium.io/cilium-bgp 2m40s

$ kubectl get ciliumbgpnodeconfigs -o yaml | yq

# => ...

# "peeringState": "established",

# "routeCount": [

# {

# "advertised": 2,

# "afi": "ipv4",

# "received": 1,

# "safi": "unicast"

# }

# ],

# ...

# New terminal 1 (router): leave this monitor running!

$ journalctl -u frr -f

# => Aug 16 00:21:44 router bgpd[5422]: [M59KS-A3ZXZ] bgp_update_receive: rcvd End-of-RIB for IPv4 Unicast from 192.168.10.101 in vrf default

# Aug 16 00:21:44 router bgpd[5422]: [M59KS-A3ZXZ] bgp_update_receive: rcvd End-of-RIB for IPv4 Unicast from 192.168.20.100 in vrf default

# Aug 16 00:21:44 router bgpd[5422]: [M59KS-A3ZXZ] bgp_update_receive: rcvd End-of-RIB for IPv4 Unicast from 192.168.10.100 in vrf default

$ ip -c route | grep bgp

# => 172.20.0.0/24 nhid 32 via 192.168.10.100 dev eth1 proto bgp metric 20

# 172.20.1.0/24 nhid 30 via 192.168.10.101 dev eth1 proto bgp metric 20

# 172.20.2.0/24 nhid 31 via 192.168.20.100 dev eth2 proto bgp metric 20

$ vtysh -c 'show ip bgp summary'

# => ...

# Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt Desc

# 192.168.10.100 4 65001 88 91 0 0 0 00:04:12 1 4 N/A

# 192.168.10.101 4 65001 88 91 0 0 0 00:04:12 1 4 N/A

# 192.168.20.100 4 65001 88 91 0 0 0 00:04:12 1 4 N/A

$ vtysh -c 'show ip bgp'

# => ...

# Network Next Hop Metric LocPrf Weight Path

# *> 10.10.1.0/24 0.0.0.0 0 32768 i

# *> 172.20.0.0/24 192.168.10.100 0 65001 i

# *> 172.20.1.0/24 192.168.10.101 0 65001 i

# *> 172.20.2.0/24 192.168.20.100 0 65001 i

# New terminal 2 (k8s-ctr): do the repeated calls succeed now?

$ kubectl exec -it curl-pod -- sh -c 'while true; do curl -s --connect-timeout 1 webpod | grep Hostname; echo "---" ; sleep 1; done'

# => Hostname: webpod-697b545f57-j6b7t

# ---

# ---

# ---

# Hostname: webpod-697b545f57-j6b7t

# ...

# <span style="color: green;">👉 Only the pod on the k8s-ctr node still responds; pods on the other nodes remain unreachable.</span>

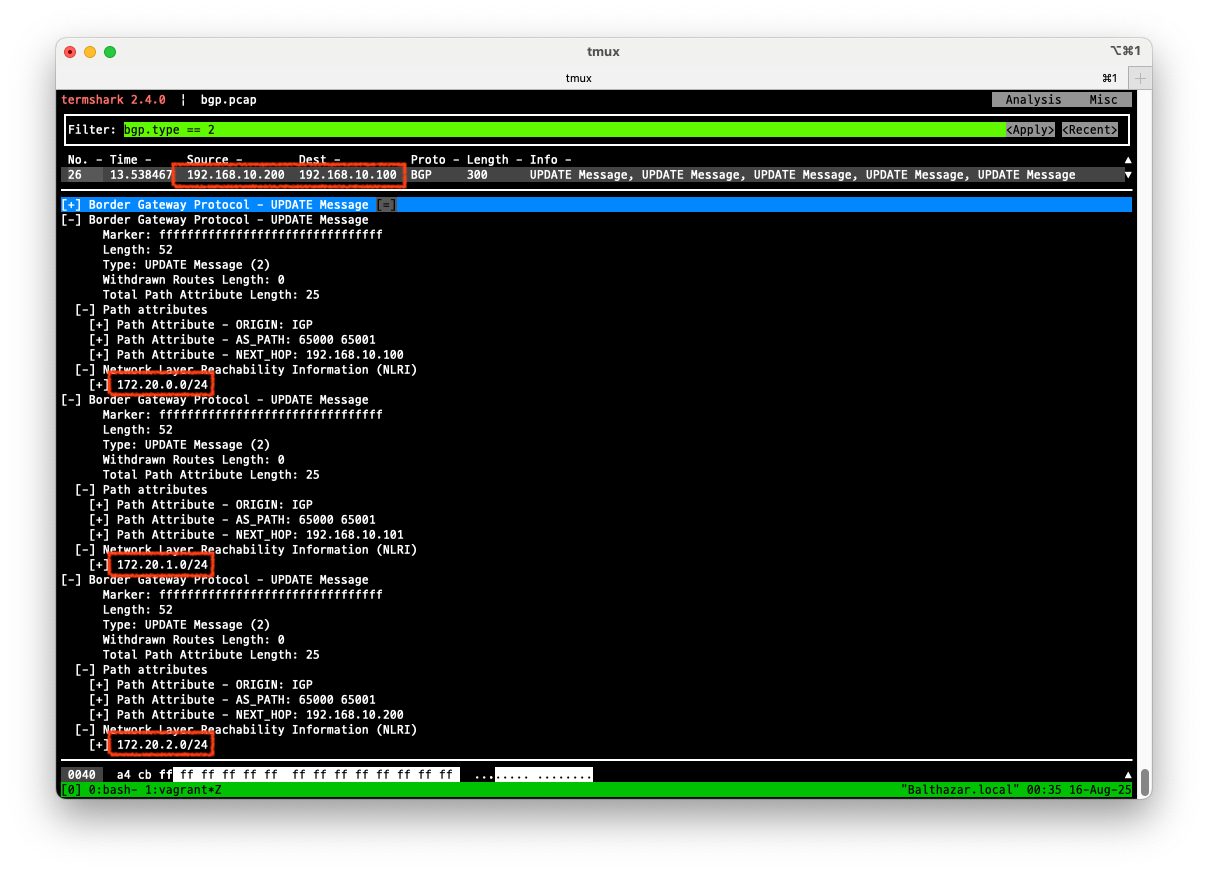

- Verify that BGP information is delivered

# Start tcpdump on k8s-ctr

$ tcpdump -i eth1 tcp port 179 -w /tmp/bgp.pcap

# router: restart frr

$ systemctl restart frr && journalctl -u frr -f

# => ...

# Aug 16 00:28:35 router watchfrr[5625]: [KWE5Q-QNGFC] all daemons up, doing startup-complete notify

# Aug 16 00:28:41 router bgpd[5643]: [M59KS-A3ZXZ] bgp_update_receive: rcvd End-of-RIB for IPv4 Unicast from 192.168.20.100 in vrf default

# Aug 16 00:28:41 router bgpd[5643]: [M59KS-A3ZXZ] bgp_update_receive: rcvd End-of-RIB for IPv4 Unicast from 192.168.10.100 in vrf default

# Aug 16 00:28:41 router bgpd[5643]: [M59KS-A3ZXZ] bgp_update_receive: rcvd End-of-RIB for IPv4 Unicast from 192.168.10.101 in vrf default

# Run termshark and enter `bgp.type == 2` in the filter to inspect the BGP UPDATE packets.

$ termshark -r /tmp/bgp.pcap

# This confirms that k8s-ctr does receive BGP UPDATE messages from the router.

$ cilium bgp routes

# => Node VRouter Prefix NextHop Age Attrs

# k8s-ctr 65001 172.20.0.0/24 0.0.0.0 15m44s [{Origin: i} {Nexthop: 0.0.0.0}]

# k8s-w0 65001 172.20.2.0/24 0.0.0.0 15m44s [{Origin: i} {Nexthop: 0.0.0.0}]

# k8s-w1 65001 172.20.1.0/24 0.0.0.0 15m44s [{Origin: i} {Nexthop: 0.0.0.0}]

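# <span style="color: green;">👉 Cilium's BGP table lists only the PodCIDR that each node originates (NextHop 0.0.0.0); prefixes learned from the router are not listed here.</span>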

$ ip -c route

# => default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

# 10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

# 10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 172.20.0.0/24 via 172.20.0.222 dev cilium_host proto kernel src 172.20.0.222

# 172.20.0.222 dev cilium_host proto kernel scope link

# 192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.100

# 192.168.20.0/24 via 192.168.10.200 dev eth1 proto static

# <span style="color: green;">👉 No BGP-learned routes were added to the kernel routing table on the k8s-ctr node.</span>

Checking BGP UPDATE packets in termshark

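- With BGP configured in Cilium, it looks as though routing should now work.

- However, the learned routes were not added to the kernel routing table, for the following reasons.

- Cilium forwards pod traffic in its eBPF datapath and uses the BGP Control Plane to advertise routes, so it does not install BGP-learned routes into the kernel routing table.

- The GoBGP library that the Cilium BGP speaker is built on is used in the `disable-fib` state, so learned routes are not injected into the Linux kernel FIB.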
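
The difference between the router and the Cilium nodes can be checked by counting the kernel routes tagged `proto bgp`. The sketch below assumes plain `ip route` output on stdin (no `-c` color codes); `count_bgp_routes` is a hypothetical helper name.

```shell
# Sketch only: count kernel routes that were installed by a BGP daemon.
# Reads `ip route` output on stdin and prints the number of "proto bgp" lines.
count_bgp_routes() {
  # grep -c prints 0 but exits non-zero when nothing matches; keep exit status 0.
  grep -c 'proto bgp' || true
}
```

With the outputs shown in this post, `ip route | count_bgp_routes` would print 3 on the router (FRR installs what it learns) and 0 on k8s-ctr (Cilium does not).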

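Connectivity after fixing the problem

- The lab nodes use two NICs (eth0 and eth1), and the default gateway points out of eth0.

- eth1 carries the Kubernetes traffic, so all traffic destined for the pod CIDR range must be routed explicitly through eth1.

- The upstream network device (the router) receives that traffic, and because it has learned every PodCIDR from the Cilium nodes over BGP, it can forward the packets to their destination.

- In short, when you use BGP with Cilium on nodes that have two or more NICs, you must configure and manage these routes on each node yourself.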
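
As a small illustration of that per-node route, the sketch below derives the gateway from the node IP and prints the command a node would need. It assumes the lab convention that the router holds `.200` in each node's /24 and that the pod supernet is 172.20.0.0/16; `lab_gateway_for` and `pod_route_cmd` are hypothetical helper names. It uses `ip route replace` rather than `ip route add` so that re-running it does not fail when the route already exists.

```shell
# Sketch only: lab-specific assumptions (router = .200, pod supernet = 172.20.0.0/16).

# Print the router address for the /24 that the given node IP belongs to.
lab_gateway_for() {
  node_ip="$1"
  echo "${node_ip%.*}.200"
}

# Print (do not run) the route command for the pod supernet on that node.
pod_route_cmd() {
  echo "ip route replace 172.20.0.0/16 via $(lab_gateway_for "$1")"
}
```

For example, `pod_route_cmd 192.168.20.100` prints the command for k8s-w0. Routes added this way live only in the running kernel, so they would need to be made persistent separately to survive a reboot.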

# Route the entire pod CIDR range through eth1

$ ip route add 172.20.0.0/16 via 192.168.10.200

$ sshpass -p 'vagrant' ssh vagrant@k8s-w1 sudo ip route add 172.20.0.0/16 via 192.168.10.200

$ sshpass -p 'vagrant' ssh vagrant@k8s-w0 sudo ip route add 172.20.0.0/16 via 192.168.20.200

# Check once more the routes the router learned via BGP:

$ sshpass -p 'vagrant' ssh vagrant@router ip -c route | grep bgp

# => 172.20.0.0/24 nhid 63 via 192.168.10.100 dev eth1 proto bgp metric 20

# 172.20.1.0/24 nhid 64 via 192.168.10.101 dev eth1 proto bgp metric 20

# 172.20.2.0/24 nhid 60 via 192.168.20.100 dev eth2 proto bgp metric 20

# Connectivity now works!

$ kubectl exec -it curl-pod -- sh -c 'while true; do curl -s --connect-timeout 1 webpod | grep Hostname; echo "---" ; sleep 1; done'

# => Hostname: webpod-697b545f57-rl4d2

# ---

# Hostname: webpod-697b545f57-n7fk7

# ---

# Hostname: webpod-697b545f57-j6b7t

# ...

# <span style="color: green;">👉 Pods on every node now respond.</span>

# Start the hubble relay port-forward

$ cilium hubble port-forward&

$ hubble status

# => hubble status

# Healthcheck (via localhost:4245): Ok

# Current/Max Flows: 12,285/12,285 (100.00%)

# Flows/s: 65.38

# Connected Nodes: 3/3

# Monitor the flow log

$ hubble observe -f --protocol tcp --pod curl-pod

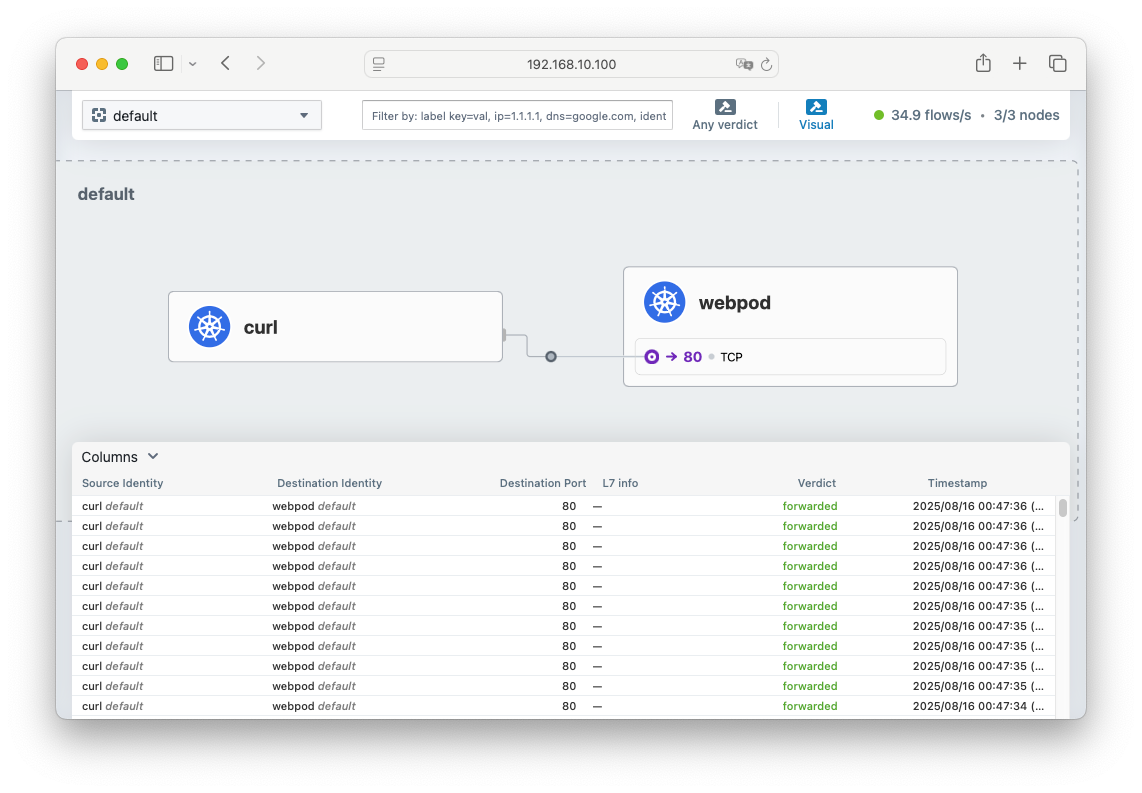

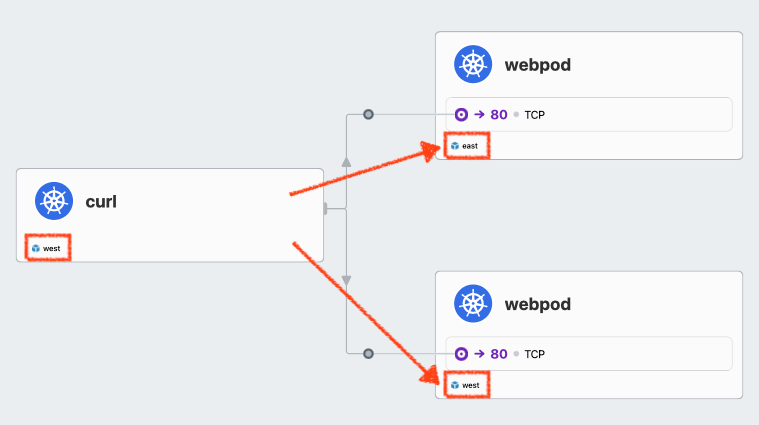

Traffic flow from curl-pod to webpod

Node maintenance (k8s-w0) lab

- In this lab we withdraw a node's routes so that it can be taken out for maintenance, then restore them. - Docs

Preparing the node for maintenance

# Monitoring: repeated calls

$ kubectl exec -it curl-pod -- sh -c 'while true; do curl -s --connect-timeout 1 webpod | grep Hostname; echo "---" ; sleep 1; done'

# (Reference) BGP Control Plane logs

$ kubectl logs -n kube-system -l name=cilium-operator -f | grep "subsys=bgp-cp-operator"

$ kubectl logs -n kube-system -l k8s-app=cilium -f | grep "subsys=bgp-control-plane"

# Prepare for maintenance

$ kubectl drain k8s-w0 --ignore-daemonsets

# => evicting pod default/webpod-697b545f57-n7fk7

# pod/webpod-697b545f57-n7fk7 evicted

# node/k8s-w0 drained

$ kubectl label nodes k8s-w0 enable-bgp=false --overwrite

# => node/k8s-w0 labeled

# Verify

$ kubectl get node

# => NAME STATUS ROLES AGE VERSION

# k8s-ctr Ready control-plane 8h v1.33.2

# k8s-w0 Ready<span style="color: green;">,SchedulingDisabled</span> <none> 8h v1.33.2

# k8s-w1 Ready <none> 8h v1.33.2

# <span style="color: green;">👉 The drain cordoned k8s-w0, so no new pods are scheduled on it.</span>

$ kubectl get ciliumbgpnodeconfigs

# => NAME AGE

# k8s-ctr 50m

# k8s-w1 50m

# <span style="color: green;">👉 Changing the label to enable-bgp=false removed k8s-w0 from the Cilium BGP node configs.</span>

$ cilium bgp routes

# => Node VRouter Prefix NextHop Age Attrs

# k8s-ctr 65001 172.20.0.0/24 0.0.0.0 52m12s [{Origin: i} {Nexthop: 0.0.0.0}]

# k8s-w1 65001 172.20.1.0/24 0.0.0.0 52m12s [{Origin: i} {Nexthop: 0.0.0.0}]

$ cilium bgp peers

# => Node Local AS Peer AS Peer Address Session State Uptime Family Received Advertised

# k8s-ctr 65001 65000 192.168.10.200 established 45m21s ipv4/unicast 3 2

# k8s-w1 65001 65000 192.168.10.200 established 45m21s ipv4/unicast 3 2

$ sshpass -p 'vagrant' ssh vagrant@router "sudo vtysh -c 'show ip bgp'"

# => Network Next Hop Metric LocPrf Weight Path

# *> 10.10.1.0/24 0.0.0.0 0 32768 i

# *> 172.20.0.0/24 192.168.10.100 0 65001 i

# *> 172.20.1.0/24 192.168.10.101 0 65001 i

$ sshpass -p 'vagrant' ssh vagrant@router "sudo vtysh -c 'show ip route bgp'"

# => B>* 172.20.0.0/24 [20/0] via 192.168.10.100, eth1, weight 1, 00:46:08

# B>* 172.20.1.0/24 [20/0] via 192.168.10.101, eth1, weight 1, 00:46:08

$ sshpass -p 'vagrant' ssh vagrant@router ip -c route | grep bgp

# => 172.20.0.0/24 nhid 63 via 192.168.10.100 dev eth1 proto bgp metric 20

# 172.20.1.0/24 nhid 64 via 192.168.10.101 dev eth1 proto bgp metric 20

$ sshpass -p 'vagrant' ssh vagrant@router "sudo vtysh -c 'show ip bgp summary'"

# => Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt Desc

# 192.168.10.100 4 65001 915 919 0 0 0 00:45:35 1 3 N/A

# 192.168.10.101 4 65001 915 919 0 0 0 00:45:35 1 3 N/A

# <span style="color: green;">192.168.20.100</span> 4 65001 856 857 0 0 0 00:03:03 Active 0 N/A

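# <span style="color: green;">👉 Apart from the accumulated message counters, k8s-w0 is gone from the router's BGP information: its session is in the Active state and no prefixes are received from it.</span>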
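
Spotting a neighbor like 192.168.20.100 by eye gets harder with more peers. The sketch below filters the summary table for neighbors whose State/PfxRcd column is not a number, which is how the output above marks a session that is not established. It assumes the column layout shown in this post (State/PfxRcd is the tenth field) and raw vtysh output on stdin; `bgp_down_neighbors` is a hypothetical helper name.

```shell
# Sketch only: list neighbors whose session is not established.
# Reads `vtysh -c 'show ip bgp summary'` output on stdin.
# Prints "<neighbor> <state>" for rows where column 10 is a state name
# (Active, Idle, Connect, ...) instead of a received-prefix count.
bgp_down_neighbors() {
  awk '$1 ~ /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+$/ && $10 !~ /^[0-9]+$/ { print $1, $10 }'
}
```

On the router this could be used as `vtysh -c 'show ip bgp summary' | bgp_down_neighbors`; empty output means every listed neighbor is reporting a prefix count.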

Restoring routes after maintenance

# Restore the settings

$ kubectl label nodes k8s-w0 enable-bgp=true --overwrite

# => node/k8s-w0 labeled

$ kubectl uncordon k8s-w0

# => node/k8s-w0 uncordoned

# <span style="color: green;">👉 Make the node schedulable again.</span>

# Verify

$ kubectl get node

# => NAME STATUS ROLES AGE VERSION

# k8s-ctr Ready control-plane 8h v1.33.2

# k8s-w0 <span style="color: green;">Ready</span> <none> 8h v1.33.2

# k8s-w1 Ready <none> 8h v1.33.2

$ kubectl get ciliumbgpnodeconfigs

# => NAME AGE

# k8s-ctr 57m

# <span style="color: green;">k8s-w0 65s</span>

# k8s-w1 57m

$ cilium bgp routes

# => Node VRouter Prefix NextHop Age Attrs

# k8s-ctr 65001 172.20.0.0/24 0.0.0.0 57m48s [{Origin: i} {Nexthop: 0.0.0.0}]

# <span style="color: green;">k8s-w0 65001 172.20.2.0/24 0.0.0.0 1m25s [{Origin: i} {Nexthop: 0.0.0.0}]</span>

# k8s-w1 65001 172.20.1.0/24 0.0.0.0 57m48s [{Origin: i} {Nexthop: 0.0.0.0}]

$ cilium bgp peers

# => Node Local AS Peer AS Peer Address Session State Uptime Family Received Advertised

# k8s-ctr 65001 65000 192.168.10.200 established 51m18s ipv4/unicast 4 2

# <span style="color: green;">k8s-w0 65001 65000 192.168.10.200 established 1m52s ipv4/unicast 4 2</span>

# k8s-w1 65001 65000 192.168.10.200 established 51m18s ipv4/unicast 4 2

$ sshpass -p 'vagrant' ssh vagrant@router "sudo vtysh -c 'show ip bgp'"

$ sshpass -p 'vagrant' ssh vagrant@router "sudo vtysh -c 'show ip route bgp'"

$ sshpass -p 'vagrant' ssh vagrant@router ip -c route | grep bgp

$ sshpass -p 'vagrant' ssh vagrant@router "sudo vtysh -c 'show ip bgp summary'"

# Redistribute the pods across the nodes

$ kubectl get pod -owide

# => NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

# curl-pod 1/1 Running 0 138m 172.20.0.187 k8s-ctr <none> <none>

# webpod-697b545f57-d7dn8 1/1 Running 0 9m33s 172.20.1.197 k8s-w1 <none> <none>

# webpod-697b545f57-j6b7t 1/1 Running 0 138m 172.20.0.72 k8s-ctr <none> <none>

# webpod-697b545f57-rl4d2 1/1 Running 0 138m 172.20.1.7 k8s-w1 <none> <none>

$ kubectl scale deployment webpod --replicas 0

$ kubectl scale deployment webpod --replicas 3

$ kubectl get pod -owide

# => NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

# curl-pod 1/1 Running 0 138m 172.20.0.187 k8s-ctr <none> <none>

# webpod-697b545f57-h4lmx 1/1 Running 0 9s 172.20.1.12 k8s-w1 <none> <none>

# webpod-697b545f57-q8bzk 1/1 Running 0 9s 172.20.0.161 k8s-ctr <none> <none>

# webpod-697b545f57-ssc5l 1/1 Running 0 9s 172.20.2.143 <span style="color: green;">k8s-w0</span> <none> <none>

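# <span style="color: green;">👉 Scaling webpod down to 0 replicas and back up to 3 spreads the pods across the nodes again.</span>

Turning off the CRD status report

- On large clusters with many nodes, status reporting can put load on the API server, so turning BGP status reporting off is recommended. Docs

# Verify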

$ kubectl get ciliumbgpnodeconfigs -o yaml | yq

# => ...

# status:

# bgpInstances:

# - localASN: 65001

# name: instance-65001

# peers:

# ...

# Configure

$ helm upgrade cilium cilium/cilium --version 1.18.0 --namespace kube-system --reuse-values \

--set bgpControlPlane.statusReport.enabled=false

# => Release "cilium" has been upgraded. Happy Helming!

$ kubectl -n kube-system rollout restart ds/cilium

# => daemonset.apps/cilium restarted

# Verify: the CiliumBGPNodeConfig status is now empty!

$ kubectl get ciliumbgpnodeconfigs -o yaml | yq

# => ...

# "status": {}

# ...

Advertising Service (LoadBalancer - ExternalIP) IPs over BGP

- Cilium BGP can advertise Service (LoadBalancer - ExternalIP) IPs over BGP, so it can replace MetalLB.

# The LB IPAM addresses will be announced over BGP, so the pool does not have to be inside the nodes' network range!

$ cat << EOF | kubectl apply -f -
apiVersion: "cilium.io/v2"
kind: CiliumLoadBalancerIPPool
metadata:
  name: "cilium-pool"
spec:
  allowFirstLastIPs: "No"
  blocks:
  - cidr: "172.16.1.0/24"
EOF

# => ciliumloadbalancerippool.cilium.io/cilium-pool created

$ kubectl get ippool

# => NAME DISABLED CONFLICTING IPS AVAILABLE AGE

# cilium-pool false False 254 16s

#

$ kubectl patch svc webpod -p '{"spec": {"type": "LoadBalancer"}}'

$ kubectl get svc webpod

# => NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

# webpod LoadBalancer 10.96.134.232 172.16.1.1 80:31973/TCP 7m5s

$ kubectl get ippool

# => NAME DISABLED CONFLICTING IPS AVAILABLE AGE

# cilium-pool false False <span style="color: green;">253</span> 8m25s

# <span style="color: green;">👉 One External IP has been allocated, so the pool now has 253 addresses available.</span>

$ kubectl describe svc webpod | grep 'Traffic Policy'

# => External Traffic Policy: Cluster

# Internal Traffic Policy: Cluster

$ kubectl -n kube-system exec ds/cilium -c cilium-agent -- cilium-dbg service list

# => ID Frontend Service Type Backend

# ...

# 16 <span style="color: green;">172.16.1.1:80/TCP</span> LoadBalancer 1 => 172.20.0.123:80/TCP (active)

# 2 => 172.20.1.72:80/TCP (active)

# 3 => 172.20.2.138:80/TCP (active)

# <span style="color: green;">👉 The ExternalIP is mapped to each pod IP as a backend.</span>

# Send curl requests to the LB IP

$ kubectl get svc webpod -o jsonpath='{.status.loadBalancer.ingress[0].ip}'

# => 172.16.1.1

$ LBIP=$(kubectl get svc webpod -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

$ curl -s $LBIP

$ curl -s $LBIP | grep Hostname

# => Hostname: webpod-697b545f57-2kvd9

$ curl -s $LBIP | grep RemoteAddr

# => RemoteAddr: 192.168.10.100:60634

$ curl -s $LBIP | grep RemoteAddr

# => RemoteAddr: 172.20.0.201:60184

# <span style="color: green;">👉 RemoteAddr shows the cilium_host IP when the request lands on a pod on the same node, and the node IP when it lands on a pod on another node.</span>

# Monitoring

$ watch "sshpass -p 'vagrant' ssh vagrant@router ip -c route"

# => default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

# 10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

# 10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# 10.10.1.0/24 dev loop1 proto kernel scope link src 10.10.1.200

# 10.10.2.0/24 dev loop2 proto kernel scope link src 10.10.2.200

# 172.20.0.0/24 nhid 60 via 192.168.10.100 dev eth1 proto bgp metric 20

# 172.20.1.0/24 nhid 64 via 192.168.10.101 dev eth1 proto bgp metric 20

# 172.20.2.0/24 nhid 62 via 192.168.20.100 dev eth2 proto bgp metric 20

# 192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.200

# 192.168.20.0/24 dev eth2 proto kernel scope link src 192.168.20.200

# Advertise the LB External IP over BGP

$ cat << EOF | kubectl apply -f -
apiVersion: cilium.io/v2
kind: CiliumBGPAdvertisement
metadata:
  name: bgp-advertisements-lb-exip-webpod
  labels:
    advertise: bgp
spec:
  advertisements:
    - advertisementType: "Service"
      service:
        addresses:
          - LoadBalancerIP
      selector:
        matchExpressions:
          - { key: app, operator: In, values: [ webpod ] }
EOF

$ sshpass -p 'vagrant' ssh vagrant@router ip -c route

# => ...

# 172.16.1.1 nhid 70 proto bgp metric 20

# <span style="color: green;">nexthop via 192.168.10.100 dev eth1 weight 1</span> # <span style="color: green;">👉 These three nexthop lines were added after the BGP advertisement.</span>

# <span style="color: green;">nexthop via 192.168.20.100 dev eth2 weight 1</span>

# <span style="color: green;">nexthop via 192.168.10.101 dev eth1 weight 1</span>

# ...

$ kubectl get CiliumBGPAdvertisement

# => NAME AGE

# bgp-advertisements 16m

# bgp-advertisements-lb-exip-webpod 3m

# Verify

$ kubectl exec -it -n kube-system ds/cilium -- cilium-dbg bgp route-policies

# => VRouter Policy Name Type Match Peers Match Families Match Prefixes (Min..Max Len) RIB Action Path Actions

# 65001 allow-local import accept

# 65001 tor-switch-ipv4-PodCIDR export 192.168.10.200/32 172.20.1.0/24 (24..24) accept

# 65001 tor-switch-ipv4-Service-webpod-default-LoadBalancerIP export 192.168.10.200/32 172.16.1.1/32 (32..32) accept

$ cilium bgp routes available ipv4 unicast

# => Node VRouter Prefix NextHop Age Attrs

# k8s-ctr 65001 172.16.1.1/32 0.0.0.0 3m36s [{Origin: i} {Nexthop: 0.0.0.0}]

# 65001 172.20.0.0/24 0.0.0.0 12m38s [{Origin: i} {Nexthop: 0.0.0.0}]

# k8s-w0 65001 172.16.1.1/32 0.0.0.0 3m35s [{Origin: i} {Nexthop: 0.0.0.0}]

# 65001 172.20.2.0/24 0.0.0.0 12m25s [{Origin: i} {Nexthop: 0.0.0.0}]

# k8s-w1 65001 172.16.1.1/32 0.0.0.0 3m35s [{Origin: i} {Nexthop: 0.0.0.0}]

# 65001 172.20.1.0/24 0.0.0.0 12m40s [{Origin: i} {Nexthop: 0.0.0.0}]

# Traffic can now be delivered to every node that runs BGP!

$ sshpass -p 'vagrant' ssh vagrant@router ip -c route

# => ...

# 172.16.1.1 nhid 70 proto bgp metric 20

# nexthop via 192.168.10.100 dev eth1 weight 1

# nexthop via 192.168.20.100 dev eth2 weight 1

# nexthop via 192.168.10.101 dev eth1 weight 1

$ sshpass -p 'vagrant' ssh vagrant@router "sudo vtysh -c 'show ip route bgp'"

# => B>* 172.16.1.1/32 [20/0] via 192.168.10.100, eth1, weight 1, 00:04:10

# * via 192.168.10.101, eth1, weight 1, 00:04:10

# * via 192.168.20.100, eth2, weight 1, 00:04:10

# ...

# <span style="color: green;">👉 Entries beginning with `B>` are the routes the router learned via BGP.</span>

$ sshpass -p 'vagrant' ssh vagrant@router "sudo vtysh -c 'show ip bgp summary'"

# => Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt Desc

# 192.168.10.100 4 65001 332 335 0 0 0 00:14:00 2 5 N/A

# 192.168.10.101 4 65001 331 334 0 0 0 00:14:02 2 5 N/A

# 192.168.20.100 4 65001 333 337 0 0 0 00:13:47 2 5 N/A

$ sshpass -p 'vagrant' ssh vagrant@router "sudo vtysh -c 'show ip bgp'"

# => Network Next Hop Metric LocPrf Weight Path

# ...

# *= 172.16.1.1/32 192.168.20.100 0 65001 i # * valid, > best, = multipath

# *> 192.168.10.100 0 65001 i

# *= 192.168.10.101 0 65001 i

# <span style="color: green;">👉 The prefix is registered as multipath in the BGP table.</span>

$ sshpass -p 'vagrant' ssh vagrant@router "sudo vtysh -c 'show ip bgp 172.16.1.1/32'"

# => BGP routing table entry for 172.16.1.1/32, version 7

# Paths: (<span style="color: green;">3 available</span>, best #2, table default)

# Advertised to non peer-group peers:

# 192.168.10.100 192.168.10.101 192.168.20.100

# 65001

# 192.168.20.100 from 192.168.20.100 (192.168.20.100)

# Origin IGP, valid, external, multipath

# Last update: Sat Aug 16 16:19:43 2025

# 65001

# 192.168.10.100 from 192.168.10.100 (192.168.10.100)

# Origin IGP, valid, external, multipath, best (Router ID)

# Last update: Sat Aug 16 16:19:43 2025

# 65001

# 192.168.10.101 from 192.168.10.101 (192.168.10.101)

# Origin IGP, valid, external, multipath

# Last update: Sat Aug 16 16:19:43 2025
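
The multipath entry also shows up in the kernel as one route with several `nexthop` lines. The sketch below pulls the next-hop addresses for one prefix out of plain `ip route` output (no `-c` color codes), assuming the layout shown above: a header line starting with the prefix, followed by indented `nexthop via ...` lines. `ecmp_nexthops` is a hypothetical helper name.

```shell
# Sketch only: print the next-hop addresses the kernel holds for one prefix.
# Usage: ip route | ecmp_nexthops 172.16.1.1
ecmp_nexthops() {
  awk -v p="$1" '
    $1 == p {
      # Header line of the prefix; a single-path route carries "via" here.
      in_route = 1
      for (i = 2; i < NF; i++) if ($i == "via") print $(i + 1)
      next
    }
    # Continuation lines of a multipath route: "nexthop via <ip> dev ...".
    in_route && $1 == "nexthop" { print $3; next }
    # Any other line ends the current route.
    { in_route = 0 }
  '
}
```

Piping the result through `grep -c ''` gives the number of equal-cost paths, which should match the `maximum-paths` limit or the number of advertising nodes, whichever is smaller.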

Calling the LB External IP from the router

#

$ LBIP=172.16.1.1

$ curl -s $LBIP

$ curl -s $LBIP | grep Hostname

# => Hostname: webpod-697b545f57-2kvd9

$ curl -s $LBIP | grep RemoteAddr

# => RemoteAddr: 192.168.10.100:35378

# Repeated requests

$ for i in {1..100}; do curl -s $LBIP | grep Hostname; done | sort | uniq -c | sort -nr

# => 34 Hostname: webpod-697b545f57-lz8mc

# 33 Hostname: webpod-697b545f57-gzzdb

# 33 Hostname: webpod-697b545f57-2kvd9

$ while true; do curl -s $LBIP | egrep 'Hostname|RemoteAddr' ; sleep 0.1; done

# => Hostname: webpod-697b545f57-2kvd9

# RemoteAddr: 192.168.10.100:46014

# Hostname: webpod-697b545f57-lz8mc

# RemoteAddr: 192.168.10.100:38402

# Hostname: webpod-697b545f57-gzzdb

# RemoteAddr: 172.20.0.201:44744

# ...

# To make the calls easier to trace, scale down to replicas=2 from k8s-ctr.

$ kubectl scale deployment webpod --replicas 2

# => deployment.apps/webpod scaled

$ kubectl get pod -owide

# => NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

# webpod-697b545f57-nhdcd 1/1 Running 0 3s 172.20.1.130 k8s-w1 <none> <none>

# webpod-697b545f57-t7wb4 1/1 Running 0 3s 172.20.2.198 k8s-w0 <none> <none>

# ...

$ cilium bgp routes

# => Node VRouter Prefix NextHop Age Attrs

# k8s-ctr 65001 172.16.1.1/32 0.0.0.0 10m58s [{Origin: i} {Nexthop: 0.0.0.0}]

# 65001 172.20.0.0/24 0.0.0.0 20m0s [{Origin: i} {Nexthop: 0.0.0.0}]

# k8s-w0 65001 172.16.1.1/32 0.0.0.0 10m58s [{Origin: i} {Nexthop: 0.0.0.0}]

# 65001 172.20.2.0/24 0.0.0.0 19m48s [{Origin: i} {Nexthop: 0.0.0.0}]

# k8s-w1 65001 172.16.1.1/32 0.0.0.0 10m57s [{Origin: i} {Nexthop: 0.0.0.0}]

# 65001 172.20.1.0/24 0.0.0.0 20m2s [{Origin: i} {Nexthop: 0.0.0.0}]

# <span style="color: green;">👉 After scaling down to 2 pods, webpod runs only on k8s-w0 and k8s-w1, yet k8s-ctr still advertises its PodCIDR and the LB ExtIP route.</span>

# <span style="color: green;"> Traffic to the LB ExtIP can therefore arrive at k8s-ctr, be served by a webpod on k8s-w0 or k8s-w1, and have its response return through k8s-ctr,</span>

# <span style="color: green;"> which adds overhead. We will look at how to solve this later.</span>

# Check on the router: no target pod runs on the k8s-ctr node, but the route through it is still installed.

$ ip -c route

$ vtysh -c 'show ip bgp summary'

# => Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd PfxSnt Desc

# 192.168.10.100 4 65001 548 552 0 0 0 00:24:49 2 5 N/A

# 192.168.10.101 4 65001 547 550 0 0 0 00:24:51 2 5 N/A

# 192.168.20.100 4 65001 550 553 0 0 0 00:24:36 2 5 N/A

$ vtysh -c 'show ip bgp'

# => Network Next Hop Metric LocPrf Weight Path

# ...

# *= 172.16.1.1/32 192.168.20.100 0 65001 i

# *> <span style="color: green;">192.168.10.100</span> 0 65001 i

# *= 192.168.10.101 0 65001 i

$ vtysh -c 'show ip bgp 172.16.1.1/32'

# => BGP routing table entry for 172.16.1.1/32, version 7

# Paths: (3 available, best #2, table default)

# Advertised to non peer-group peers:

# <span style="color: green;">192.168.10.100</span> 192.168.10.101 192.168.20.100

# 65001

# 192.168.20.100 from 192.168.20.100 (192.168.20.100)

# Origin IGP, valid, external, multipath

# Last update: Sat Aug 16 16:19:42 2025

# 65001

# <span style="color: green;">192.168.10.100</span> from 192.168.10.100 (192.168.10.100)

# Origin IGP, valid, external, multipath, best (Router ID)

# Last update: Sat Aug 16 16:19:42 2025

# 65001

# 192.168.10.101 from 192.168.10.101 (192.168.10.101)

# Origin IGP, valid, external, multipath

# Last update: Sat Aug 16 16:19:42 2025

$ vtysh -c 'show ip route bgp'

# => B>* 172.16.1.1/32 [20/0] via <span style="color: green;">192.168.10.100</span>, eth1, weight 1, 00:17:01

# * via 192.168.10.101, eth1, weight 1, 00:17:01

# * via 192.168.20.100, eth2, weight 1, 00:17:01

# ...

# [k8s-ctr] Repeated requests

$ for i in {1..100}; do curl -s $LBIP | grep Hostname; done | sort | uniq -c | sort -nr

# => 52 Hostname: webpod-697b545f57-t7wb4

# 48 Hostname: webpod-697b545f57-nhdcd

# <span style="color: green;">👉 With replicas set to 2, the webpod pods run on k8s-w0 and k8s-w1, so two Hostnames appear.</span>

$ while true; do curl -s $LBIP | egrep 'Hostname|RemoteAddr' ; sleep 0.1; done

# => Hostname: webpod-697b545f57-t7wb4

# RemoteAddr: 192.168.10.100:43914

# Hostname: webpod-697b545f57-t7wb4

# RemoteAddr: 192.168.10.100:43920

# Hostname: webpod-697b545f57-nhdcd

# RemoteAddr: 192.168.10.100:53840

# ...

# <span style="color: green;">👉 The request reaches a webpod on another node, so the NodeIP of k8s-ctr appears as RemoteAddr.</span>

# New terminals (3): k8s-ctr, k8s-w1, k8s-w0

$ tcpdump -i eth1 -A -s 0 -nn 'tcp port 80'

# Check whether traffic passes through k8s-ctr: look at the ExternalTrafficPolicy setting

$ LBIP=172.16.1.1

$ curl -s $LBIP

$ curl -s $LBIP

$ curl -s $LBIP

$ curl -s $LBIP

# <span style="color: green;">👉 Requests are served by k8s-w0 or k8s-w1, and the packets pass through eth1 on k8s-ctr.</span>
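
One commonly used knob for this detour is the Service's `externalTrafficPolicy`. With `Local`, Kubernetes delivers external traffic only to endpoints on the node that received it, and the Cilium BGP documentation describes the LoadBalancer IP as being advertised only from nodes that have a local endpoint in that mode. The sketch below only builds the patch document; `etp_patch` is a hypothetical helper name, and whether `Local` fits depends on the workload, so treat it as an experiment rather than the fix this post settles on.

```shell
# Sketch only: build the JSON patch for a Service's externalTrafficPolicy.
# Accepts the two values Kubernetes defines (Cluster, Local) and rejects anything else.
etp_patch() {
  case "$1" in
    Cluster|Local)
      printf '{"spec":{"externalTrafficPolicy":"%s"}}\n' "$1"
      ;;
    *)
      echo "unsupported policy: $1" >&2
      return 1
      ;;
  esac
}
```

It could be applied with `kubectl patch svc webpod -p "$(etp_patch Local)"`, after which the router's `show ip bgp 172.16.1.1/32` output can be compared with the three-path result above.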

Kind

Introducing Kind

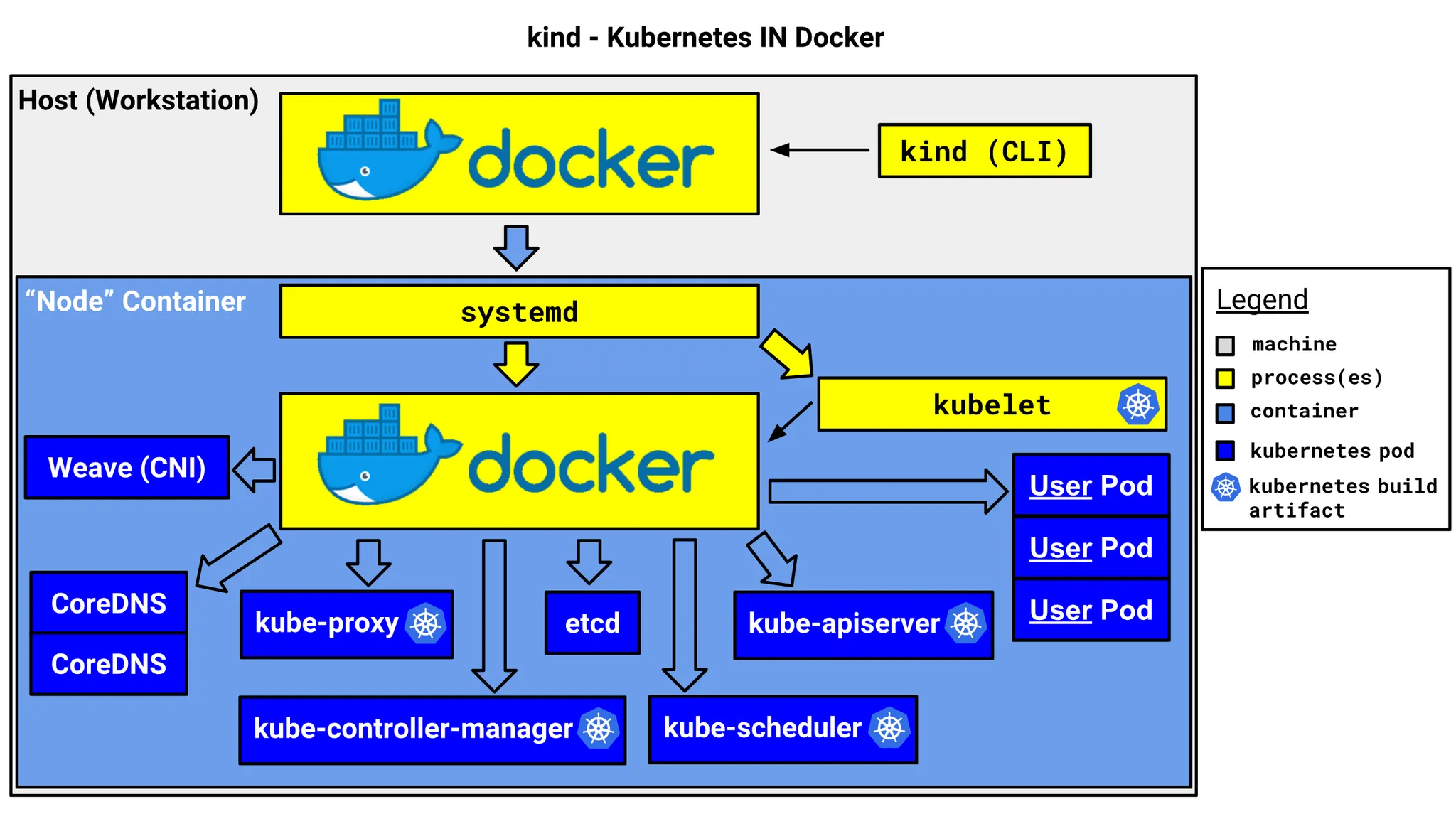

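- Kind is short for Kubernetes in Docker, a tool that makes it easy to build a Kubernetes cluster on a local machine.

- As the name suggests, it builds the cluster from Docker containers, which makes it easy to use in many environments.

- Kind supports multi-node clusters, including HA, but it is recommended only for testing and experimentation.

- Kind uses kubeadm to bootstrap the cluster.

- Kind introduction and installation, Kind official documentation

Installing Kind

- The installation below targets an Apple Silicon Mac.

Installing Docker Desktop and the required tools

- Install Docker Desktop - Link

- Lab environment: allocating at least 4 vCPUs and 8 GB of memory to the Docker engine that Kind uses is recommended. - Link

- Install the required tools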

# Install Kind
$ brew install kind
$ kind --version
# => kind version 0.29.0

# Install kubectl
$ brew install kubernetes-cli
$ kubectl version --client=true
# => Client Version: v1.33.1

## Set up the kubectl -> k shortcut
$ echo "alias k=kubectl" >> ~/.zshrc

# Install Helm
$ brew install helm
$ helm version
# => version.BuildInfo{Version:"v3.18.2", GitCommit:"04cad4610054e5d546aa5c5d9c1b1d5cf68ec1f8", GitTreeState:"clean", GoVersion:"go1.24.3"}

- (Optional) Install useful tools

# Install the tools
$ brew install krew
$ brew install kube-ps1
$ brew install kubectx

# Highlight kubectl output
$ brew install kubecolor
$ echo "alias kubectl=kubecolor" >> ~/.zshrc
$ echo "compdef kubecolor=kubectl" >> ~/.zshrc

kind basics - deploying and inspecting a cluster

If you already have a kubeconfig, back it up before trying kind, or point the KUBECONFIG variable at a separate file as shown below.

- (Optional) Use a separate kubeconfig

# Option 1: set the environment variable
$ export KUBECONFIG=/Users/<Username>/Downloads/kind/config
# Option 2: alternatively, pass --kubeconfig ./config

# Create the cluster
$ kind create cluster

# Check the kubeconfig file
$ ls -l /Users/<Username>/Downloads/kind/config
# => -rw------- 1 <Username> staff 5608 4 24 09:05 /Users/<Username>/Downloads/kind/config

# Check pod information
$ kubectl get pod -A

# Delete the cluster
$ kind delete cluster
$ unset KUBECONFIG

- Deploy and inspect a Kind cluster

# Check before deploying the cluster
$ docker ps
# => CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

# Create a cluster with kind
$ kind create cluster
# => Creating cluster "kind" ...
# ✓ Ensuring node image (kindest/node:v1.33.1) 🖼
# ✓ Preparing nodes 📦
# ✓ Writing configuration 📜
# ✓ Starting control-plane 🕹️
# ✓ Installing CNI 🔌
# ✓ Installing StorageClass 💾
# Set kubectl context to "kind-kind"
# You can now use your cluster with:
#
# kubectl cluster-info --context kind-kind

# Verify the cluster deployment
$ kind get clusters
# => kind
$ kind get nodes
# => kind-control-plane
$ kubectl cluster-info
# => Kubernetes control plane is running at https://127.0.0.1:56460
# CoreDNS is running at https://127.0.0.1:56460/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy
#
# To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

# Check node information
$ kubectl get node -o wide
# => NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME
# kind-control-plane Ready control-plane 48s v1.33.1 172.20.0.2 <none> Debian GNU/Linux 12 (bookworm) 5.10.76-linuxkit containerd://2.1.1

# Check pod information
$ kubectl get pod -A
# => NAMESPACE NAME READY STATUS RESTARTS AGE
# kube-system coredns-674b8bbfcf-922ww 1/1 Running 0 49s
# kube-system coredns-674b8bbfcf-mm68k 1/1 Running 0 49s
# kube-system etcd-kind-control-plane 1/1 Running 0 55s
# kube-system kindnet-cvgzn 1/1 Running 0 49s
# kube-system kube-apiserver-kind-control-plane 1/1 Running 0 55s
# kube-system kube-controller-manager-kind-control-plane 1/1 Running 0 55s
# kube-system kube-proxy-xkczv 1/1 Running 0 49s
# kube-system kube-scheduler-kind-control-plane 1/1 Running 0 55s
# local-path-storage local-path-provisioner-7dc846544d-th5jx 1/1 Running 0 49s
$ kubectl get componentstatuses
# => NAME STATUS MESSAGE ERROR
# scheduler Healthy ok
# controller-manager Healthy ok
# etcd-0 Healthy ok

# One control-plane node is running (as a container)
$ docker ps
# => CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
# d2b1bc35791a kindest/node:v1.33.1 "/usr/local/bin/entr…" About a minute ago Up About a minute 127.0.0.1:56460->6443/tcp kind-control-plane
$ docker images
# => REPOSITORY TAG IMAGE ID CREATED SIZE
# kindest/node <none> 071dd73121e8 2 months ago 1.09GB

# Check the kube config file
$ cat ~/.kube/config
# or
$ cat $KUBECONFIG # when the KUBECONFIG variable is set
# <span style="color: green;">👉 The kind cluster entries have been added to the existing kube config.</span>
# <span style="color: green;"> Because current-context is kind-kind, kubectl commands now run against the kind cluster.</span>

# Deploy an nginx pod and check it: will a pod be scheduled on a control-plane node?
$ kubectl run nginx --image=nginx:alpine
# => pod/nginx created
$ kubectl get pod -owide
# => NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
# nginx 1/1 Running 0 11s 10.244.0.5 kind-control-plane <none> <none>
# <span style="color: green;">👉 The pod is scheduled!</span>

# Check the Taints on the node
$ kubectl describe node | grep Taints
# => Taints: <none>
# <span style="color: green;">👉 The node is a control plane, but it has no Taints, so the pod is scheduled on it.</span>

# Delete the cluster
$ kind delete cluster
# => Deleting cluster "kind" ...

# Confirm that the kube config entries are removed
$ cat ~/.kube/config
# or
$ cat $KUBECONFIG # when the KUBECONFIG variable is set
# <span style="color: green;">👉 The kind cluster entries have been removed.</span>

- Next, deploy a kind cluster with three worker nodes and check its basic information.

# Check before deploying the cluster
$ docker ps
# => CONTAINER ID   IMAGE   COMMAND   CREATED   STATUS   PORTS   NAMES

# Create a cluster with kind
$ kind create cluster --name myk8s --image kindest/node:v1.32.2 --config - <<EOF
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
  extraPortMappings:
  - containerPort: 30000
    hostPort: 30000
  - containerPort: 30001
    hostPort: 30001
  - containerPort: 30002
    hostPort: 30002
  - containerPort: 30003
    hostPort: 30003
- role: worker
- role: worker
- role: worker
EOF
# => Creating cluster "myk8s" ...
#     ✓ Ensuring node image (kindest/node:v1.32.2) 🖼
#     ✓ Preparing nodes 📦 📦 📦 📦
#     ✓ Writing configuration 📜
#     ✓ Starting control-plane 🕹️
#     ✓ Installing CNI 🔌
#     ✓ Installing StorageClass 💾
#     ✓ Joining worker nodes 🚜
#    Set kubectl context to "kind-myk8s"
#    You can now use your cluster with:
#
#    kubectl cluster-info --context kind-myk8s
#    Have a nice day! 👋

# Verify
$ kind get nodes --name myk8s
# => myk8s-worker3
#    myk8s-worker2
#    myk8s-control-plane
#    myk8s-worker
$ kubens default
# => Context "kind-myk8s" modified.
#    Active namespace is "default".

# kind creates and uses a separate Docker network (172.20.0.0/16 in this environment)
$ docker network ls
# => NETWORK ID     NAME     DRIVER   SCOPE
#    db5d49a84cd0   bridge   bridge   local
#    8204a0851463   host     host     local
#    3bbcc6aa8f38   kind     bridge   local
$ docker inspect kind | jq
# => ...
#    "IPAM": {
#      "Config": [
#        {
#          "Subnet": "172.20.0.0/16",
#          "Gateway": "172.20.0.1"
#    ...

# Check the k8s API address: how is it reachable from the local machine?
$ kubectl cluster-info
# => Kubernetes control plane is running at https://127.0.0.1:57349
#    CoreDNS is running at https://127.0.0.1:57349/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy
#
#    To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

# Check node information: the CRI is containerd
$ kubectl get node -o wide
# => NAME                  STATUS   ROLES           AGE     VERSION   INTERNAL-IP   EXTERNAL-IP   OS-IMAGE                         KERNEL-VERSION     CONTAINER-RUNTIME
#    myk8s-control-plane   Ready    control-plane   3m36s   v1.32.2   172.20.0.5    <none>        Debian GNU/Linux 12 (bookworm)   5.10.76-linuxkit   containerd://2.0.3
#    myk8s-worker          Ready    <none>          3m26s   v1.32.2   172.20.0.2    <none>        Debian GNU/Linux 12 (bookworm)   5.10.76-linuxkit   containerd://2.0.3
#    myk8s-worker2         Ready    <none>          3m26s   v1.32.2   172.20.0.3    <none>        Debian GNU/Linux 12 (bookworm)   5.10.76-linuxkit   containerd://2.0.3
#    myk8s-worker3         Ready    <none>          3m26s   v1.32.2   172.20.0.4    <none>        Debian GNU/Linux 12 (bookworm)   5.10.76-linuxkit   containerd://2.0.3

# Check pod information: the CNI is kindnet
$ kubectl get pod -A -o wide
# => NAMESPACE            NAME                                          READY   STATUS    RESTARTS   AGE     IP           NODE                  NOMINATED NODE   READINESS GATES
#    kube-system          coredns-668d6bf9bc-2d5fq                      1/1     Running   0          3m37s   10.244.0.3   myk8s-control-plane   <none>           <none>
#    kube-system          coredns-668d6bf9bc-f8vg2                      1/1     Running   0          3m37s   10.244.0.4   myk8s-control-plane   <none>           <none>
#    kube-system          etcd-myk8s-control-plane                      1/1     Running   0          3m45s   172.20.0.5   myk8s-control-plane   <none>           <none>
#    kube-system          kindnet-b47qh                                 1/1     Running   0          3m36s   172.20.0.2   myk8s-worker          <none>           <none>
#    kube-system          kindnet-fx7pl                                 1/1     Running   0          3m38s   172.20.0.5   myk8s-control-plane   <none>           <none>
#    kube-system          kindnet-jr9g8                                 1/1     Running   0          3m36s   172.20.0.4   myk8s-worker3         <none>           <none>
#    kube-system          kindnet-sck5c                                 1/1     Running   0          3m36s   172.20.0.3   myk8s-worker2         <none>           <none>
#    kube-system          kube-apiserver-myk8s-control-plane            1/1     Running   0          3m45s   172.20.0.5   myk8s-control-plane   <none>           <none>
#    kube-system          kube-controller-manager-myk8s-control-plane   1/1     Running   0          3m45s   172.20.0.5   myk8s-control-plane   <none>           <none>
#    kube-system          kube-proxy-5vlx4                              1/1     Running   0          3m36s   172.20.0.4   myk8s-worker3         <none>           <none>
#    kube-system          kube-proxy-8kz4j                              1/1     Running   0          3m36s   172.20.0.3   myk8s-worker2         <none>           <none>
#    kube-system          kube-proxy-rlnwn                              1/1     Running   0          3m37s   172.20.0.5   myk8s-control-plane   <none>           <none>
#    kube-system          kube-proxy-znszc                              1/1     Running   0          3m36s   172.20.0.2   myk8s-worker          <none>           <none>
#    kube-system          kube-scheduler-myk8s-control-plane            1/1     Running   0          3m45s   172.20.0.5   myk8s-control-plane   <none>           <none>
#    local-path-storage   local-path-provisioner-7dc846544d-h5xp6       1/1     Running   0          3m37s   10.244.0.2   myk8s-control-plane   <none>           <none>

# Check namespaces: these are Kubernetes namespaces, which differ from the namespaces covered with Docker containers!
$ kubectl get namespaces
# => NAME                 STATUS   AGE
#    default              Active   5m31s
#    kube-node-lease      Active   5m31s
#    kube-public          Active   5m31s
#    kube-system          Active   5m31s
#    local-path-storage   Active   5m23s

# Check the control-plane/worker nodes (containers): the Docker container names are myk8s-control-plane and myk8s-worker/2/3
$ docker ps
# => CONTAINER ID   IMAGE                  COMMAND                  CREATED         STATUS         PORTS                                                             NAMES
#    98bc90974283   kindest/node:v1.32.2   "/usr/local/bin/entr…"   6 minutes ago   Up 5 minutes                                                                     myk8s-worker3
#    4bf34678cbed   kindest/node:v1.32.2   "/usr/local/bin/entr…"   6 minutes ago   Up 5 minutes                                                                     myk8s-worker2
#    f85eef8fcd53   kindest/node:v1.32.2   "/usr/local/bin/entr…"   6 minutes ago   Up 5 minutes   0.0.0.0:30000-30003->30000-30003/tcp, 127.0.0.1:57349->6443/tcp   myk8s-control-plane
#    1f27bfafc1ac   kindest/node:v1.32.2   "/usr/local/bin/entr…"   6 minutes ago   Up 5 minutes                                                                     myk8s-worker
# 👉 The control plane's API server (6443/tcp) is published on host port 57349.
#    As kubectl cluster-info showed, kubectl connects to 127.0.0.1:57349,
#    which is why kubectl works from the host.

$ docker images
# => REPOSITORY     TAG       IMAGE ID       CREATED        SIZE
#    kindest/node   <none>    071dd73121e8   2 months ago   1.09GB
#    kindest/node   v1.32.2   b5193db9db63   5 months ago   1.06GB
# 👉 Because version 1.32.2 was specified at cluster creation, the kindest/node:v1.32.2 image was downloaded.

$ docker exec -it myk8s-control-plane ss -tnlp
# => State    Recv-Q   Send-Q   Local Address:Port   Peer Address:Port   Process
#    LISTEN   0        4096     127.0.0.1:10257      0.0.0.0:*           users:(("kube-controller",pid=584,fd=3))
#    LISTEN   0        4096     127.0.0.1:10259      0.0.0.0:*           users:(("kube-scheduler",pid=522,fd=3))
#    LISTEN   0        4096     127.0.0.1:34873      0.0.0.0:*           users:(("containerd",pid=105,fd=11))
#    LISTEN   0        4096     127.0.0.1:10248      0.0.0.0:*           users:(("kubelet",pid=705,fd=17))
#    LISTEN   0        4096     127.0.0.1:10249      0.0.0.0:*           users:(("kube-proxy",pid=890,fd=12))
#    LISTEN   0        4096     172.20.0.5:2379      0.0.0.0:*           users:(("etcd",pid=646,fd=9))
#    LISTEN   0        4096     127.0.0.1:2379       0.0.0.0:*           users:(("etcd",pid=646,fd=8))
#    LISTEN   0        4096     172.20.0.5:2380      0.0.0.0:*           users:(("etcd",pid=646,fd=7))
#    LISTEN   0        4096     127.0.0.1:2381       0.0.0.0:*           users:(("etcd",pid=646,fd=13))
#    LISTEN   0        1024     127.0.0.11:38253     0.0.0.0:*
#    LISTEN   0        4096     *:10250              *:*                 users:(("kubelet",pid=705,fd=11))
#    LISTEN   0        4096     *:6443               *:*                 users:(("kube-apiserver",pid=562,fd=3))
#    LISTEN   0        4096     *:10256              *:*                 users:(("kube-proxy",pid=890,fd=10))

# Verbose (debug) output shows the credentials being loaded from ~/.kube/config
$ kubectl get pod -v6
# => I0816 17:31:00.340008   21457 loader.go:402] Config loaded from file:  /Users/anonym/.kube/config
#    I0816 17:31:00.342176   21457 envvar.go:172] "Feature gate default state" feature="ClientsAllowCBOR" enabled=false
#    I0816 17:31:00.342185   21457 envvar.go:172] "Feature gate default state" feature="ClientsPreferCBOR" enabled=false
#    I0816 17:31:00.342188   21457 envvar.go:172] "Feature gate default state" feature="InformerResourceVersion" enabled=false
#    I0816 17:31:00.342190   21457 envvar.go:172] "Feature gate default state" feature="InOrderInformers" enabled=true
#    I0816 17:31:00.342212   21457 envvar.go:172] "Feature gate default state" feature="WatchListClient" enabled=false
#    I0816 17:31:00.378209   21457 round_trippers.go:632] "Response" verb="GET" url="https://127.0.0.1:57349/api/v1/namespaces/default/pods?limit=500" status="200 OK" milliseconds=29
#    No resources found in default namespace.

# Check the kube config file
$ cat ~/.kube/config
# or
$ cat $KUBECONFIG
# 👉 Entries for the kind-myk8s cluster have been added.
#    Because current-context is kind-myk8s, kubectl commands now run against the kind-myk8s cluster.

- Delete the kind cluster

# Delete the cluster
$ kind delete cluster --name myk8s
# => Deleting cluster "myk8s" ...
#    Deleted nodes: ["myk8s-worker3" "myk8s-worker2" "myk8s-control-plane" "myk8s-worker"]

# Confirm that no node containers remain
$ docker ps
# => CONTAINER ID   IMAGE   COMMAND   CREATED   STATUS   PORTS   NAMES

# Confirm that the myk8s entries are gone from the kube config
$ cat ~/.kube/config
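
The multi-node walkthrough above explained that kubectl reaches the cluster because the control plane's 6443/tcp is published on a host port. As a minimal sketch of that lookup, the snippet below extracts the host port from the PORTS column of `docker ps`. The helper name `api_host_port` is hypothetical (it is not part of kind or Docker), and the sample string is copied from the `myk8s-control-plane` row shown earlier.

```shell
#!/bin/sh
# Sketch: find the host port that a kind control-plane container publishes for 6443/tcp.
# api_host_port is a hypothetical helper for illustration only.
api_host_port() {
  # $1: the PORTS column text from `docker ps`, e.g. "a->b/tcp, c->6443/tcp"
  # Split the mappings on commas, then keep only the one that targets 6443/tcp
  # and print the host-side port in front of the "->".
  printf '%s\n' "$1" | tr ',' '\n' | sed -n 's/.*:\([0-9][0-9]*\)->6443\/tcp.*/\1/p'
}

# PORTS value taken from the myk8s-control-plane row in the docker ps output above
ports='0.0.0.0:30000-30003->30000-30003/tcp, 127.0.0.1:57349->6443/tcp'
echo "API server host port: $(api_host_port "$ports")"
```

With the sample value this prints `API server host port: 57349`, which matches the address reported by `kubectl cluster-info`. On a running cluster, `docker port myk8s-control-plane 6443/tcp` should report the same mapping directly.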

Cluster Mesh

The distributed behavior of ClusterMesh in Cilium 1.18 currently appears to have an issue, so this lab uses version 1.17.6 instead.

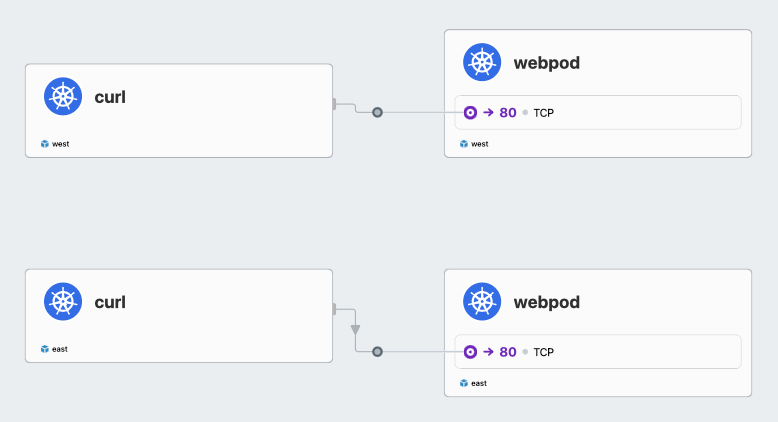

- Cluster Mesh is a Cilium feature that connects multiple Kubernetes clusters so that the network extends across them.

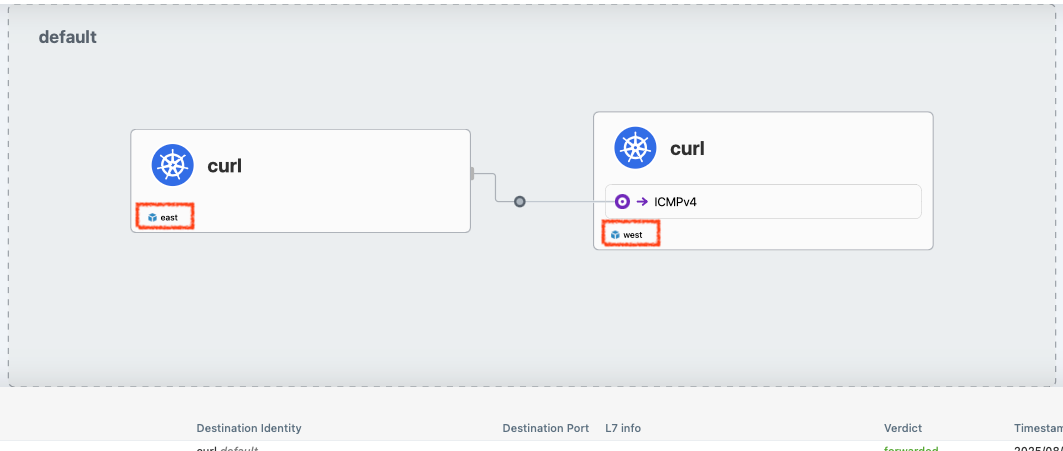
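
Because Cluster Mesh spans more than one cluster, working with it means addressing each cluster through its own kubectl context. The sketch below assumes the two clusters are named west and east, as in this lab, and relies on kind's convention of naming the context `kind-<cluster name>` (seen earlier as `kind-myk8s`). The helper `kind_context` is hypothetical and only illustrates the naming rule.

```shell
#!/bin/sh
# kind_context is a hypothetical helper: kind names the kubectl context "kind-<cluster name>".
kind_context() {
  printf 'kind-%s\n' "$1"
}

# Cluster names are an assumption taken from this lab (west and east).
for cluster in west east; do
  ctx=$(kind_context "$cluster")
  echo "cluster=$cluster context=$ctx"
  # Query the cluster only when kubectl is installed; a failure is reported, not fatal.
  if command -v kubectl >/dev/null 2>&1; then
    kubectl --context "$ctx" get nodes 2>/dev/null || echo "  ($ctx is not reachable yet)"
  fi
done
```

Passing `--context` explicitly avoids depending on whichever cluster `current-context` happens to point at, which changes every time `kind create cluster` runs.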

Deploying the west and east kind k8s clusters

# Deploy the west cluster first.

$ kind create cluster --name west --image kindest/node:v1.33.2 --config - <<EOF

kind: Cluster

apiVersion: kind.x-k8s.io/v1alpha4

nodes:

- role: control-plane

extraPortMappings: